Thanks to Sarah, Pranav, Peter, Isaac, Vik, Loubna, Sophia, Eugene, Dan, Paige, Luca, Nathan, Graham, Dylan and Jon and our incredible team of volunteers and sponsors and last but not least the 2,200 of you who joined us online and IRL!

It’s been a couple weeks since we recorded the episode, so Anshul recorded a “post-launch craziness” recap with some of the learnings and updates. After listening to the episode, make sure to check it out on YouTube.

Our second podcast guest ever in March 2023 was Varun Mohan, CEO of Codeium; at the time, they had around 10,000 users and vowed to keep autocomplete free forever:

Their decision to price the core auto-completion features for free (when Copilot was $10/month) wasn't just about competition, but a bet on where the value would ultimately be captured in AI development tools: agentic code generation.

We’ve been fortunate to also have a hat-trick of hit posts from Anshul telling us their thinking on building Codeium, all the way from pivot to unicorn startup:

Today, over a million developers use their products, they still have their free tier, and they recently launched Windsurf, an AI IDE, which has been very well reviewed and, more importantly, passed the Latent Space Discord vibe check.

While Cursor chose to fork VS Code (we covered this in our Cursor episode), Codeium initially focused on cross-IDE compatibility. They support Eclipse, JetBrains, etc, which gives them a huge advantage in enterprise environments.

They are now moving beyond text editing to agentic workflows, and they are using their own IDE, Windsurf, to enable that. They are centering it around this idea of a “Cascade” flow which allows the model not only to autocomplete one line, but do multi-step editing of multiple files with the same flow as an engineer.

In the backend, there are now multiple models making decisions for you:

Custom models for both low-latency keystroke-level features and code generation

Proprietary systems for high-quality retrieval for large codebases

Third-party models for high-level planning

All of this is powered by custom infrastructure that allows them to support features like fill-in-the-middle that others don’t have. They are now quickly rolling out new waves of features to build on top of the new Windsurf experience, starting with Wave 1: Cascade Memories and Automated Terminal Commands:

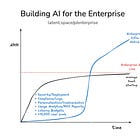

Enterprise Strategy: Going Slow to Go Fast

Unlike many startups that rush to market with minimal features and add enterprise capabilities later, Codeium invested heavily in enterprise-ready infrastructure from the start. This "go slow to go fast" approach has proven particularly valuable in the AI tools space.

When we go to an enterprise that has tens of thousands of developers and they're like, 'does your infrastructure work for tens of thousands of developers?' We can turn around and be like, 'well, we have hundreds of thousands of developers on an individual plan that we're serving.'

Some of the “boring” things they had to invest early on:

Built containerized systems from the start to make on-prem and VPC deployments easier

Invested in security and compliance early

Maintained core competencies in critical areas like model inference and distributed systems, even if they were overkill early on

Varun mentioned <10% of the Fortune 500 cos they talk to are fully on GitHub. This forced them to support multiple version control platforms and systems like GitLab, Bitbucket, Perforce, CVS, Mercurial, etc.

The other choice they made is that rather than immediately hiring traditional enterprise salespeople, the founders first sold to 30-40 customers themselves to understand the dynamics. This hands-on experience shaped their view of what enterprise sales in AI tools requires:

Technical Depth: Unlike traditional enterprise software, AI tool sales require deep technical understanding, as the differences between all these tools are quite nuanced. An outsider would probably think that GitHub Copilot, Cursor, and Codeium are all the same thing.

Deployment Engineering: Taking inspiration from Palantir's model, Codeium employs deployment engineers who work closely with sales teams. These technical specialists help customers with deployments, but also bring back a lot of product feedback that you wouldn’t otherwise get from X or Discord.

Continuous Learning: "Our technology is changing very quickly... People [that are buying our technology] are curious about RAG, right? And imagine we had a sales team that is scaling where no one understands any of this stuff. We're not going to be great partners for our customers."

They started with a free-forever model, then added a $10/mo Pro plan, went up to $15/mo, and later added a $60/mo upgrade. There’s then the usual “Enterprise Plan” with custom pricing.

If you’re a founder building in the AI space, this episode is a great deep dive on how to think about the boundaries of models and your product, and how to build an effective go-to-market in a crowded space.

Show Notes

Anshul’s Guest Posts:

Chapters

00:00:00: Introductions & Catchup

00:03:52: Why they created Windsurf

00:05:52: Limitations of VS Code

00:10:12: Evaluation methods for Cascade and Windsurf

00:16:15: Listener questions about Windsurf launch

00:20:30: Remote execution and security concerns

00:25:18: Evolution of Codeium's strategy

00:28:29: Cascade and its capabilities

00:33:12: Multi-agent systems

00:37:02: Areas of improvement for Windsurf

00:39:12: Building an enterprise-first company

00:42:01: Copilot for X, AI UX, and Enterprise AI blog posts

Transcript

Alessio [00:00:04]: Hey everyone, welcome to the Latent Space podcast. This is Alessio, partner and CTO at Decibel Partners, and I'm joined by my co-host Swyx, founder of Smol AI.

Swyx [00:00:12]: Hey, and today we are delighted to be, I think, the first podcast in the new Codeium office. So thanks for having us and welcome Varun and Anshul.

Varun [00:00:21]: Thanks for having us.

Swyx [00:00:23]: This is the Silicon Valley office? So like, what's the story behind this?

Varun [00:00:25]: The story is that the office was previously, so we used to be on Castro Street, so this is in Mountain View. And I think a lot of the people at the company previously, you know, were in SF or are still in SF. And actually one thing, if you notice about the company, is it's actually like a two minute walk from the Caltrain. And I think we didn't want to move the office like very far away from the Caltrain. That would probably, you know, piss off a lot of the people that lived in San Francisco, this guy included. So we were like scouting a lot of spaces nearby. And this area popped up. It previously was being leased by, I think, Facebook slash WhatsApp. And then immediately after that, Ghost Autonomy. And then now here we are. And we also, you know, I guess one of the things that the landlord told us was this was the place that they shot all the scenes for Silicon Valley, at least like externally and stuff like that. So that just became a meme. Trust me, that wasn't like the main reason why we did it. But we've leaned into it.

Swyx [00:01:21]: It doesn't hurt. Yeah. And obviously that played a little bit into your launch with Windsurf as well. Yeah. So let's get caught up. You were guest number four. I think it was two. Maybe it was two. Two. Might have been two. So a lot has happened since then. You've raised a huge round and also just launched your IDE. Like what's been the progress over the last year or so since Latent Space people last saw you?

Varun [00:01:44]: Yeah. So I think the biggest things that have happened are Codeium's extensions have continued to gain a lot of sort of popularity. You know, we have over 800,000 sort of developers that use that product. Lots of large enterprises also use the product. We were recently awarded JPMorgan Chase's Hall of Innovation Award, which is usually not something a company gets, you know, within a year of deploying an enterprise product. And then large companies like Dell and stuff use the product. So I think we've seen a lot of traction on the enterprise space. But I think one of the most exciting things we've launched recently is actually this IDE called Windsurf. And I think for us, one of the things that we've always thought about is how do we build the most powerful AI system for developers everywhere? The reason why we started out with the extension system was we felt that there were lots of developers that were not going to be on one platform. And that still is true, by the way. Outside of Silicon Valley, a lot of people don't use GitHub. This is like a very surprising finding. But most people use GitLab, Bitbucket, Gerrit, Perforce, CVS, Harvest, Mercurial. I could keep going down a list, but there's probably 10 of them. GitHub might have less than 10% penetration of the Fortune 500. Full penetration. It's very small. And then also on top of that, there are very high switching costs for source code management tools, right? Because of all the dependent systems on this workflow software, it's much harder than even switching off of a database. So because of that, we actually found ways in which we could be better partners to our customers, regardless of where they stored their source code. And then more specifically on the IDE category, a lot of developers, surprise, surprise, don't just write TypeScript and Python. Right? They write Java. They write Golang. They write a lot of different languages. 
And then high-quality language servers and debuggers matter. Very honestly, JetBrains has the best debugger for Java. It's not even close. Right? These are extremely complex pieces of software. We have customers where over 70% of their developers use JetBrains. And because of that, we wanted to provide a great experience wherever the developer was. But one thing that we found was lacking was, you know, we were running into the limitations of building within the VS Code ecosystem on the VS Code platform. And I think we felt that there was an opportunity for us to build a premier sort of experience. And that was within the reach of the team. Right? The team has done all the work, all the infrastructure work to build the best possible experience and plug it into every IDE. Why don't we just build our own IDE? Right? Because it's by far the best experience. And as these agentic products start to become more and more possible, and all the research we've done on retrieval and just reasoning about code bases came more and more to life, we were like, hey, if we launch this agentic product on top of a system that we didn't have a lot of control over, it's just going to limit the value of the product and we're just not going to be able to build the best tool. That's why we were super excited to launch Windsurf. I do think it is the most powerful IDE system out there right now in the capability. Right? And this is just the beginning. I think we suspect that there's much, much more we can do, more than just the autocomplete sort of side. Right? When we originally talked, probably autocomplete was the only piece of functionality the product actually had. And we've come a long way since then. Right? These systems can now reason about large code bases without you adding everything. Like when you use Google, do you say like @New York Times post, blah, blah, blah, and like ask it a question? No. 
We want it to be a magical experience where you don't need to do that. We want it to actually go out and execute code. We think code execution is a really, really important piece. And when you write software, you don't just kind of come up with an idea. The way software kind of gets created is software is originally this amorphous blob. And as time goes on and you have an idea, the blob and the clouds sort of disappear and you see this mountain. And we want it to be the case that as soon as you see the mountain, the AI helps you get to the mountain. And as soon as you see the mountain, the AI just creates the mountain for you. Right? And that's why we don't believe in this sort of modality where you just write a task and it just goes out and does it. Right? It's good for zero to one apps. And I think people have been seeing Windsurf is capable of doing that. And I'll let Anshul talk about that a little bit. But we've been seeing real value in real software development, which is more to say, this is not to say that current tools can't. But I think more in the process of actually evolving code from a very basic idea. Code is not really built as you have a PRD and then you get some output out. It's more like you have a general vision. And yes, as you write the code, you get more and more clarity on approaches that don't work and do work. You're killing ideas and creating ideas constantly. And we think Windsurf is the right paradigm for that.

Alessio [00:05:52]: Can you spell out what you couldn't do in VS Code? Because I think when we did the Cursor episode, everybody on Hacker News was like, oh, why did you fork? You could have done it in an extension. Like, can you maybe just explain more of those limitations?

Anshul [00:06:08]: I mean, I think a lot of the limitations around like APIs are pretty well documented. I don't know if we need to necessarily go down that rabbit hole. I think it was when we started thinking, OK, what are the pieces that we actually need to give the AI to get to that kind of, you know, emergent behavior that we're going to talk about? And yes, we were talking about all the knowledge retrieval systems that we've been building for the enterprise all this time. Like, that's obviously a component of that. You know, we were talking about all the different tools that we could give it access to so it can go, like, do that kind of terminal execution and things like that. But the third main category that we realized would be like kind of that magical thing where you're not out there writing out a PRD, you're not scoping the problem for the AI, is if we're actually able to understand the trajectory of what developers are doing within the editor, right? If we're actually able to see like, oh, the developer just went and opened up this part of the directory and tried to view it, then they made these kind of edits and they tried to do like some kind of commands in the terminal. And if we actually understand that trajectory, then the AI can just immediately be like, oh, I understand your intent. This is what you want to do, without you having to spell it all out for it. That is when that kind of magic would really happen. I think that was kind of like that intuition. So you have the restrictions of the APIs that are well documented. We have the kind of vision of like what we actually need to be able to hook into to really expose this. And I think it was that combination of those two where we're like, I think it's about time to do the editor. The editor was not like necessarily a new idea. I think we've been talking about the editor for a very long time. 
I think it's like, of course, we just pulled it all together in the last couple of months. But it was always something in the back of the mind. And it's only when we started realizing, OK, the models are now capable of doing this. We actually can look at this data. Like we have a really good context awareness system. We're like, I think now's the time. And we went on and executed on it.
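As a rough illustration of the "trajectory" idea Anshul describes, the sketch below records editor events (files opened, edits made, terminal commands run) and renders the most recent ones as text that could be placed in front of a model prompt. The `Event` and `Trajectory` names, the event kinds, and the rendering format are all hypothetical; nothing here reflects how Windsurf actually represents this data.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Event:
    kind: str    # hypothetical kinds: "open", "edit", "terminal"
    detail: str  # file path, edit summary, or shell command


@dataclass
class Trajectory:
    events: List[Event] = field(default_factory=list)

    def record(self, kind: str, detail: str) -> None:
        """Append one developer action to the trajectory."""
        self.events.append(Event(kind, detail))

    def render(self, last_n: int = 5) -> str:
        """Format the most recent events as context for a model prompt."""
        recent = self.events[-last_n:]
        return "\n".join(f"[{e.kind}] {e.detail}" for e in recent)


traj = Trajectory()
traj.record("open", "src/api/handlers.py")
traj.record("edit", "src/api/handlers.py: renamed get_user to fetch_user")
traj.record("terminal", "pytest tests/test_handlers.py")
print(traj.render())
```

The point of the sketch is only that intent can be inferred from an ordered log of actions rather than from an explicit task description.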

Alessio [00:07:47]: So it's basically not like there's one action you couldn't do, but it's like how you brought it all together.

Varun [00:07:52]: It's like VS Code is kind of like a sandbox, so to speak. Or let me maybe go one step deeper on each of the aspects that Anshul talked about. Let's go with the API aspect. So right now, I'll give you an example. Supercomplete is actually a feature that I think is like very exciting about the product. Right. It can suggest refactors of the code, and it can do it quickly and very powerfully. On VS Code, actually, the problem for us wasn't being able to implement the feature. We had the feature for a while. The problem was that even to show the feature, VS Code would not expose an API for us to do this. So what we actually ended up doing was dynamically generating PNGs to actually go out and showcase this. It was not really aligned. We actually ended up doing it ourselves and it took us a couple hours to actually go out and implement this. Right. And that wasn't because we were bad engineers. No, our good engineering time was being spent fighting against the system rather than building a good system. Another example is we needed to go out and find ways to refactor the code. The VS Code API would constantly keep breaking on us. We constantly need to show a worse and worse experience. This actually comes down to the second point which Anshul brought up, which is like we can come up with great work and great research. All the work we have here, like the research on Cascade, is not a couple month thing. This is like a nine months to a year thing that we've been investigating as a company, investing in evals, right? Even the evals for this are a lot of effort, right? A lot of actually systems work to actually go out and do it. But ultimately, like this needs to be a product that developers actually use. And I think, you know, let's even go for Cascade, for example, like and looking at the trajectory.

Swyx [00:09:13]: Yeah, we'd like to see Cascade because that's the first time you brought it up. Yeah.

Varun [00:09:16]: So Cascade is the product that is the actual agentic part of the product, right? That is capable of taking information from both these human trajectories and these AI trajectories, what the human ended up doing, what the AI ended up doing, to actually propose changes and actually execute code to finally get you the final work output, right? I'll even talk about something very basic. Cascade gives you a bunch of code. We want developers to very easily be able to review this code. Okay, then we can show developers a hideous UI that they don't want to look at and no one's going to really use this product. And we think that this is like a fundamental building block for us to make the product materially better. If people are not even willing to use the building block, where does this go? Right. And we just felt our ceiling was capped on what we could deliver as an experience. Interestingly, JetBrains is a much more configurable paradigm than VS Code is, but we just felt so limited on both the sort of directions that Anshul said that we were just like, hey, if we actually remove these limitations, we can move substantially faster. And we believe that this was a necessary step for us.

Swyx [00:10:12]: I'm curious more about the evals, because you brought it up and we have to ask about evals anytime anyone brings up evals. Yeah. How do you evaluate a thing like this that is so multi-stepped and so spanning, like so much context?

Varun [00:10:26]: So what you can imagine we can sort of do, and this is like one of the beautiful things about code, is code can be executed. We could go take a bunch of open source code. We can find a bunch of commits, right? And we can actually see if some of these commits have tests associated with them. We can start stripping the commits, and the approach of stripping the commits is good because it tests the fact that the code is in an incomplete state, right? When you're writing the commit, the goal is not that the commit has already been written for you. You're given it in a state where the entire thing has not been written. And can we go out and actually retrieve the right snippets and actually come up with a cohesive plan and iterative loop that gets you to a state where the code actually passes? So you can actually break down and decompose this complex problem into like a planning, retrieval, and multi-step execution problem. And you can see if every single one of these axes is getting better. And if you do this across enough repositories, you've turned this highly discontinuous and discrete problem of make a PR work versus make it not work into a continuous problem. And now that's a hill you can actually climb. And that's a way that you can actually apply research where it's like, hey, my retrieval got way better. This made my eval get better, right? And then notice how the way the eval works is I'm not that interested in the eval where you're purely given the commit message and you finish the entire thing. I'm more interested in the case where the code is in an incomplete state and the commit message isn't even given to you, because that's another thing about developers. They are not willing to tell you exactly what's in their head. That's the actual important piece of this problem. We believe that developers will never completely pose the problem statement, right? Because the problem statement lives in their head. Conversations that you and I have had at the coffee area. 
Conversations that I've had over Slack. Conversations I've had over Jira. Maybe not Jira, let's say Linear. That's the cool thing nowadays.

Anshul [00:12:02]: They're talking about Jira.

Varun [00:12:03]: Yeah, so conversations I've had on Linear. And all of these things come together to actually finally propose sort of a solution there, which is why we want to test the incomplete code. What happens if the code is in an incomplete state? And am I actually able to make this pass without the commit? And can I actually guess your commit well? Well, now you can convert the problem into a masked prediction problem where you want to guess both the high-level intent as well as the remainder of changes to make the actual test pass. And you can imagine if you build up all of these, now you can see, hey, my systems are getting better. Retrieval quality is getting better. And you can actually start testing this on larger and larger code bases, right? And I guess that's one thing that, to be honest, we could have done a little faster. We had the technology to go out and build these zero-to-one apps very quickly. And I think people are using Windsurf to actually do that. And it's extremely impressive. But the real value, I think, is actually much, much deeper than that. It's actually that you take a large code base, and it's actually a really good first pass. And I'm not saying it's perfect, but it's only going to keep getting better. And we have deep infrastructure that actually is validating that we are getting better on this dimension.
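The eval Varun describes (strip part of a commit, withhold the commit message, let the system finish the change, then run the tests) can be sketched roughly as follows. This is a toy under stated assumptions: `CommitTask`, `strip_commit`, and `pass_rate` are hypothetical names, a commit is modeled as an ordered list of string hunks, and `passes` stands in for actually running the commit's test suite. It is not Codeium's harness.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class CommitTask:
    hunks: List[str]                     # the commit's changes, in order
    passes: Callable[[List[str]], bool]  # stand-in for running the commit's tests


def strip_commit(hunks: Sequence[str], keep_fraction: float) -> List[str]:
    """Keep only the first part of the commit, leaving the code incomplete."""
    keep = int(len(hunks) * keep_fraction)
    return list(hunks[:keep])


def pass_rate(
    tasks: Sequence[CommitTask],
    agent: Callable[[List[str]], List[str]],
    keep_fraction: float = 0.5,
) -> float:
    """Fraction of stripped commits the agent completes so that tests pass."""
    if not tasks:
        return 0.0
    passed = 0
    for task in tasks:
        partial = strip_commit(task.hunks, keep_fraction)
        completed = agent(partial)  # note: no commit message is provided
        if task.passes(completed):
            passed += 1
    return passed / len(tasks)


# Toy data: each "test" just checks that the full change was reproduced.
tasks = [
    CommitTask(["A", "B"], lambda h: h == ["A", "B"]),
    CommitTask(["X", "Y"], lambda h: h == ["X", "Y"]),
]
toy_agent = lambda partial: partial + ["B"]  # always guesses "B" as the rest
print(pass_rate(tasks, toy_agent))
```

Each individual task is pass or fail, but averaging over many repositories and commits gives the continuous score he refers to as a hill you can climb.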

Anshul [00:13:02]: You mentioned the end-to-end evals that we have for the system, which I think are super cool. But I think you can even decompose each of those steps, right? The idea is just take retrieval, for example. How can we make the eval for retrieval really good? And I think this is just a general thing that's been true about us as a company. It's like most evals and benchmarks that exist out there for software development are kind of bogus. There's not really a better way of putting it. Like, okay, you have SWE-bench, that's cool. No actual professional work looks like SWE-bench. Like, HumanEval, same thing. Like, these things are just a little kind of broken. So when you're trying to optimize against a metric that's a little bit broken, you end up making kind of suboptimal decisions. So something that we're always very keen on is like, okay, what is the actual metric that we want to test for this part of the system? And so take retrieval, for example. A lot of the benchmarks for these embedding-based systems are like needle in the haystack problems. Like, I want to find this one particular piece of information out of all this potential context. That's not really what actually is necessary for doing software engineering because code is a super distributed knowledge store. You actually want to pull in snippets from a lot of different parts of the code base in order to do the work, right? And so we built systems that, instead of looking at retrieval at one, you're looking at retrieval at like 50. What are the 50 highest things that you can actually retrieve? And are you capturing all of the necessary pieces for that? And what are all the necessary pieces? Well, you can look again back at old commits and see what were all the different files that together were edited to make a commit because those are semantically similar things that might not actually show if you actually try to map out a code graph, right? 
And so we can actually build these kind of golden sets. We can do this evaluation even for sub-problems in the overall task. And so now we have like, you know, an engineering team that can iterate on all of these things and still make sure that the end goal that we're trying to build to is like really, really strong so that we have confidence of what we're pushing out.
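A minimal sketch of the retrieval metric Anshul outlines: treat the set of files edited together in a historical commit as the "golden set", and measure how much of it appears in the top k retrieved results. The function names and file paths are illustrative assumptions; only the recall-at-k definition itself is standard.

```python
from typing import List, Sequence, Set, Tuple


def recall_at_k(ranked: List[str], golden: Set[str], k: int = 50) -> float:
    """Share of the golden set that appears in the top-k retrieved items."""
    if not golden:
        return 0.0
    top_k = set(ranked[:k])
    return len(top_k & golden) / len(golden)


def mean_recall_at_k(
    examples: Sequence[Tuple[List[str], Set[str]]], k: int = 50
) -> float:
    """Average recall@k over (ranked results, golden set) pairs."""
    if not examples:
        return 0.0
    return sum(recall_at_k(r, g, k) for r, g in examples) / len(examples)


# Hypothetical example: the golden set is the files one old commit touched.
ranked_files = ["a.py", "b.py", "c.py", "d.py"]
golden_files = {"a.py", "d.py", "z.py"}
print(recall_at_k(ranked_files, golden_files, k=3))
print(recall_at_k(ranked_files, golden_files, k=50))
```

Unlike a needle-in-the-haystack score, this rewards pulling in every piece a change needs, which matches the "distributed knowledge store" framing above.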

Varun [00:14:43]: And by the way, just to say one more thing about the SWE-bench thing. Just to showcase these existing, I think benchmarks are not a bad thing. You do want benchmarks. Actually, like I would prefer if there are benchmarks versus let's say everything was just vibes, right? But vibes are also very important, by the way, because they showcase where the benchmark is not valuable, because actually vibes sometimes show you where critical issues sort of exist in the benchmark. But like you look at some of the ways in which people have like optimized SWE-bench, it's like make sure to run pytest every time X happens. And it's like, yeah, like sure. You can start like prompting it in like every single possible way. And like, if you remove that, suddenly it doesn't get good at it. It's like, what really matters here? What really matters here is like across a broad set of tasks, you're performing like high quality sort of suggestions for people and people love using the product. And I think actually like the way these things work is beyond a certain point, because yes, I actually think it's valuable beyond a certain point. But once it starts hitting the peak of these benchmarks, getting that last 10% actually probably is like counterintuitive to the actual goal of what the benchmark was. Like you probably should find a new hill to climb rather than sort of p-hacking or really optimizing for how you can get higher on the benchmark. Yeah.

Alessio [00:15:49]: We did an episode with Anthropic about their recent, like SWE-agent, SWE-bench results. And we talked about HumanEval versus SWE-bench. And like HumanEval is kind of like a greenfield benchmark. You know, you need to be good at that. SWE-bench is more existing, but it sounds like, I mean, your eval creation is similar to SWE-bench as far as like using GitHub commits and kind of like that history. But then it's more like masking at the commit level versus just testing the output of the thing. Cool.

Swyx [00:16:15]: Well, we have some listener questions actually about the Windsurf launch. And obviously, I also want to give you the chance to just respond to Hacker News.

Varun [00:16:23]: Oh, man. Hey, let me tell you something very, very interesting. I love Hacker News as much as the next person. But the moment we launched our product, the first comment, like this was a year ago, the first comment was, this product is a virus. And we were like...

Anshul [00:16:38]: This was the original Codeium launch like two years ago. This is the original. Like, I am analyzing the binary as we speak. We'll report back.

Varun [00:16:44]: And then he's like, it's a virus. And I was like, dude, like, it's not a virus, dude.

Anshul [00:16:51]: We just want to give autocomplete suggestions. That's all we want to do.

Varun [00:16:54]: Yeah. Okay.

Alessio [00:16:55]: Wow. I didn't expect that. And then there was like Teo drama. There's enough drama on the launch to cover. But I don't know if we want to just make this a Cascade piece. But we had a bunch of people in our Discord try out the product, give a lot of feedback. One question people have is like, to them, Cascade already felt pretty agentic. Like, is that something you want to do more of? You know, obviously, since you just launched an IDE, you're kind of like focusing on having people write the code. But maybe this is kind of like the Trojan horse to just do more full-on end-to-end, like, code creation. Devin style. Yeah.

Anshul [00:17:27]: I think it's like, how do you get there in a real principled manner? We obviously have enterprises asking us all the time, like, oh, when's it going to be like end-to-end work? The reality is like, okay, well, if we have something in the IDE that, again, can see your entire actions and get a lot of intent that you can't actually get if you're not in the IDE, I mean, if the agent there has to always get human, like, involvement to keep on fixing itself, it's probably not ready to become a full end-to-end automated system because then we're just going to turn into a linter where, like, it produces a bunch of things and no one looks at any of it. Like, that's not the great end state. But if we start seeing, like, oh, yeah, there's common patterns that people do that, like, never require human involvement, just end-to-end just totally works without, like, any, like, intent-based information, sure, that can become, like, fully agentic. And, like, we will learn what those tasks are, like, pretty quickly because we have a lot of data.

Varun [00:18:14]: Maybe to add on to that, I think that if the question is, like, is fully agentic, like Devin, the goal, I think, like, yes, the answer is this product should become fully agentic, and limited human interaction is 100% the goal. And I think, honestly, of all usable products in an IDE, I think we're the closest right now. Now, let me caveat this by saying I think there are lots of hard problems that have yet to be solved that we need to go out and solve to actually make this happen. Like, for instance, I think one of the most annoying parts about the product is the fact that you need to accept every command that kind of gets run. It's actually fairly annoying. I would like it to go out and run it. Unfortunately, me going out and running arbitrary binaries has some problems in that if it, like, rm -rf's my hard disk, I'm not going to be... It's actually a virus. I'm not saying, actually, the hacker needs to be with you. Yeah, it does become a virus. I think this is solvable with, like, with complex systems. I think we love working on complex systems infrastructure. I think we'll solve it. Now, the simpler way to go about solving this is don't run it on the user's machine and run it somewhere else because then if you bork that machine, you're kind of totally fine. I think, though, maybe there's a little bit of trade-off of, like, running it locally versus remotely, and I think we might change our mind on this, but I think the goal for this is not for this to be the final state. I think the goal for this is, A, it's actually able to do very complex tasks with limited human interaction, but it needs to know when to actually go back to the human, right? Also, on top of that, compress every cycle that the agent is running. Right now, actually, I even feel like the product is too slow for me sometimes. Even with it running really fast, it's objectively pretty fast, I would still want it to be faster, right? 
So there is, like, systems work and probably modeling work that needs to happen there to make the product even faster on both the retrieval side and the generation side, right? And then finally speaking, I think another key piece here that's, like, really important is I actually think asking people to do things explicitly is probably going to be more of an anti-pattern if we can actually go and passively suggest the entire change for the user. So almost imagine, as the user is using the product, that we're going to suggest the remainder of the PR without the user kind of, like, even asking us for it. I think this is sort of the beginning of the process. But, yeah, like, these are hard problems. I can't give a particular deadline for this. I think this is, like, a big step up from what we had particularly in the past. But I think what Anshul said is 100% true, but the goal is for us to get better at this.
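To make the "accept every command" trade-off concrete, here is one possible shape of a gate that auto-runs a small set of read-only commands and asks the human about everything else. The allowlists and the `needs_approval` function are hypothetical, and this is not how Windsurf decides what to run. An allowlist like this is also not a sandbox; it only reduces how often the user is prompted.

```python
import shlex

# Hypothetical allowlists: programs treated as read-only for this sketch.
SAFE_PROGRAMS = {"ls", "cat", "git"}
SAFE_GIT_SUBCOMMANDS = {"status", "diff", "log"}
# Characters that enable chaining, redirection, or substitution in a shell.
SHELL_METACHARS = set(";|&><`$")


def needs_approval(command: str) -> bool:
    """Return True if a human should confirm the command before it runs."""
    if any(ch in SHELL_METACHARS for ch in command):
        return True
    try:
        parts = shlex.split(command)
    except ValueError:  # e.g. unbalanced quotes
        return True
    if not parts:
        return True
    program = parts[0]
    if program not in SAFE_PROGRAMS:
        return True
    if program == "git":
        return len(parts) < 2 or parts[1] not in SAFE_GIT_SUBCOMMANDS
    return False


for cmd in ["git status", "ls -la", "git push origin main", "rm -rf /"]:
    print(cmd, "->", "ask human" if needs_approval(cmd) else "auto-run")
```

The default is to ask: anything the gate cannot parse or does not recognize goes back to the human, which mirrors the point that the agent needs to know when to hand control back.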

Alessio [00:20:30]: I mean, the remote execution thing is interesting. You wrote a post about the end of localhost. Yeah. It's almost like then we were kind of like, well, no, maybe we do need the internet and, like, people want to run things. But now it's like, okay, no, actually, I don't really care. Like, I want the model to do the thing. And if you were like, you can do a task end-to-end, but it needs to run remotely, not on your computer, I'm sure most people would say, yeah.

Varun [00:20:50]: No, I agree with that. I actually agree with it running remotely. That's not a security issue. I actually, I totally agree with you that it's possible that everything could run remotely.

Swyx [00:20:59]: That's how it is at most, like, big cos, like Facebook, like, nobody runs things locally. No one does.

Varun [00:21:04]: In fact, you connect to a... A sensation to the mainframe. You're right on that. Maybe the one thing that I do think is kind of important for these systems that is more than just running remotely is, basically, you're not running it remotely. Basically, like, you know, when you look at these agents, there's kind of, like, a rollout of a trajectory. And I kind of want to roll this trajectory back, right? In some ways, I want, like, a snapshot of the system that I can, like, constantly checkpoint and move back and forth. And then also, on top of that, I might want to do multiple rollouts of this. So, basically, I think there needs to be a way to almost, like, move forward and move backwards the system. And whether that's locally or remotely, I think that's necessary. But every time, if you move the system forward, it, like, destroys your machine. It's probably going to be a hard system to kind of, or potentially destroys your machine. That's just not a workable solution. So, I think the local versus remote, I think you still need to solve the problem of this thing is not going to destroy your machine on every execution, if that makes sense. Yeah.
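The checkpoint-and-rollback idea Varun describes can be sketched in a few lines. This is a toy illustration under loud assumptions, not how Windsurf or any time-travel VM actually works: the `SnapshotWorkspace` class, the `run_rollouts` helper, and the in-memory dict standing in for a machine's filesystem are all hypothetical names invented for the example.

```python
import copy


class SnapshotWorkspace:
    """Toy stand-in for a machine: a dict of path -> contents (an assumption)."""

    def __init__(self, files=None):
        self.files = dict(files or {})
        self._snapshots = []

    def checkpoint(self):
        """Save a deep copy of the current state and return its id."""
        self._snapshots.append(copy.deepcopy(self.files))
        return len(self._snapshots) - 1

    def rollback(self, snapshot_id):
        """Restore the state saved under snapshot_id."""
        self.files = copy.deepcopy(self._snapshots[snapshot_id])


def run_rollouts(workspace, trajectories, score):
    """Try each trajectory from the same checkpoint; return the best index.

    Each trajectory is a list of functions that mutate workspace.files.
    The workspace is rolled back after every rollout, so none of them
    leaves side effects behind.
    """
    base = workspace.checkpoint()
    scores = []
    for trajectory in trajectories:
        for step in trajectory:
            step(workspace.files)  # move the system forward
        scores.append(score(workspace.files))
        workspace.rollback(base)  # move the system backwards
    return max(range(len(scores)), key=scores.__getitem__)


def destructive(files):
    files.clear()  # the "destroys your machine" case


def helpful(files):
    files["main.py"] = "print('hello')\n"


ws = SnapshotWorkspace({"README.md": "docs\n"})
best = run_rollouts(ws, [[destructive], [helpful]], score=len)
print(best, ws.files)
```

The destructive rollout is harmless here only because every step runs against state that can be restored. A real system would need the same property for processes, network calls, and disk, which is the hard part the conversation is pointing at.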

Swyx [00:21:53]: There's a category of emerging infrastructure providers that are working on time travel VMs.

Anshul [00:21:58]: And if Varun's first episode on this podcast was any indication, we like infrastructure problems. Yeah, okay.

Swyx [00:22:03]: Oh, so you're going there.

Anshul [00:22:04]: All right.

Alessio [00:22:05]: Well, that's funny, right? It's like, when we first had you, you were doing so much on, like, actual model inference, optimization, all these things. And today, it's almost like... It's Claude, it's 4o. It's like, you know, people are, like, forgetting about the model, you know, and now it's all about at a higher level of abstraction. Yeah.

Varun [00:22:21]: So, maybe I can say, like, a little bit about how our strategy on this has, like, evolved. Because it objectively has, right? I think I would be lying if I said it hasn't. The things like autocomplete and supercomplete that run on every keystroke are entirely, like, our own models. And, by the way, that is still because properties like FIM, fill-in-the-middle capabilities, are still quite bad with the current... Non-existent. They're very bad. Non-existent. They're not good, actually, at it.

Swyx [00:22:46]: Because FIM is an actual, like, how you order the tokens.

Varun [00:22:49]: Yes, it's how you order the tokens, actually, in some ways. And this is sort of, if you look at what these products have sort of become, and this is great, is a lot of the Claudes and the OpenAIs have focused on kind of the chat-like assistant API, where it's, like, complete pieces of work, message, another complete piece of work. So, multi-turn, kind of back-and-forth systems. In fact, like, actually, even these systems are not that good at making point changes. When they make point changes, they kind of are, like, off here and there by a little bit. Because, yeah, when you, like, are doing multi-point kind of, like, conversations, it's, you know, exact diffs getting applied is not, like, even a perfect science still yet. So, we care about that. The second piece where we've actually sort of trained our models is actually on the retrieval system. And this is not even for embedding, but, like, actually being able to use high-powered LLMs to be able to do much higher-quality retrieval across the code base, right? So, this is actually what Anshul said. For a lot of the systems, we do believe embeddings work, but for complex questions, we don't believe embeddings can encapsulate all the granularity of a particular query. Like, imagine I have a question on a code base of, find me all quadratic time algorithms in this code base. Do we genuinely believe the embedding can encapsulate the fact that this function is a quadratic time function? No, I don't think it does. So, you are going to get extremely poor precision and recall at this task. So, we need to apply something a little more high-powered to actually go out and do that. So, we've actually built, like, large distributed systems to actually go out and run this. Run these at scale. Run custom models at scale across large code bases. So, I think it's more a question of that. The planning models right now, undoubtedly, I think the Claudes and the OpenAIs have the best products. 
I think Llama 4, depending on where it goes, it could be materially better. It's very clear that they're willing to invest a similar amount of compute as the OpenAIs and the Anthropics. So, we'll see. I would be very happy if they got really good, but unclear so far.
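To make the "FIM is how you order the tokens" point concrete, here is a minimal sketch of prefix-suffix-middle ordering. It is an illustration only and not Codeium's format: the sentinel strings and both helper functions are placeholders made up for the example, and real models define their own special tokens.

```python
# Placeholder sentinel strings (assumptions); real models use their own tokens.
FIM_PREFIX = "<fim_prefix>"
FIM_SUFFIX = "<fim_suffix>"
FIM_MIDDLE = "<fim_middle>"


def build_fim_prompt(document: str, cursor: int) -> str:
    """Reorder the text around the cursor so the model sees the suffix first.

    A left-to-right model can only continue what it has read, so the code
    after the cursor is moved in front of the point where generation starts.
    """
    prefix, suffix = document[:cursor], document[cursor:]
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


def build_fim_training_example(document: str, start: int, end: int) -> str:
    """Build a training string: the same ordering with the true middle appended."""
    prefix, middle, suffix = document[:start], document[start:end], document[end:]
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}{middle}"


code = "def add(a, b):\n    return \n"
cursor = code.index("return ") + len("return ")
prompt = build_fim_prompt(code, cursor)
example = build_fim_training_example("x = 1 + 2\n", 4, 9)
print(repr(prompt))
print(repr(example))
```

A model trained only on chat-style turns never sees text arranged this way, which is one plausible reading of why Varun says general-purpose models are weak at fill-in-the-middle.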

Swyx [00:24:32]: Don't forget Grok.

Varun [00:24:33]: Hey, dude, I think Grok is also possible. Yeah. Right? I think, don't doubt Elon.

Swyx [00:24:37]: Okay. I didn't actually know. It's not obvious when I use Cascade. I should also mention that, you know, I was part of the preview. Thanks for letting me in. And I've been maining Windsurf for a long time. It's not actually obvious. You don't make it obvious that you are running your own models. Yeah. I feel like you should. So that, like, I feel like it has more differentiation. Like, I only have exclusive access to your models via your IDE than having the drop-down as is Claude and 4o.

Varun [00:25:01]: Because I actually thought that was what you did. No. So, actually, the way it works is the high-level planning that is going on in the model is actually getting done with products like Claude. But the extremely fast retrieval, as well as the ability to, like, take the high-level plan and actually apply it to the code base, is proprietary systems that are running internally.

Swyx [00:25:18]: And then the stuff that you said about embeddings not being enough. Are you familiar with the, I'd call it the late interaction?

Varun [00:25:24]: No, I actually have never heard of it. Yeah.

Swyx [00:25:25]: So, this is ColBERT, or, like, the guy, Omar Khattab, from, I think, Stanford, has been promoting this a lot. It is basically what you've done. Okay. It's sort of embedding on retrieval rather than pre-embedding. Okay. In a very loose sense.
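For readers who have not seen late interaction, the scoring step used by ColBERT-style retrievers can be sketched as below. This is a toy with hand-written two-dimensional vectors and is not a description of Codeium's retrieval system. In ColBERT the document token vectors are still computed ahead of time; what is "late" is that query and document are compared token by token at scoring time instead of being collapsed into one vector each. All function names here are made up for the example.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def mean_pool(vectors):
    """Collapse a list of token vectors into a single averaged vector."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def single_vector_score(query_vecs, doc_vecs):
    """Classic embedding retrieval: one vector per query, one per document."""
    return dot(mean_pool(query_vecs), mean_pool(doc_vecs))


def late_interaction_score(query_vecs, doc_vecs):
    """MaxSim: each query token takes its best-matching document token."""
    return sum(max(dot(q, d) for d in doc_vecs) for q in query_vecs)


# Toy token embeddings (made-up numbers, not model outputs).
query = [[1.0, 0.0], [0.0, 1.0]]
doc_a = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]  # matches both tokens, plus noise
doc_b = [[0.5, 0.5]]                            # vaguely similar to everything

for name, doc in (("doc_a", doc_a), ("doc_b", doc_b)):
    print(name, single_vector_score(query, doc), late_interaction_score(query, doc))
```

In this toy case pooling averages `doc_a` down to a zero vector, so the single-vector score prefers `doc_b`, while the token-level score prefers `doc_a`, the document that actually contains a match for each query token.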

Varun [00:25:38]: I think that sounds like a very good idea that is very similar to what we're doing.

Anshul [00:25:42]: Sounds like a very good idea.

Varun [00:25:43]: I think we'd say that. That's like the meme of Obama giving himself a medal right there.

Swyx [00:25:49]: Well, I mean, there might be something to learn from contrasting the ideas and seeing where, like, the study opinion differences. It's also been applied very effectively to vision understanding. Because vision models tend to just consume the whole image, if you are able to sort of focus on images based on the query, I think that, like, can get you a lot of extra performance.

Anshul [00:26:09]: The basic idea of using compute in a distributed manner to do, you know, operations over a whole set of, like, raw data rather than, like, a materialized view is not anything new, right? Like, I think it's just, like, what does that look like for LLMs?

Swyx [00:26:21]: When I hear you say, like, build large distributed systems, like, you have a very strange product strategy of going down to the individual developer, but also to the large enterprise. Yeah. Is it the same infra that serves everything?

Varun [00:26:31]: I think the answer to that is yes. To be honest, our company is a lot more complex than it would be if we just wanted to serve the individual. And I'll tell you that because we don't really, like, pay other providers to do things for our indexing. We don't pay, like, other providers to do our serving of our own custom models, right? And I think that's a core competency within our company that we have decided to build, but that's also enabled us to go and, like, make sure that when we're serving these products in an environment that works for these large enterprises, we're not going out and being like, we need to build this custom system for you guys, right? This is the same system that serves our entire user base. So that is a very unique decision we've taken as a company, and we admit that there are probably faster ways that we could have done this.

Swyx [00:27:15]: I was thinking, you know, when I was working with you for your enterprise piece, I was thinking, like, this philosophy of go slow to go fast, like, build deliberately for the right level of abstraction that can serve the market that you really are going after.

Anshul [00:27:26]: Yeah, I mean, I would say, like, when writing that piece, looking back and reading it back, it sounds so, like, almost obvious in a sense. Not all of those are really conscious decisions we made. Like, I'll be the first to admit that. But, like, it does help, right? When we go to, like, an enterprise that has tens of thousands of developers, and they're like, oh, wow, like, you know, we have tens of thousands of developers, and, like, does your infrastructure work for tens of thousands of developers? We can turn around and be like, well, we have hundreds of thousands of developers on an individual plan that we're serving. Like, I think we'll be able to support you, right? So, like, being able to do those things, like, we started off by just, like, let's give it to individuals, let's see what people like and what they don't like and learn. But then those become value propositions when we go to the enterprise.

Alessio [00:28:02]: And to recap, when you first came on the pod, it was like, auto-completion is free. And Copilot was $10 a month. And you said, look, what we care about is building things on top of code completion. How did you decide to, like, just not focus on, like, short-term, kind of, like, growth monetization of, like, the individual developer and, like, build some of this? Because the alternative would have been, hey, all these people are using it, so we're going to make this other, like, $5 a month plan, monetize.

Varun [00:28:29]: I think this might be a little bit of, like, commercial instinct that the company has and unclear if the commercial instinct is right. I think that right now, optimizing for making money off of individual developers is probably the wrong, actually, strategy. Largely because I think individual developers can switch off of products, like, very quickly. And unless we have, like, a very large lead trying to optimize for making a lot of profit off of individual developers, it's probably something that someone else could just vaporize very quickly and then they move to another product. And I'm going to say this very honestly, right? Like, when you use a product like Codeium on the individual side, there's not much that prevents you from switching onto another product. I think that will change with time as the products get better and better and deeper and deeper. I constantly say this, like, there's a book in business called, like, Seven Powers, and I think one of the powers that a business like ours needs to have is, like, real switching costs. But, like, you first need something in the product that makes people switch on and stay on before you think about how do you make people switch off. And I think for us, we believe that there's probably much more differentiation we can derive in the enterprise by working with these large companies in a way that is, like, that is interesting and scalable for them. Like, I'll be maybe more concrete here. Individual developers are much more sort of tuned towards small price changes. They care a lot more, right? Like, if our product is $10, $20 a month instead of $50 or $100 a month, that matters to them a lot. And for a large company where they're already spending billions of dollars on software, this is much less important. So you can actually solve maybe deeper problems for them and you can actually kind of provide more differentiation on that angle. 
Whereas, if you're a large company I think individual developers could be churning as long as we don't have the best product. So focus on being the best product, not trying to, like, take price and make a lot of money off of people. And I don't think we will, for the foreseeable future, try to be a company that tries to make a lot of money off individual developers.

Swyx [00:30:15]: I mean, that makes sense. So why $10 a month for Windsurf?

Anshul [00:30:18]: Why $10 a month? $10 a month was actually the pro plan. So we launched our individual pro plan before Windsurf existed. Because I think there's, let's all shut up, we also had to be financially responsible. Right? Yeah, yeah. We can't run out of money. It's a lot of things because of our infrastructure background. We can give, like, for essentially free, like, unlimited autocomplete, unlimited chat on, like, our, you know, faster models. Like, we have a lot of things actually out for free. But yeah, when we start doing things like the supercompletes and really large amounts of indexing and all these things, like, there are real COGS here. Like, we can't ignore that. And so we just created a $10 a month pro plan mostly just to cover the cost. Like, we're not really, like, operating, I think, on much of a margin there either. But, like, okay. Like, just to cover us there. So for Windsurf, it just ended up being the same thing. And everyone who downloads Windsurf in the first, like, I forget, like, a couple of weeks, they get, like, two weeks for free. Let's just have people try it out. Let us know what they like, what they don't like. And that's how we've always operated.

Alessio [00:31:16]: I've talked to a lot of CTOs in, like, the Fortune 100 where most of the engineers they have, they don't really do much anyway. The problem is not that the developer costs $200K and you're saving $8K. It's like, that developer should not be paid $200K. But that's kind of, like, the base price, you know? But then you have developers getting paid $200K that should be paid $500K. So it's almost like you're averaging out the price because most people are actually not that productive anyway. So if you make them 20... $50K, you know? And it's like the bottom end gets kind of, like, squeezed out and then the top end gets squeezed up.

Varun [00:31:56]: Yeah, maybe, Alessio, one thing that I think about a lot, because I do think about this, the per-seat, anything, all of this stuff, I think about a good deal. Let's take a product like Office 365. I will say a lawyer at Codeium uses Microsoft Word way more than I do. I'm still footing the same bill. But the amount of value that he's deriving from Office 365 is probably, you know, tens of thousands of dollars. By the way, everyone, you know, Google Docs, great product. Microsoft Word is a crazy product. It made it so that the moment you review anything in Microsoft Word, the only way you can review it is with other people in Microsoft Word. It's like this virus that penetrates everything. And it not only penetrates within the company, it penetrates across companies too. The amount of value it's driving is way higher for him. So for these kinds of products, there's always going to be this variance between who gets value from these products, right? And you're right. It's almost like a blended because you're actually totally right. Probably this company should be paying that one developer maybe like four times as much. But in weird ways, software is like this team activity enough that there's a bunch of blended outcomes. But hey, like 20% of the four times and there are four people is still going to cover the cost. Right? Across the four individuals, right? And that's how roughly these products kind of get priced out.

Alessio [00:33:01]: I mean, more than about pricing, this is about like the future of like the software engineer. Like we could be very wrong also. Yeah. I think nobody knows.

Swyx [00:33:09]: Reserve the right to be incredibly off.

Anshul [00:33:11]: Yeah.

Swyx [00:33:12]: I mean, business model does impact the product. Product does impact the user experience. So it's all of a kind. I don't mind. We are as concerned about the business of tech as the tech itself. That's cool. Speaking of which, there's other listener questions. Shout out to Daniel Imfeld, who's pretty active in our Discord, just asking all these things. Multi-agent, very, very hot and popular, especially from like the Microsoft Research point of view. Have you made any explorations there?

Varun [00:33:36]: I think we have. I don't think we've called it a multi-agent, which is more so like once you, this notion of having many trajectories that you can spawn off, that kind of like validate sort of some different hypotheses and you can kind of pick the most interesting one. This is stuff that we've actually analyzed internally at the company. By the way, the reason why we have not put these things in, actually, is partially because we can't go out and execute some random stuff in parallel in the meantime. In the meantime, on other sides. Because of the side effects. Because of the side effects, right? So there's some things that are a little bit dependent on us unlocking more and more functionality internally. And then the other thing is, in the short term, I think there is like also a latency component. And I think all of these things can kind of be solved. I actually believe all of these things are solvable problems. They're not unsolvable problems. And if you want to run all of them in parallel, you probably don't want N machines to go out and do it. I think that's unnecessary, especially if most of them are IO-bound kind of operations where all you're doing is reading a little bit of data and writing out a little bit of data. It's not extremely compute-intensive. I think that it's a good idea and probably something we will pursue and is going to be in the product.

Swyx [00:34:36]: I'm still processing what you just said about things being IO-bound. So for a certain class of concurrency, you can actually just run it all in one machine. Yeah, why not?

Varun [00:34:44]: Because if you look at the changes that are made, right, for some of these, it's writing out like, what, a couple thousand bytes? Maybe like tens of thousands of bytes on every tree. That's not a lot. Very small.
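The IO-bound argument can be illustrated with a small sketch: when each rollout spends most of its time waiting on reads, writes, or a model response, one process can interleave many of them. Everything below is a simulation under stated assumptions; `run_rollout`, `run_all`, and the sleep that stands in for IO are invented for the example and say nothing about how Cascade schedules work.

```python
import asyncio
import time


async def run_rollout(rollout_id: int, io_delay: float = 0.05) -> str:
    """Simulate one IO-bound rollout that ends by writing a tiny patch."""
    await asyncio.sleep(io_delay)  # stands in for file reads or a model call
    return f"patch-{rollout_id}"


async def run_all(n: int) -> list:
    """Run n rollouts concurrently on one event loop, in one process."""
    return await asyncio.gather(*(run_rollout(i) for i in range(n)))


start = time.perf_counter()
patches = asyncio.run(run_all(8))
elapsed = time.perf_counter() - start

# The waits overlap, so the total is typically close to one delay
# rather than eight delays back to back.
print(patches)
print(f"elapsed: {elapsed:.2f}s")
```

This only covers the scheduling half of the problem. The rollouts still need isolated state so that parallel trajectories do not step on each other, which is the side-effects issue raised just before.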

Swyx [00:34:54]: What's next for Cascade or Windsurf?

Anshul [00:34:56]: Oh, there's a lot. I don't know. We did an internal poll and we were just like, are you more excited about this launch or the launch that's happening in a month? Or what we're going to come out with in a month? And it was almost uniformly in a month. I think there's some obvious ones. I don't know how much more you want to say. I don't want to speak up. But I think you'd look at all the same axes of the system. How can we improve the knowledge retrieval? We'll always keep on figuring out how to improve knowledge retrieval. In our launch video, we even showed some of the early explorations we have about looking to other data sources. That might not be the coolest thing to the individual developer building a zero-to-one app, but you can really believe that the enterprise customers really think that that's very cool. I think on the tool side, I think there's a whole lot more that we can do. I mean, of course, Varun's talked about not just suggesting the terminal commands, but actually executing them. I think that's going to be a huge unlock. You look at the actions that people are taking, like the human actions, the trajectories that we can build. How can we make that even more detailed? And I think all of those things, and you make some even cleaner UI, like the idea of looking at future trajectories, trying a few different things, and suggesting potential next actions to be taken. That doesn't really exist yet, but it's pretty obvious, I think, what that would look like. You open up Cascade, and instead of starting typing, it's just like, here's a bunch of things that we want to do. We kind of joke that Clippy's coming back, but maybe now's the time for Clippy to really shine. So I think there's a lot of ways that we can take this, which I think is the very exciting part. We're calling each of our launches Waves, I believe, because we want to really double down on the aquatic themes.

Swyx [00:36:25]: Oh yeah, does someone actually windsurf at the company? Is that...

Varun [00:36:28]: I

Swyx [00:36:30]: We're living out our dream

Varun [00:36:31]: of being cool enough to windsurf through the rocks.

Anshul [00:36:33]: Yeah, I don't think we can.

Varun [00:36:35]: Yeah, all right.

Anshul [00:36:36]: That was actually something we learned, because I don't think any of us are windsurfers. Like in our launch video, we have someone using Windsurf on a windsurf. You saw that, right? You saw that in the beginning of the video. Someone's at the computer. And we didn't realize, now apparently is the time of the year where there's not enough wind to windsurf. So we were trying to figure out how to do this launch video with Windsurf on a windsurf. Every windsurfer we talked to was like, yeah, it's not possible. And there was one crazy guy who was like, yeah, I think we can do this. And we made it happen. Oh, okay.

Swyx [00:37:02]: That's funny. Is there anything that you want feedback on? Maybe there's a fork in the road. You want feedback. You want people to respond to this podcast and tell you what they want.

Varun [00:37:11]: Yeah, I think there's a lot of things that I think could be more polished about the product that we'd like to improve. Lots of different environments that we're going to improve performance on. And I think there's a lot of things that I think we would love to hear from folks across the gamut. Like, hey, if you have this environment, you use Windows and X version, it didn't work. Or this language, it was very poor. I think we would like to hear it. Yeah, I gave Preb and Kevin a lot of shit for my Python issues. Yeah, yeah, yeah. And I think there's a lot to kind of improve on the environment side. I think, for instance, even just a dumb example, and I think sort of Swyx, this was a common one, is like, yeah, the virtual environment, where is the terminal running? What is all this stuff? These are all basic things that, to be honest, this is not rocket science, but we need to just fix it, right? We need to fix it. So we would love to hear all the feedback from the product. Like, was it too slow? Where was it too slow? What kind of environments could it work way more in? There's a lot of things that we don't know. Luckily, we're daily users of the product internally, so we're getting a lot of feedback inside. But I will say, there's a little bit of Silicon Valley-ism in that a lot of us develop on Mac. A lot of people, once again, over 80% of developers are on Windows. So yeah, there's a lot to learn and probably a lot of improvements down the line.

Swyx [00:38:18]: Have you personally attempted, as the CEO of the company, to switch to Windows just to feel something?

Varun [00:38:24]: the Windows. You know what?

Swyx [00:38:26]: To feel the pain.

Varun [00:38:27]: You know what? Maybe I should.

Swyx [00:38:29]: Actually, I think I will. I mean, your customers, everyone says it's 89% on Windows, right? You live in Windows, you would never not see something that missed.

Varun [00:38:40]: So I think in the beginning, part of the reason why we were hesitant to do that was a lot of our architectural decisions to work on across every IDE was because we were like, we built a platform-agnostic way of running the system on the user's local machine that was only buildable, easily buildable on dev containers that lived on a particular type of platform, so Mac was nice for that. But now there's not really an excuse if I can also make changes to the UI and stuff like that. And yeah, WSL also exists. That's actually something that we need to add to the product. That's how early it is that we have not actually added that. We don't have a remote.

Swyx [00:39:12]: Anything else about Codeium at large? You still have your core business of, the enterprise Codeium? Yeah. Anything moving there or anything that people should know about?

Anshul [00:39:23]: I think a lot is still moving there, right? I think it would be a little bit like, you know, very kind of egotistical as to being like, oh, we have Windsurf now, all of our enterprise customers are going to switch to Windsurf and this is the only, like, no, we still support the other IDEs.

Swyx [00:39:34]: I was going to say, you just talked about your Java guys loving JetBrains. They're never going to leave JetBrains.

Anshul [00:39:38]: They're not. Like, I mean, forget JetBrains, there's still tons and tons of enterprise people on Eclipse. Like, we're still the only code assistant that has an extension in Eclipse. That's still true years in, right? But like, that's because that's our enterprise customers. And the way that we always think about it is like, how do we still maximize the value of AI for every developer? I don't think that part of who we are has changed since the beginning, right? And there's a lot of like, meeting the developers where they are. So, I think on the enterprise side, we're still pretty invested in doing that. We have like a team of engineers dedicated just to making enterprise successful and thinking about the enterprise problems. But really, if we think about it from the really macro perspective, it's like, if we can solve all the enterprise problems for an enterprise and we have products that developers themselves just truly, truly love, then we're solving the problem from both sides. And I think it's one of those things where I think when, you know, we started working with enterprise and we started building like dev tools, right? We started as an infrastructure company. Now we're building dev tools for developers. You really quickly understand and realize just how much developers loving the tool make us successful in an enterprise. There's a lot of enterprise software that developers hate. I want to draw this flywheel. But like, we're giving a tool for people where they're doing their most important work. They have to love it. And it's not like we're like trying to convince, the executives at this company also ask their developers a lot. Do you love this? Like that is like almost always a key aspect of whether or not Codeium is accepted into the organization. I don't think we go from zero to 10 million ARR in less than a year in an enterprise product if we don't have a product that developers love. 
So I think that's why we're just, the IDE is more of a developer love kind of play. It will eventually make it to the enterprise. We still solve the enterprise problems. And again, we could be completely wrong about this, but we hope we're solving the right problems.

Swyx [00:41:17]: It's interesting. I asked you this before we started rolling, but like, it's the same team. That's the same eng team. Like I, in any normal company or like, you know, my normal mental model of company construction, if you were to have like effectively two products like this, like you would have two different teams serving two different needs, but it's the same team. Yeah.

Varun [00:41:34]: I think one of the things that's maybe unique about our company is like, this has not been one company the whole time, right? Like we were first, like this GPU virtualization company pivoted to this. And then after that, we're making some changes and like, I think there's like a versatility of the company and like this, this ability to move where we think the instinct, we have this instinct where, and by the way, the instinct could be wrong, but if we smell something, we're going to move fast. And I think it's more a testament to I think the engineering team rather than any one of us.

Alessio [00:42:02]: Anshul, on December 19, 2022, you wrote one of our guest posts, What Building Copilot for X Really Takes. Estimate inference to figure out latency and quality. Build first party instead of using third party as API. Okay. Figure out real time because ChatGPT and DALL-E are too slow. Optimize prompt because context window is limited, which is maybe not that true anymore. And then merge model outputs with the UX to make the product more intuitive. Is there anything you would add?

Anshul [00:42:34]: I'd give myself like a B minus on that. Some parts of that are accurate. Even like the context like the one that you called out. Yeah, models have larger context lengths now. That's absolutely true. It's grown a lot. But look at an enterprise code base. They have tens of millions of lines of code that's hundreds of billions of tokens. Never going to change. Still being really good at being able to piece together this distributed knowledge is important. So I think there are figures there that I think are still pretty accurate. There's probably some that are less so. First party versus third party. I think we were wrong there. I think I would nuance that to be like, there are certain things that it's really important to first party. Like autocomplete. You have a really specific application that you can't just prompt engineer your way out of or just maybe even fine tune afterwards. You just can't do that. I think there's truth there. But let's also be realistic about the stuff that's coming out from the third-party model providers. Like, Cascade and Windsurf would not have been possible if it wasn't for the rapid improvements with 4o and 3.5. That just wouldn't have been possible. So I'll give myself a B minus. I'll say I passed. But yeah, it's two hours and two years later.

Alessio [00:43:33]: Just to be clear, we're not grading. It's more of a what would you, you know, where are they now? What would you have added? What would you like?

Anshul [00:43:42]: Yeah, I mean, that first post, right? That was when we had literally, I think that was like a few weeks after we had launched Codeium. I think that's like Swyx and I were talking like, maybe we can write this because we're like one of the first products that people can actually use with AI. That's cool.

Swyx [00:43:53]: I specifically liked the Copilot for X thing because everyone, it was so hot.

Anshul [00:43:58]: At that time, everyone was just like, you know, that's all that was. But I think like, you know, we didn't have an enterprise product. I don't even think we were necessarily thinking of an enterprise product at that point. Right? So like, all of the learnings that we've had from the enterprise perspective, which is why I loved coming back for like a third time now on the blog. Some of those, I think we kind of like figured. Some of those, we just honestly walked backwards into. Had to get lucky a lot of the ways. Like we had many, we just did a lot. Like there's so many opportunities and deals we had that we like lost for a variety of reasons that we had to like learn from. There's just so much more to add that there's no way I would have gotten that right in 2022.

Varun [00:44:35]: Can I mention one thing that I think is, hopefully this is not very controversial, but it's like true about our engineering team as a whole. I don't think most of us got much value from ChatGPT. Largely because I think the problem was, and this is maybe a little bit of a different thing. It's like a lot of the engineers at the company who have been writing software for like over eight years, and this is not to say

Varun [00:44:58]: that they're not searching Stack Overflow. They've invested a lot in searching the code base. Right? They can very quickly grep through the code. Incredibly fast. Like every tool. And they've spent like eight years mastering that skill. And ChatGPT being this thing on the side that you need to provide a lot of context to, we were not able to actually get, like my co-founder just basically never used ChatGPT at all. Literally never did. And because of that, probably at the time, one of our incorrect sort of assumptions was probably that, hey, like a lot of these passive systems need to get good because they're always there and these active systems are going to be behind. I think actually, Cascade was the big thing. It's a company where everyone is now using it. Literally everyone. Biggest skeptics. And we have a lot of people at the company that are skeptical of AI. I think this is actually important. Why do you hire them? No, I think, here's the important thing. Those people that were skeptical about AI previously worked in autonomous vehicles. These are not crypto people. These are people that care about technology and want to work on the future. Their bar for good is just very high. They will not form a cult of,

Varun [00:45:56]: They're not going to be the kind of people on Twitter that are like, this changes everything. Like, software as we know it is dead. No, they are people that are going to be incredibly honest and we know if we hit the bar that it is good for them, we found something special. And I think at that time we probably had a lot of sentiment like that. That has changed a lot now. And I think it's actually important that you have believers that are incredibly future looking and people that kind of rein it in. Because otherwise, you just have, you know, this is like autonomous vehicles. You have a very discrete problem. People are just working in a vacuum and there's no signal to kind of bring you down to reality. You have no good way to kill ideas. And there are a lot of ideas we're going to come up with that are just terrible ideas. But we need to come up with terrible ideas. Otherwise, how does anything good come out? And I don't want to call these skeptics. Skeptics suggest that they don't know. They're realists. They're the type of people that when they see a waitlist on a product online, they just will not believe it. They will not think about it at all.

Swyx [00:46:46]: Kudos for launching without a waitlist.

Varun [00:46:48]: By the way, we will never launch with a waitlist. We will never launch with a waitlist. That's the thing at the company. We'd much rather be a company that's considered the boring company than a company that launches once in a while and hopefully it's good.

Swyx [00:46:59]: My joke is generative AI has gotten really good at generating waitlists.

Anshul [00:47:05]: Also, just to clarify, both of us used to work in autonomous vehicles so it doesn't come across as like autonomous vehicles. We love that technology.

Varun [00:47:13]: We love it. I love hard technology problems.

Swyx [00:47:15]: That's what I live for. Amazing. Just to push back on the first-party thing, I accept that the large model labs have just done a lot of work for you that you didn't need to duplicate. But you now are sitting on so much proprietary data that it may be worth training on the trajectories that you're collecting. So maybe it's a pendulum back to first-party.

Anshul [00:47:38]: Yeah, I mean, I think like, I mean, we've been pretty clear from like a security posture perspective. I think there's like both like, you know, customer trust and like, I mean, I kind of want, like, let me opt in. I think that there are signals that we do get from our users that we can utilize. Like, there's a lot of preference information that we get, for example.

Varun [00:47:53]: Which is effectively what you're saying of like, are trajectories good. Like, I will say this, the Supercomplete product that we have has gotten materially better because of us not only using synthetic data, but also getting the preference data from our users of like, hey, given this set of trajectories, here's actually what a good outcome is. And in fact, one of the really beautiful parts about our product that is very different than ChatGPT is

Varun [00:48:18]: that we can see not only that the acceptance happened, but what happened even after that. Right? Like, let's say you accepted it, but then after accepting it, you deleted three or four items in there. We can see that. So that actually lets us get to even better than acceptance as a metric. Because we're in the ultimate work output of the developer.

Anshul [00:48:33]: It's the preference between the acceptance and what actually happened. If you can actually get ground truth of what actually happened, this is the beauty of being in the IDE, then like, yeah, you get a lot of information there.

Swyx [00:48:44]: Did you have this with the extension or is this pure Windsurf?

Anshul [00:48:46]: We had this with the extension.

Swyx [00:48:48]: Yeah, okay, great. The Windsurf just gives you more of the IDE. Yes.

Varun [00:48:50]: So that means you can also start getting more information. Like, for instance, the basic thing that Anshul said, we can see if like a file explorer was opened. It's actually just a piece of information we just cannot see previously. Sure. Yeah.
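The "better than acceptance" signal described above (a suggestion is accepted, then partly deleted) can be illustrated with a minimal sketch. This is not Codeium's implementation: the function names `retained_fraction` and `preference_label`, the line-level comparison, and the 0.8 threshold are all assumptions made for illustration, using only Python's standard `difflib`.

```python
from difflib import SequenceMatcher


def retained_fraction(accepted: str, final: str) -> float:
    """Fraction of the accepted suggestion's lines that survive, in order,
    in the text the developer ended up with (hypothetical metric)."""
    accepted_lines = accepted.splitlines()
    final_lines = final.splitlines()
    if not accepted_lines:
        return 0.0
    # Compare line lists; autojunk is disabled so repeated lines are not ignored.
    matcher = SequenceMatcher(a=accepted_lines, b=final_lines, autojunk=False)
    kept = sum(block.size for block in matcher.get_matching_blocks())
    return kept / len(accepted_lines)


def preference_label(was_accepted: bool, accepted: str, final: str,
                     keep_threshold: float = 0.8) -> str:
    """Turn acceptance plus the later edits into a coarse preference label.
    The threshold is an arbitrary illustrative value."""
    if not was_accepted:
        return "rejected"
    if retained_fraction(accepted, final) >= keep_threshold:
        return "accepted_and_kept"
    return "accepted_then_reworked"


if __name__ == "__main__":
    suggestion = "a = 1\nb = 2\nc = 3\nd = 4"
    after_edits = "import os\na = 1\nd = 4"  # developer deleted two suggested lines
    print(retained_fraction(suggestion, after_edits))
    print(preference_label(True, suggestion, after_edits))
```

A plain acceptance rate would count this suggestion as a success; the retained fraction shows that only half of it survived in what the developer actually kept.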

Anshul [00:49:02]: A lot of intent in there. A lot of intent. Second one. Oh boy.

Alessio [00:49:05]: How to Make AI UX Your Moat.

Anshul [00:49:06]: Oh man. Isn't that funny that we now created like the full UX experience and then an IDE? I think that one is pretty accurate.

Swyx [00:49:14]: That one's an A?

Anshul [00:49:14]: I think that one I'll give myself. I think like we were doing that within the extension. I still think that's true within the extensions as well, right? Like, we got very, very creative with things. Like, Varun mentioned the idea of just like, you know, essentially rendering images to display things. Like, we get creative to figure out what the right UX is there. Like, we could create a really like dumb UX that's like a side panel, like whatever. Like, but no. Actually going that extra mile does make that experience as good as it possibly can be there. But yeah, now like look at some of the UX that we're able to build in Windsurf and it's just like, it's fun. The first time I saw, because now we can do Command in the terminal, like you don't have to search for a bash command. The first time I saw that, I just started smiling. And it's not like Cascade. It's not like an agentic system right in the lab. But I'm like, that is just a very, very cool experience. We literally couldn't do that in VS Code.

Swyx [00:49:59]: Yeah, I understand that. Yeah, I've implemented a 60-line bash command called please.

Swyx [00:50:08]: Yeah, so please, English, and then...

Varun [00:50:08]: You know, that's actually really cool because one of the things I think we believe in is actually I like products like autocomplete more than Command purely because I don't even want to open anything up. So that thing where I just can type and not have to press some button shortcuts to go in a different place, I actually like that too.

Swyx [00:50:25]: Yeah, and I actually adopted Warp, the terminal, initially for that because they gave that away for free. But now it's everywhere so I can turn off Warp and not give Sequoia my bash commands.

Swyx [00:50:37]: I'm with you.

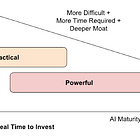

Alessio [00:50:40]: No, I use Warp. No, no, look, I use Warp. Okay, I don't know, I'm going to go on a rant. Hopefully somebody... This is like Warp product feedback. But they basically had this thing where you can do kind of like pound and then write in natural language, but then they also have the auto-infer of whether what you're typing is natural language, and those are different. When you do the pound, it only gives you a predetermined command. When you talk to it, it generates a flow. Okay. It's a bit confusing of a UX. But going back to your post, you had the three P's of AI UX. Yes. What were they again? Present, practical, powerful.

Swyx [00:51:15]: Actually, that was really good.

Alessio [00:51:17]: I liked it. And I think like in the beginning being present was enough. Maybe, you know, even when you launch, it's like, oh, you have AI, that's cool, other people don't have it. Do you think we're still in the practical, where the model doesn't even need to be that powerful, like just having a better experience is enough? Or do you think like really being able to do the whole... Because your point was like, you're powerful when you generate a lot of value for the customer, you're practical when you're basically like wrapping it in a nicer way. Yeah.

Anshul [00:51:47]: Where are we in the market? I think there's always going to be room for practical UX. Like, I mean, the Command in the terminal, that's a very practical UX, right? I do think with things like Cascade and these agentic systems, we are starting to get onto powerful. Because there's so many pieces from a UX perspective that make Cascade really good. It's just really like micro things that are all over the place. Like, we're streaming in, we're showing the changes, we're allowing you to jump and open diffs and see it, we can run background terminal commands, you can see what background processes are running. There's all these small UX things that together come to a really powerful and intuitive UX. I think we're starting to get there. It's definitely just the start and that's why we're so excited about where all this is going to go. I think we're starting to see the glimpses of it. I'm excited. It's going to be a whole new ballgame. Yeah.

Swyx [00:52:36]: Awesome. First of all it's just been really nice to work with you. I do work with a number of guest posters and you know not everyone makes it through to the end and nobody else has done it three times so kudos.

Anshul [00:52:49]: Remember our hat trick.

Swyx [00:52:52]: This one was more like the money one which you know it's funny because I think developers are like quite uninterested in money. Isn't it weird?

Anshul [00:53:01]: Yeah, I mean, I think like, I don't know if this is just the nature of our company. Like, you know, I think there's something you said, like there's all the San Francisco AI companies and everyone's hyping each other on the tech and everything, which is great, the tech's really important. We're here in Mountain View, beautiful office, and we just really care about actually driving value and making money, which is kind of a core part of the company.

Varun [00:53:20]: I think maybe the selfish way of saying that, or like a little more of the selfless way, is like, yeah, we can be kind of like this VC-funded company forever, but ultimately speaking, you know, if we actually want to transform the way software happens, we need this part of the business that's cash generative, that enables us to actually invest tremendously in the software. And that needs to be durable cash, not cash that churns the next year. And we want to set ourselves up to be a company that is durable and can actually solve these problems.

Swyx [00:53:49]: Yeah, yeah, excellent. So for people, obviously we're going to link it in the show notes, but for people who are listening to this for the first time, I had a lot of trouble naming this piece. So we originally called it, you had like, how to make money, something.

Anshul [00:54:02]: I forget. It was super, I apologize, I was super, I think it was like on a plane flight, so I apologize for that.

Swyx [00:54:12]: He had like three dollar signs in the title. I was like, I can't do that. So it's either building AI for the enterprise, and then I also said the worst, the most dangerous thing an AI startup can do is build for other AI startups, which I think both of you will co-sign. And I think basically the main thesis, which I really liked, was like, go slow to go fast. Like, if you actually build for security, compliance, personalization, usage analytics, latency budgets, and scale from the start, then you're going to pay that cost now, but eventually it's going to pay off in the long run. And this is the actual insight: you cannot do this later. Like, if you build the easy thing first as an MVP, it's like, just ship it with whatever's easy to do, and then you tack on the EnterpriseReady.io set of like 12 things that you have, you actually end up with a different product, or you end up worse off than if you had started from the beginning. So that I had never heard before.