You Are Not Too Old (To Pivot Into AI)

Everything important in AI happened in the last 5 years, and you can catch up

Translated into Chinese on InfoQ.

A developer friend recently said “If I was 20 now I’d drop everything and jump into AI.” But he’s spent over a decade building expertise, network, and reputation to be at the very top of his field. So he’s staying put for now.

Another, older, college friend is a high-flying exec at a now-publicly-listed tech startup. He’s good at what he does and has the perfect resume; the rest of his career could be easily extrapolated toward enviable positions. Yet he’s pivoting because, as he told me, “life is short” and he doesn’t want to end it wondering “what if?”

I’ve had many similar conversations with both technical and non-technical friends in recent days. As much as I’d like this newsletter to be about concrete technical developments and soaring SOTA advancements, I think it’s worth spending one issue on the fuzzy wuzzy topic of career pivots, because this is one topic on which I’m coincidentally uniquely qualified to offer commentary.

Pivoting in my thirties

I remember how scary my first career pivot felt, also at age 30. I was 6-7 years into the finance career I had wanted since I was 16, jet-setting around the world, grilling CEOs and helping to run a billion dollars at one of the top hedge funds in the world. Externally I was hot shit but I knew deep down it was unsatisfying and not my endgame. Making some endowments and pensions a bit richer paled in comparison to making something from nothing. I decided to pivot from finance to software engineering (and devrel). The rest is history.

6-7 years later, I am again pivoting my career. I think a SWE → AI pivot is almost as much of a pivot as going from Finance → SWE: the two fields look superficially similar, yet the move requires a tremendous amount of new knowledge and practical experience to get reasonably productive. My pivot strategy follows the same playbook as last time: study nights and weekends as much as possible for 6 months to get confidence that this is a lasting interest[1] where I can make meaningful progress, then cut ties/burn bridges/go all in and learn it in public[2].

But that’s just what works for me; your situation will be different. I trust that you can figure out the how if you wished; I write for the people who are looking to get enough confidence about their why that they actually decide to take the leap.

I think there’s a lot of internalized ageism and sunk cost fallacy in tech career decision-making. So here’s a quick list of reasons why you are not too old to pivot.

Reasons you should still get into AI

Broad Potential of AI/Rate of Growth

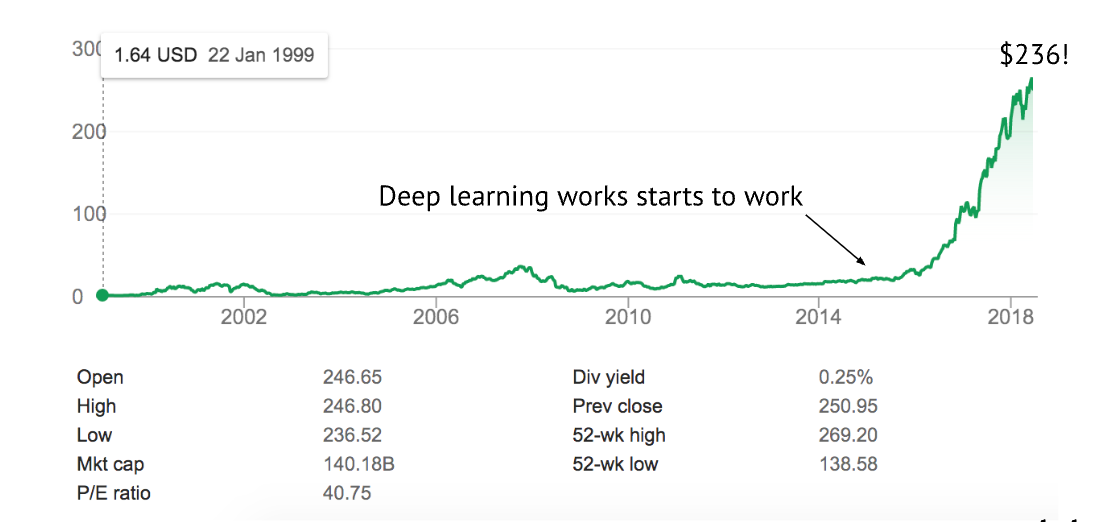

Jeff Bezos famously quit finance to start Amazon at age 30.

He did this because Internet usage was growing 2,300% a year in 1994.

Rollouts of general purpose technologies[3] span decades.

Imagine being a “latecomer” to tech in the year 2000 or 2010 and concluding it was “too late” for you to get into Internet businesses.

ChatGPT usage at work has grown 1,000% in the 3 months since January.[4]

Ramp up time is shorter than you think

The traditional non-PhD path to ML is taking some version of the Andrew Ng Coursera course for 3 months and then realizing you’ll still need years of self study and practical experience to do anything interesting.

But we are getting better at streamlining paths to cutting edge generative AI[5].

Jeremy Howard’s fast.ai course started by getting developers into AI in 7 weeks in 2016. In 2022 he’s already taking people up to reimplementing Stable Diffusion in ten 90-minute lessons. Suhail Doshi took this course in June 2022 and launched Playground.ai by November.

This is in part driven by the Transformer architecture, which was introduced in 2017 and has since taken over almost every field of AI, offering a strong, flexible baseline such that knowledge of prior architectures becomes optional. So there aren’t decades of research to catch up on; it’s just the last 5 years.

Some readers have asked about the mathematics involved. Whether or not AI is “just matrix multiplication” is debated, and you could learn the math in one semester of college-level linear algebra and calculus if you wished, but my main answer is that you don’t have to; modern frameworks like PyTorch handle any backpropagation and matrix manipulations that you need.
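If you are curious what the “matrix multiplication plus backpropagation” part looks like once the framework is stripped away, here is a minimal sketch in plain Python. It is my own toy illustration, not taken from any course or library: the function names (`matvec`, `transpose`, `fit_linear`) and the toy data are hypothetical, and it fits a straight line rather than a neural network. The gradient is derived by hand here, which is exactly the step a framework would automate for you.

```python
def matvec(matrix, vector):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def transpose(matrix):
    """Swap the rows and columns of a matrix."""
    return [list(column) for column in zip(*matrix)]


def fit_linear(inputs, targets, learning_rate=0.05, steps=2000):
    """Fit a linear model by gradient descent on mean squared error.

    Hypothetical helper for illustration only.
    """
    n = len(inputs)
    params = [0.0] * len(inputs[0])
    inputs_t = transpose(inputs)
    for _ in range(steps):
        # Forward pass: predictions are one matrix-vector product.
        predictions = matvec(inputs, params)
        errors = [p - t for p, t in zip(predictions, targets)]
        # Backward pass: hand-derived gradient of the mean squared error,
        # which is another matrix-vector product (2/n * X^T * errors).
        gradient = [2.0 * g / n for g in matvec(inputs_t, errors)]
        # Update step: move the parameters against the gradient.
        params = [p - learning_rate * g for p, g in zip(params, gradient)]
    return params


# Toy data generated from y = 3x + 1. Each input row is [x, 1.0],
# so the second parameter plays the role of the bias term.
xs = [0.0, 1.0, 2.0, 3.0]
inputs = [[x, 1.0] for x in xs]
targets = [3.0 * x + 1.0 for x in xs]

weight, bias = fit_linear(inputs, targets)
print(f"weight={weight:.4f}, bias={bias:.4f}")
```

On this toy data the loop recovers a weight close to 3 and a bias close to 1. The whole thing is two matrix-vector products and a subtraction, repeated; real models stack many more layers, but the shape of the loop is the same.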

Of course, taking shortcuts does not make you equivalent to a traditional PhD capable of pushing the state of the art. But even looking at top researcher careers gives you an idea of how much time it could take you to get to the very top. Yi Tay contributed to/led many of the major recent LLM advancements at Google, but you may be surprised to learn he just has about 3.3 years of experience since his PhD. Ashish Vaswani was 3 years out of his PhD when he published the Transformer paper, and Alec Radford was 2 years out of undergrad when he published the GPT and GPT-2 papers at OpenAI.

Career trajectories like this do not happen in more mature fields like physics, mathematics, and medicine, because their “foom” years were centuries ago. AI’s “foom” is clearly happening now.

All of which is to say: this is still an incredibly young field, and 20 years from now nobody is likely to care how “late” you felt.

Many areas of contribution apart from becoming a professional ML Researcher

Prompt and Capabilities Research: Riley Goodside’s career blew up in 2022, going from being a data scientist at Grindr to becoming the world’s first Staff Prompt Engineer by tweeting GPT-3 tricks until finding and popularizing “prompt injection” as a major LLM security concern. Many others have since caught on that finding interesting use cases for GPT-3 and GPT-4 does well on social media, which is great and a layperson corollary of how academics do formal capabilities research.

Software engineering: Whisper.cpp and LLaMA.cpp have recently opened many people’s eyes to the future of running large models on-device, but I was surprised to listen to Georgi Gerganov’s interview on the Changelog and learn that he was a self-described “non-AI-believer” in September 2022 and merely ported Whisper to C++ for fun. LLaMA.cpp itself has taken off faster than Stable Diffusion, which was already one of the fastest growing open source projects of all time. Despite not doing the model training, Georgi’s software expertise has made these foundation models a lot more accessible. Harrison Chase’s LangChain has captured a ton of mindshare by building the first developer-friendly framework for prompt engineering, blending both prompt and software improvements to pretrained LLMs. A raft of LLM tooling, from Guardrails to Nat.dev, has also helped bridge the gap for these models from paper to production. ChatGPT itself is largely a UX innovation delivered with the GPT 3.5 family of models, which is good news for frontend/UI developers.

Productization: Speaking of Stable Diffusion, Emad Mostaque was a hedge fund manager right up to 2019, who didn’t seem to have any prior AI expertise beyond working on “literature review of autism and biomolecular pathway analysis of neurotransmitters” for his son. But his participation in the EleutherAI community in 2020 led him to realize that something like Stable Diffusion was possible, find Patrick and Robin of the CompVis group at Heidelberg University, and put up the ~$600k it took to train and deliver the (second) most important AI release of 2022. Nobody wants to cross examine who did what but it makes sense that a former hedge fund manager would add a lot of value by spotting opportunities and applying financial (and organizational) leverage to ideas whose time had come. More broadly, Nat Friedman has been vocal about the capability overhang from years of research not being implemented in enough startups, and it seems that founders willing to jump on the train early, like Dave Rogenmoser taking Jasper from 0 to $75m ARR in 2 years, will reap disproportionate rewards.

More broadly, the way that both incumbents and startups across every vertical and market segment are embracing AI is showing us that the future is “AI-infused everything” - therefore understanding foundation models will more likely be a means to an end (making use of them) rather than an end in itself (training them, or philosophizing about safety and sentience). Perhaps it might be better to think of yourself and your potential future direction less as “pivoting INTO AI” and more as “learning how to make use of it” in domains you’re already interested or proficient in.

My final age-related appeal is a generic one: challenging yourself is good for your brain. Neuroplasticity is commonly believed to stop after 25, but this is debated. What is much more agreed upon is that continuous learning helps build cognitive reserve, which helps stave off nasty neurodegenerative diseases like dementia and Alzheimer’s. This 72 year old congressman is doing it; what’s your excuse?

Are you working on anything nearly as challenging as understanding how AI works, and figuring out what you can do with it?

How I’m learning AI

There is a method to this madness; this is a quick process dump, but the actual structure of how I recommend learning will be fleshed out in a future post. Subscribe!

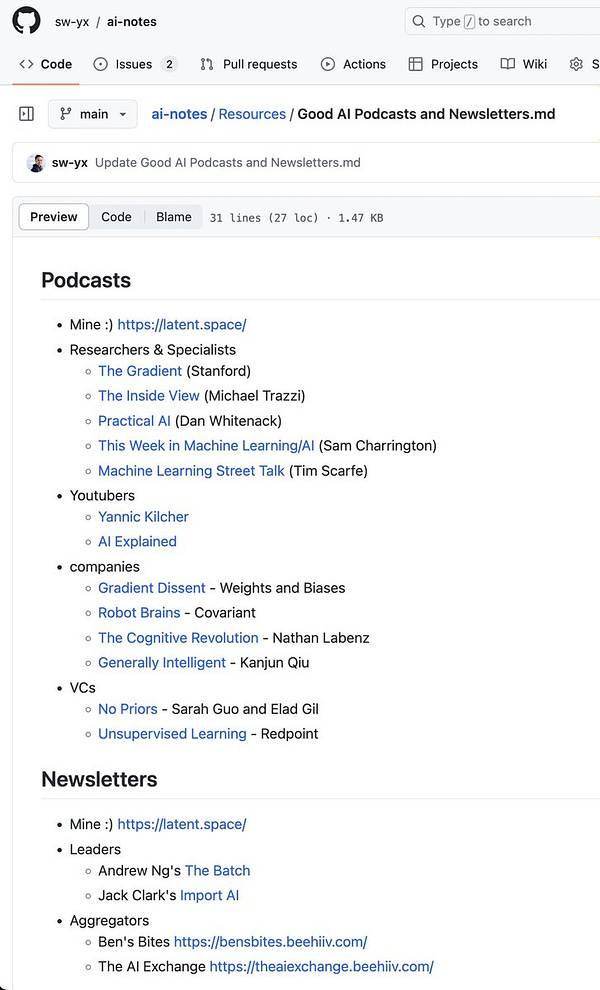

I’ve done the fast.ai course content, and I am also following my curated Twitter list and adding notes to my public GitHub AI repo and to the Latent Space Discord. Important new papers get read the week they come out, and I try to run through or read the code of highly upvoted projects and products. We’re also about to release “Fundamentals 101” episodes on the podcast where we cover AI basics, which has forced me to read the papers and understand the history of some of the things we take for granted today[6].

I’ve also recently written down the podcasts and newsletters I’m using to keep up - share and add suggestions if you have them!

In both career pivots I am not starting from total scratch - I had exposure to programming BASIC at age 13, and at 26 had written ultra basic NLP code to parse broker pricing while working as an options trader - I wish I could show you but those are lost to time.

And this AI wave is of that magnitude. Don’t take my word for it, take Bill Gates’ word when he says the only other advance as big in his memory is the creation of the Graphical User Interface.

Winter is coming. At some point this AI summer shall pass and AI winter will be on us again. The magnitude of this wave is important for understanding that it will probably survive any winter, just like the Internet industry only briefly paused after the 2001 recession.

Obligatory wince at using the term when we agree it is overhyped… but it’s stuck so far

Again, doing this in public is important because having my skin on the line makes me try to be as right as possible, and to feel it extra keenly when I inevitably get something wrong in public.

The older you are, the more cynical you get. I myself had a head start in both cynicism and being burned out; I feel like a 60 year old whenever I face any contemporary trash that's supposed to excite me.

I even quit software development - or so I hoped - in 2019. (Hell, I even had a dance with ML 3 years ago and thought I quit it for good, around the announcement of GPT-3, thinking that it moved beyond my level and I will only be a spectator from now on.)

Yet here I am, in 2023, being excited by AI. It's not overhyped.

Thanks for the resources!

First, just wanna thank the immense value you put out there. This post is amazing!

I'm 24 and pretty happy with the position I'm in right now (Senior Full Stack, leading a team and creating a GPT integration in our app). But your post makes me wonder if I should actually study AI core and foundations. GPT makes it so easy to jump into AI that it kinda makes me feel I don't need to, tbh.

The phrase that made me start studying how to code (at 14) was "The programmers of tomorrow are the wizards of the future". Reading your post makes me think that not learning AI will make me feel less of a wizard 🧙‍♂️ lol

Thanks!