Inference, Fast and Slow

When System 1/System 2 analogies are not enough: The 6 types of LLM inference

We are experimenting with a “screenshot essay” series with visuals we find helpful for putting things in context, for Latent Space supporters. Let us know your feedback!

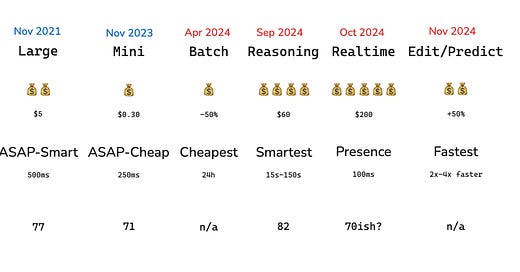

o1 made it consensus that thoughtful, slower “System 2” models are a great complement to the standard fastest-time-to-first-token “System 1” models. However, there are more than just “fast” and “slow” needs arising in AI engineered systems, and we can benefit from a useful model of tradeoffs in cost, speed, and intelligence.

With the release of OpenAI’s new Predicted Output API, which allows you to submit draft responses and then leverages prompt lookup/lookahead speculative decoding for a 2x-5.8x faster response at ~50% more token costs, the year 2024 has now produced have a full map of inference paradigms for the discerning AI engineer:

Plotting these use cases on a rough indicative price-latency tradeoff curve, we get a nice kinked chart:

Keep reading with a 7-day free trial

Subscribe to Latent.Space to keep reading this post and get 7 days of free access to the full post archives.