Latent Space is heating up! Our paper club ran into >99 person Discord limits, oops.

We are also introducing 2 new online meetups: LLM Paper Club Asia for Asia timezone (led by Ivan), and AI in Action: hands-on application of AI (led by KBall).

To be notified of all upcoming Latent Space events, subscribe to our new Luma calendar (sign up for individual events, or hit the RSS icon to sync all events to calendar).

In the halcyon open research days of 2022 BC (Before-ChatGPT), DeepMind was the first to create a SOTA multimodal model by taking a pre-existing LLM (Chinchilla 80B - now dead?) and pre-existing vision encoder (CLIP) and training a “glue” adapter layer, inspiring a generation of stunningly cheap and effective multimodal models including LLaVA (one of the Best Papers of NeurIPS 2023), BakLLaVA and FireLLaVA.

However (for reasons we discuss in today’s conversation), DeepMind’s Flamingo model was never open sourced. Based on the excellent paper, LAION stepped up to create OpenFlamingo, but it never scaled beyond 9B. Simultaneously, the M4 (audio + video + image + text multimodality) research team at HuggingFace announced an independent effort to reproduce Flamingo up to the full 80B scale:

The effort started in March, and was released in August 2023.

We happened to visit Paris last year, and visited HuggingFace HQ to learn all about HuggingFace’s research efforts, and cover all the ground knowledge LLM people need to become (what Chip Huyen has termed) “LMM” people. In other words:

What is IDEFICS?

IDEFICS is an Open Access Visual Language Model, available in 9B and 80B model sizes. As an attempt to re-create an open-access version of Flamingo, it seems to track very well on a range of multimodal benchmarks (which we discuss in the pod):

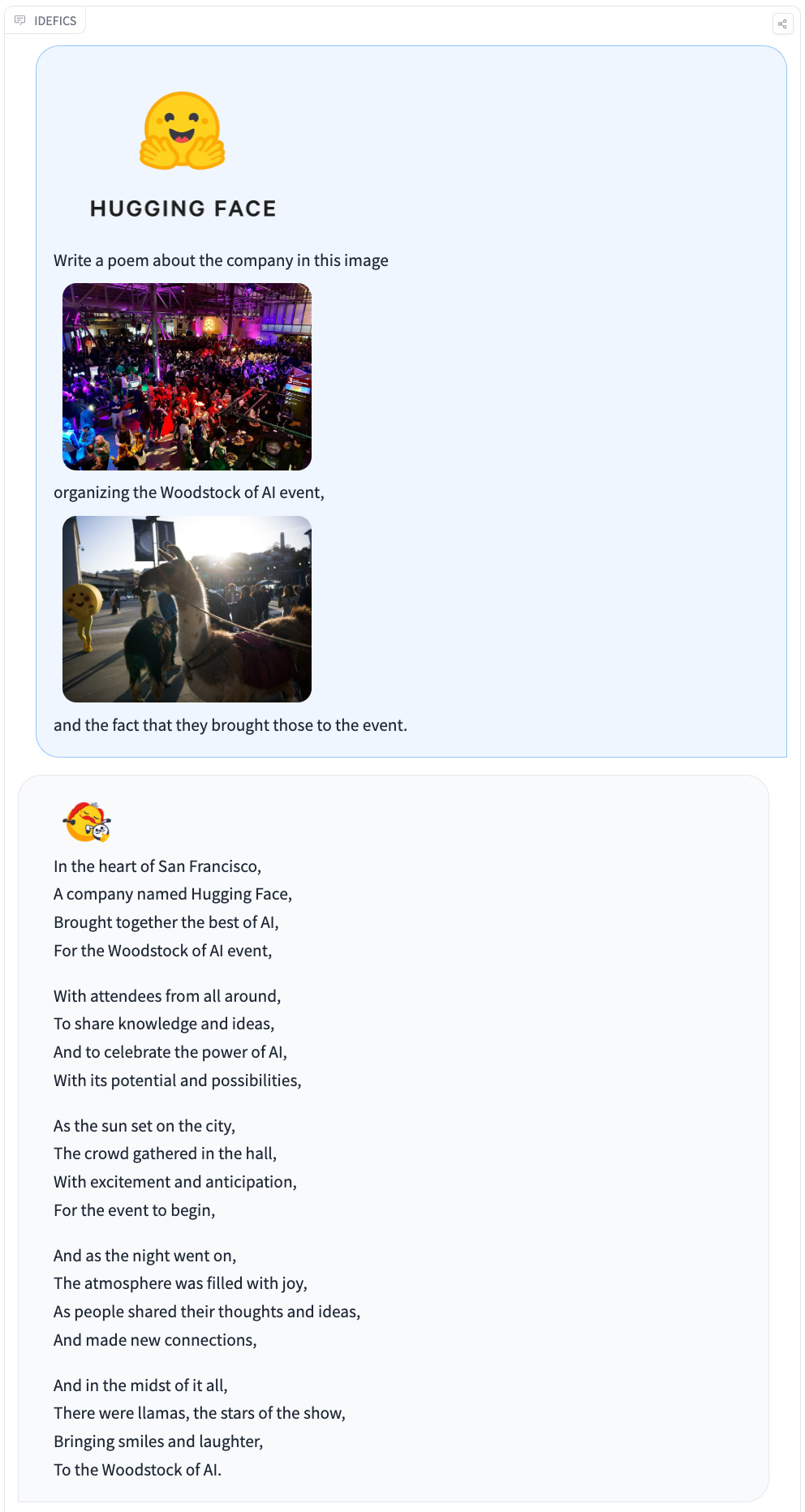

You can see the reasoning abilities of the models to take a combination of interleaved images + text in a way that allows users to either describe images, ask questions about the images, or extend/combine the images into different artworks (e.g. poetry).

📷 From IDEFICS’s model card and blog post

The above demo screenshots are actually fine-tuned instruct versions of IDEFICS — which are again in 9B and 80B versions.

IDEFICS was built by connecting two unimodal models together to provide the multi-modality you see showcased above.

Llama v1 for language (specifically huggyllama/llama-65b) - the best available open model at the time, to be swapped for Mistral in the next version of IDEFICS

A CLIP model for vision (specifically laion/CLIP-ViT-H-14-laion2B-s32B-b79K - after a brief exploration of EVA-CLIP, which we discuss on the pod)

OBELICS: a new type of Multimodal Dataset

IDEFICS’ training data used the usual suspect datasets, but to get to par with Flamingo they needed to create a new data set.

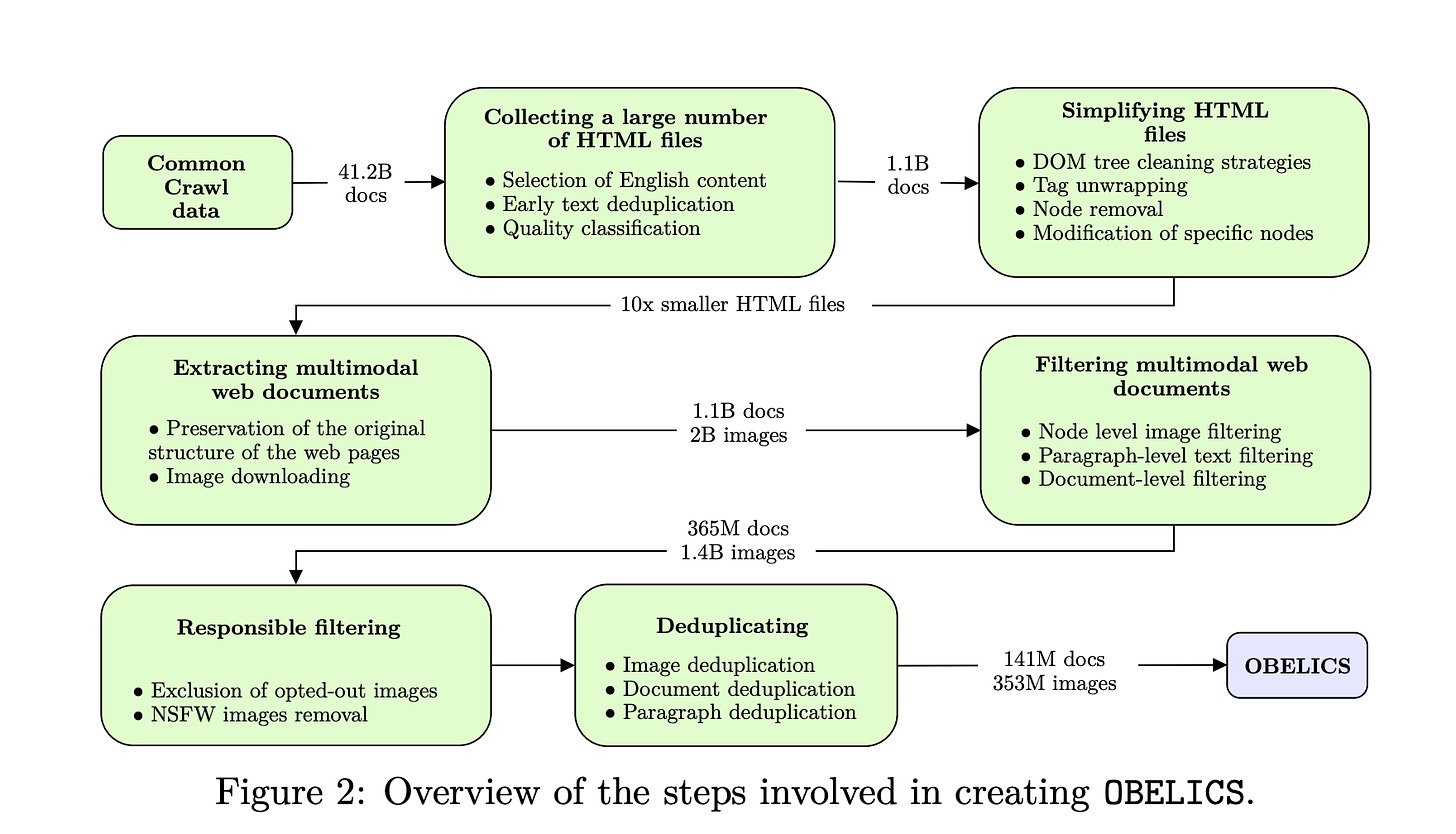

Enter OBELICS: “An Open Web-Scale Filtered Dataset of Interleaved Image-Text Documents”:

115B text tokens

141M English documents

353M images

These bullets are carefully curated and filtered by going through Common Crawl dumps between FEB 2020 - FEB 2023. We discuss the 2 months of mindnumbing, unglamorous work creating this pipeline:

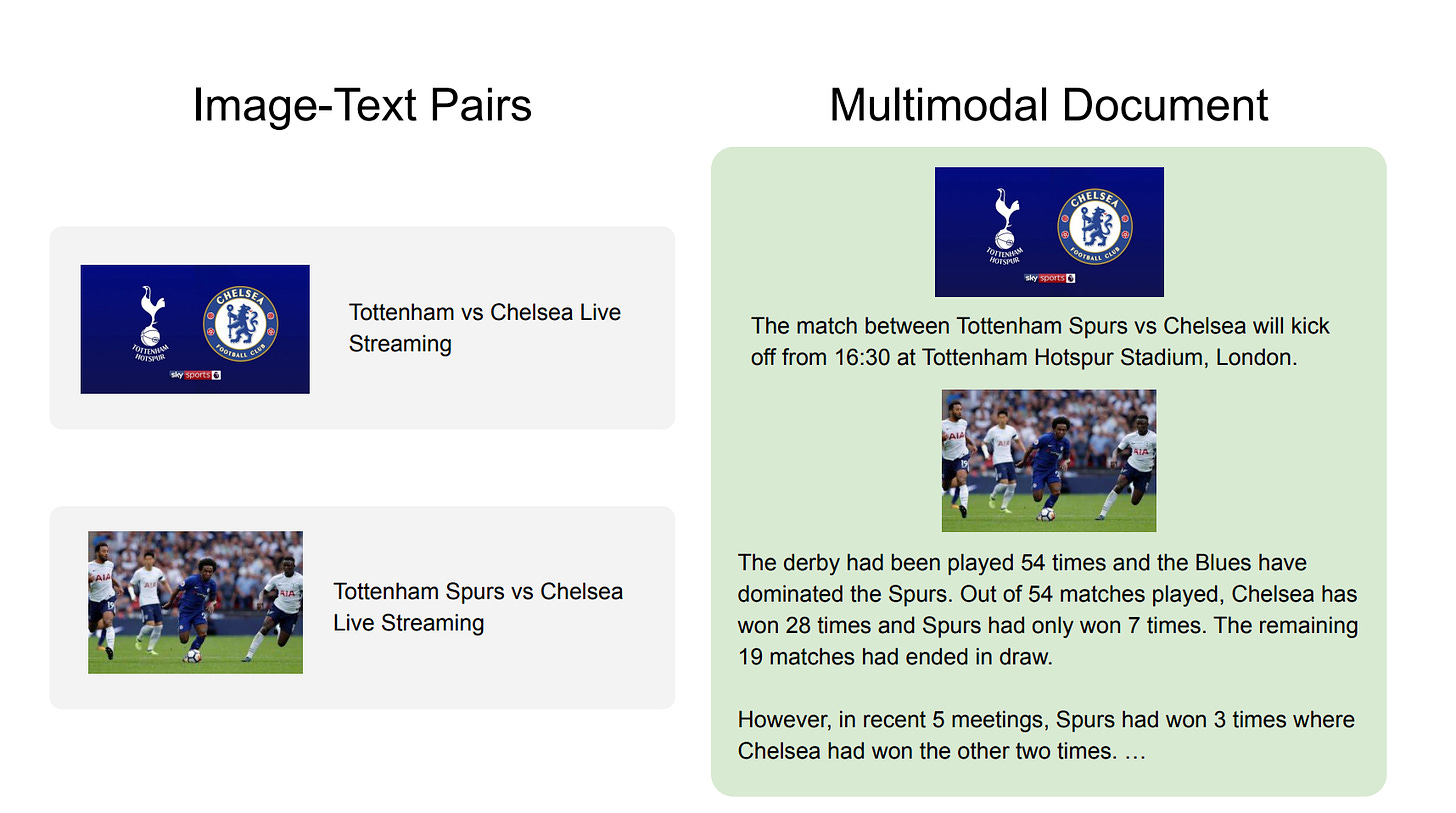

There’s a lot of mentions of ‘multi-modal' web documents’ which deserves some explanation. We’ll show you instead of tell you:

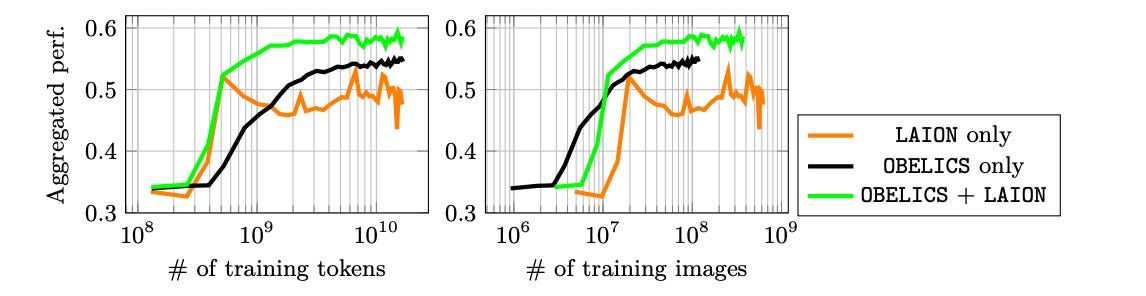

You can see from this graph that OBELICS ends up outperforming the other image-text pairs dataset (LAION in this case) when stacked head-to-head.

You can view a subset of OBELICS and perform visualizations on them here:

2024 Update: WebSight et al

Most of this interview was recorded on Halloween 2023 at HuggingFace’s headquarters in Paris:

In anticipation of an IDEFICS v2 release. However, several roadblocks emerged, including a notable scandal around CSAM in LAION-5B, which affected all models using that dataset. The M4 team have adopted a strategy of shipping smaller advancements in 2024, and the first ship of the year is WebSight, a dataset of 823,000 HTML/CSS codes representing synthetically generated English websites, each accompanied by a corresponding screenshot (rendered with Playwright). This is intended for tasks like screenshot-to-code workflows like Vercel’s V0 or TLDraw, and will be part of the dataset for IDEFICS-2.

As noted in our Best Papers recap, synthetic data is emerging as one of the top themes of 2024, and the IDEFICS/OBELICS team have wasted no time enabling themselves with it.

Timestamps

[0:00:00] Intro

[0:00:00] Hugo, Leo’s path into multimodality

[0:09:16] From CLIP to Flamingo

[0:12:54] Benchmarks and Evals

[0:16:54] OBELICS dataset

[0:34:47] Together Redpajama v2

[0:37:12] GPT4 Vision

[0:38:44] IDEFICS model

[0:40:57] Query-Key Layernorm for training

[0:46:40] Choosing smaller vision encoders - EVA-CLIP vs SIG-GLIP

[0:49:02] IDEFICS v2

[0:52:39] Multimodal Hallucination

[0:59:12] Why Open Source Multimodality

[1:05:29] Naming: M4, OBELICS, IDEFICS

[1:08:56] 2024 Update from Leo

Show Notes

Introducing IDEFICS: An Open Reproduction of State-of-the-Art Visual Language Model

OBELICS: An Open Web-Scale Filtered Dataset of Interleaved Image-Text Documents

Papers cited:

BLOOM: A 176B-Parameter Open-Access Multilingual Language Model

Barlow Twins: Self-Supervised Learning via Redundancy Reduction

CLIP paper: Learning Transferable Visual Models From Natural Language Supervision

Vision Transformers paper: An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale

Flamingo paper: a Visual Language Model for Few-Shot Learning

VQAV2 paper: Making the V in VQA Matter: Elevating the Role of Image Understanding in Visual Question Answering

OK-VQA: A Visual Question Answering Benchmark Requiring External Knowledge (https://okvqa.allenai.org/)

Qwen-VL: A Versatile Vision-Language Model for Understanding, Localization, Text Reading, and Beyond

Sig-GLIP paper: Sigmoid Loss for Language Image Pre-Training

Nougat: Neural Optical Understanding for Academic Documents

MMC4 (Multimodal C4): An Open, Billion-scale Corpus of Images Interleaved With Text

Dall-E 3 paper: Improving Image Generation with Better Captions

GPT-4V(ision) system card from OpenAI

Query-Key Layernorm trick: paper (Scaling Vision Transformers to 22 Billion Parameters), tweet

EVA-CLIP: Improved Training Techniques for CLIP at Scale

“We intially explored using a significantly bigger vision encoder (the biggest in open-access at that time) with EVA-CLIP. However, we ran into training instabilities very quickly. To lower the risks associated to the change of vision encoder, we decided to continue with laion/CLIP-ViT-H-14-laion2B-s32B-b79K which we have been using until that point. We will leave that swap for future iterations and will also consider using higher resolution images.”

Datasets

Together’s RedPajama-Data-v2: An open dataset with 30 trillion tokens for training large language models

HuggingFace timm: library containing SOTA computer vision models, layers, utilities, optimizers, schedulers, data-loaders, augmentations, and training/evaluation scripts. It comes packaged with >700 pretrained models, and is designed to be flexible and easy to use.

Logan Kilpatrick declaring 2024 the year of Multimodal AI at AI Engineer Summit

Share this post