Why GPT Wrappers Are Good, Actually

Introducing the Smiling Curve of AI, and why *both* model labs and their wrappers are becoming hilariously rich

We are experimenting with shorter, more frequent essays for Latent Space supporters. They give you a more real-time peek into our thinking and work.

Let us know your feedback!

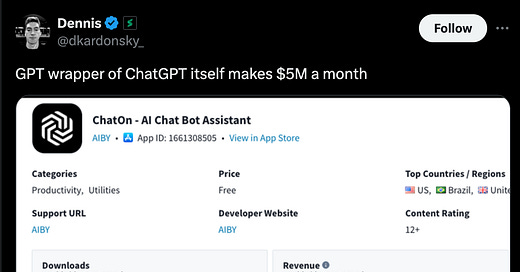

Let’s talk about this phenomenon:

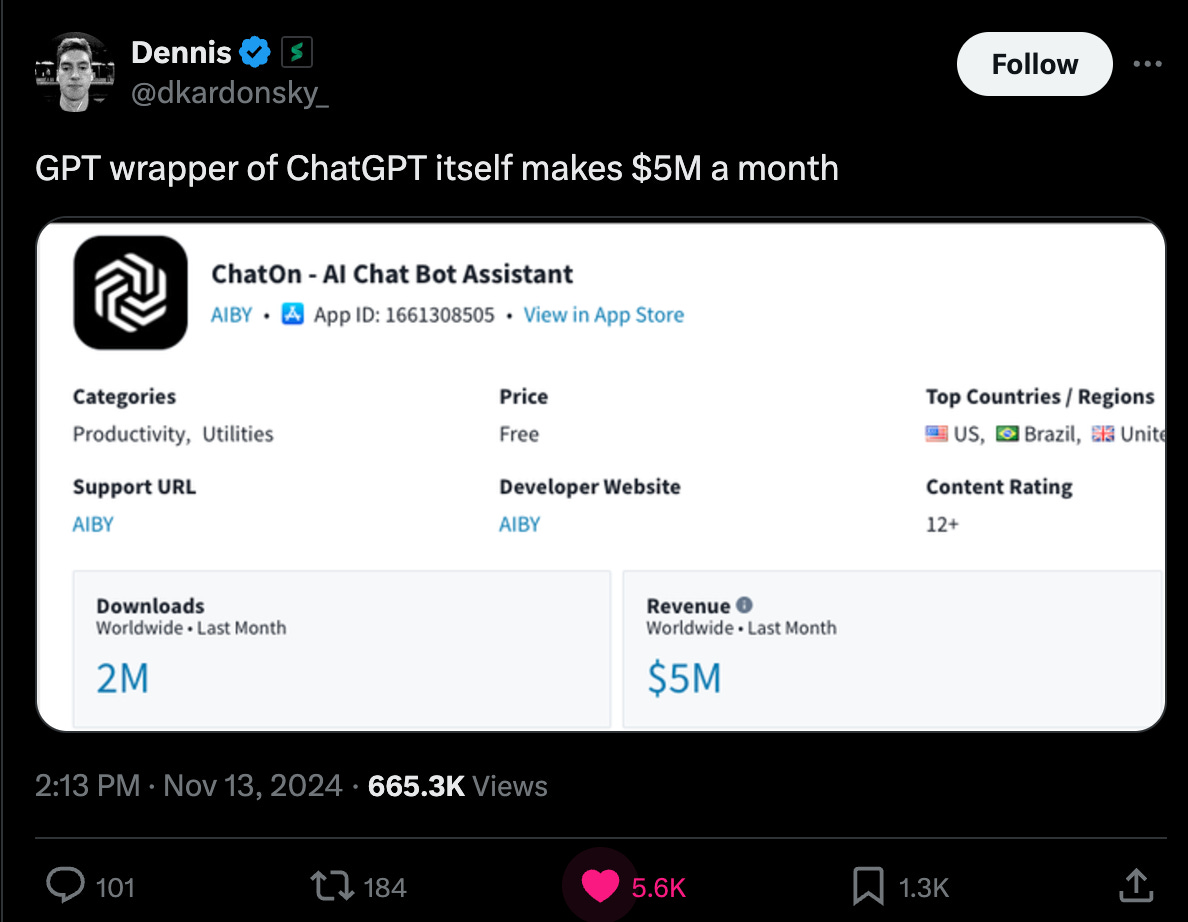

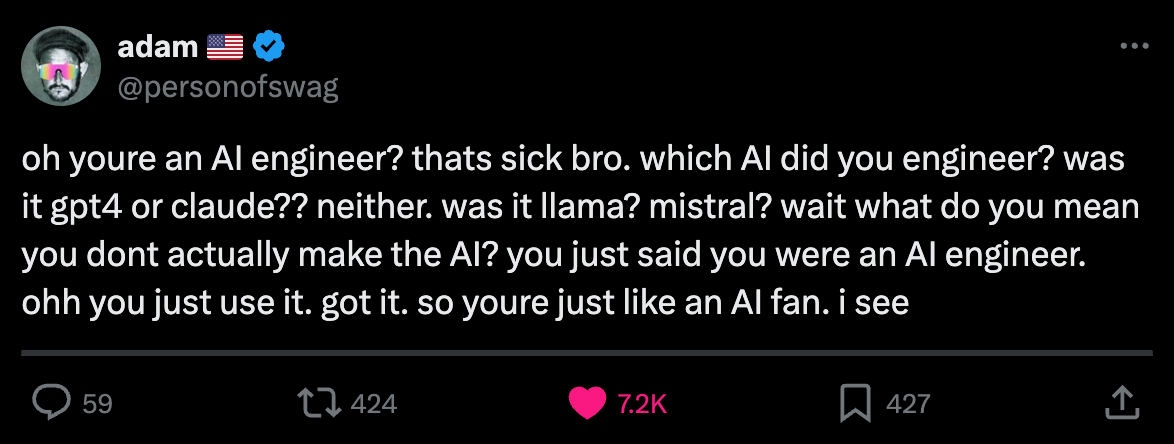

When I wrote the AI Engineer essay in 2023, I was writing partly to counter the prevailing narrative of the rise of the “prompt engineer” title. However, I was also countering a narrative on the other side of the technical spectrum: the idea that you had to train models or otherwise “do serious ML” to be of any worth in the new AI economy. This attitude persists to this day, in various forms.

In 2023, people constantly derided GPT wrapper companies (“where is the moat?”), while startups launched by raising $30-$100m in funding just to hand it over to NVIDIA for GPUs to train their own proprietary models. The final nail in the GPT Wrapper coffin seemed to come at DevDay, when OpenAI seemingly let anyone create their own GPT Wrappers inside of ChatGPT.

And yet, here we are in 2024:

- the model training startups Character, Adept, Inflection, and Stability have been execuhired, and custom GPTs are generally regarded as having failed to reach PMF,

- while model wrappers like Perplexity, Harvey, Cognition, and Cursor are valued at >$2B and going from strength to strength,

- Codeium (now $1.25B, upcoming episode) now considers the strategy of training their own first-party models a mistake, and

- Bolt.new (upcoming episode) went from $0 to >$4m ARR in 1 month wrapping Claude Sonnet.

If you have really understood the AI Engineer thesis, you will have internalized the value of fashioning raw LLM capability into polished AI products. In particular, we have been champions of AI UX, AI Devtools, and the emerging LLM OS stack.

But there’s a handy visual that I presented at AGI House recently that further explains why “GPT Wrappers” are actually doing well:
