Every Google vs OpenAI Argument, Dissected

Let's talk about the AI elephant in the room. Bonus: Meditations on Moloch

The dominoes continue to fall from OpenAI’s launch of ChatGPT 2 months ago:

“Google may be only a year or two away from total disruption.” - Paul Buchheit, creator of Gmail

“OpenAI engineer calculates the cost to build a Google killer and comes to $50m [to build an AI clone of Google].” (tweet; this is nothing compared to MSFT’s $100b in cash)

“Microsoft is planning to use ChatGPT to power Bing, and plans to launch it in a couple of months.” (The Information)

“Microsoft will close a $10 billion investment into OpenAI at a valuation of $29 billion” (Fortune) (This is ~2x its 2021 $14B valuation, and catapults OpenAI to the #6 most valuable startup in the US)

“If Microsoft actually succeeds in driving adoption of Bing using AI to steal material share from Google then there’s no question Satya becomes the greatest CEO of all time” (tweet)

“Microsoft, and particularly Azure, don’t get nearly enough credit for the stuff OpenAI launches… they have built by far the best AI infra out there.” - Sam Altman

There’s a well-known phenomenon in tech press cycles called Silicon Valley Time, and OpenAI speedran 2:00 to 4:00 SVT with this crowning glory from the NYT:

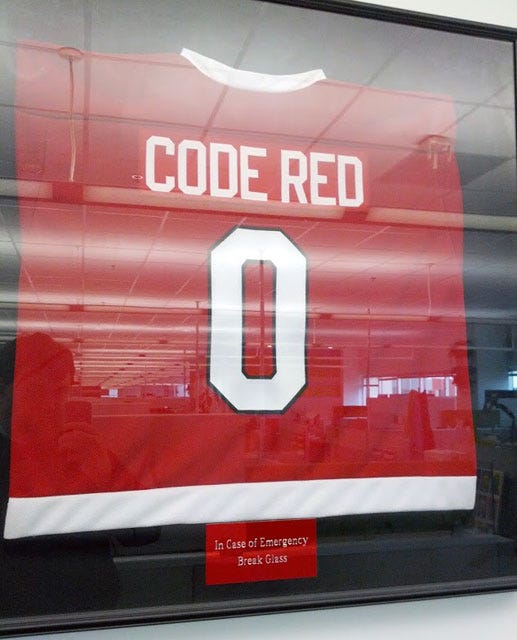

The “Code Red” is probably a reference to one of the greatest movie monologues of all time[1], but is a very real Google practice as well. The term last came up in 2020 in a Justice Department antitrust suit, where losing default search engine status would have been a “Code Red” for Google.

There’s even a commemorative plaque! (not kidding)

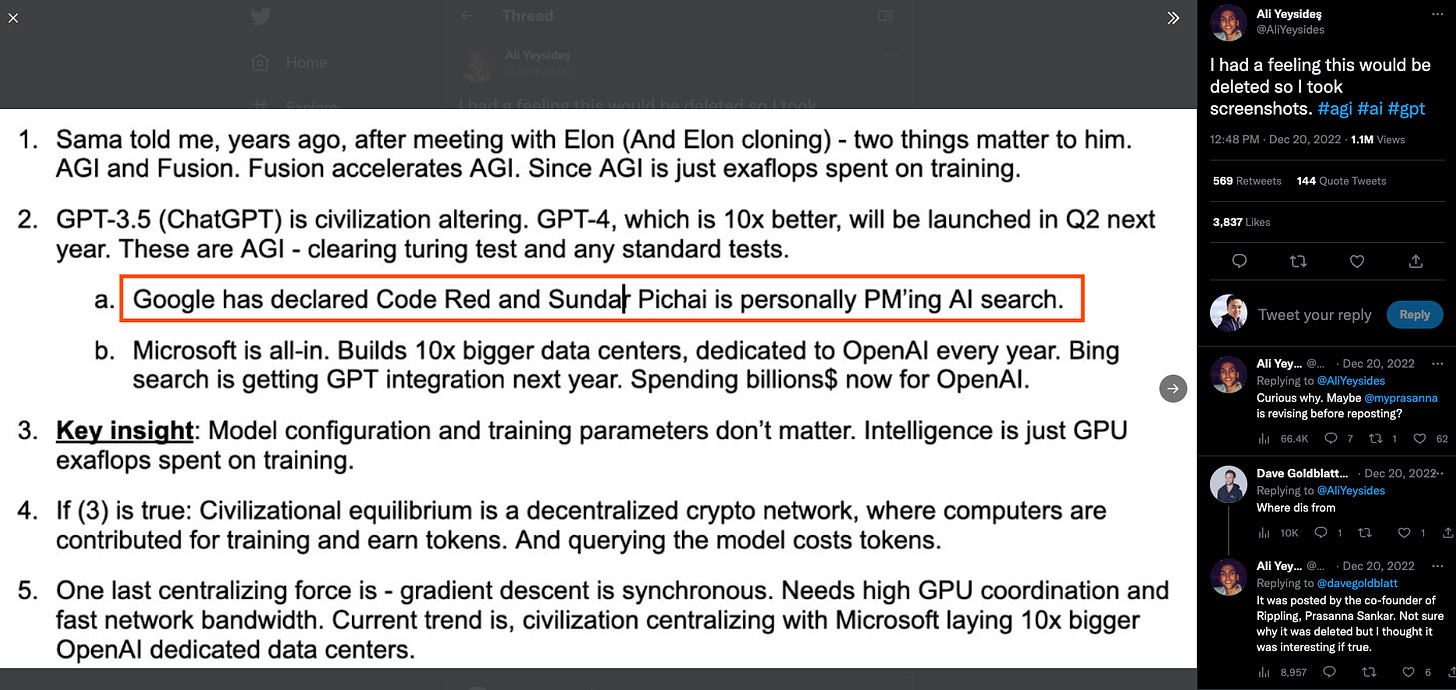

The NYT article cites unnamed “Google management” as ordering the Code Red, but there is some evidence that none other than Sundar Pichai, Alphabet CEO, called it. The biggest smoking gun came from this (now deleted) tweet from Rippling co-founder Prasanna Sankar:

The tweet comes across as particularly credible because it pre-dated the NYT story that broke the news. (There’s also some interesting politics here if Sundar is personally involved and not his SVP of both Google Search and Google Assistant, who as of 4 months ago was still “not so sure about” the potential of chat…)

Should Sundar have ordered the Code Red?

Kremlinology aside, the Google vs AI debate has been brewing nonstop since the launch of ChatGPT. We discussed this on my recent appearance on the (wonderful) Changelog podcast, but it deserves a fuller treatment here.

So let’s play a game: you are CEO of Alphabet, and ChatGPT has just come on the scene. Do you order the “Code Red”? Let’s think step by step:

Why the “Code Red” was unwarranted:

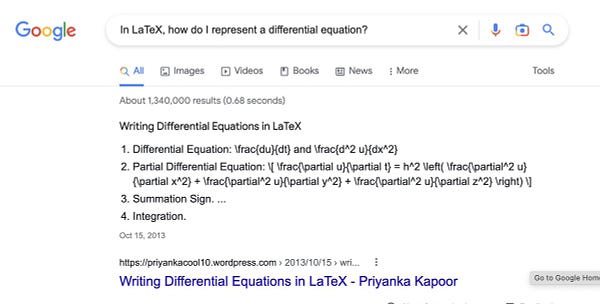

Google has more research expertise (Transformers, PaLM, LaMDA, Chinchilla)

Google has more operational AI experience at scale (BERT, MUM)

Google has more hardware expertise than Microsoft (TPUs)

Google has more data than Microsoft + OpenAI (Googlebot vs Bingbot)

Google has 4 billion more users (who already know how to prompt Google)

Chat isn’t Search (AI hallucinates and is unreliable, 10x cost/latency, uncertain UX)

Panicking Google employees is extremely distracting (and a sign of weakness)

AI is a feature, not a product (like Google+, it’s hard to win with a reactive clone)

Why ChatGPT was worth a “Code Red”:

Chat eliminates links (and the ads-based search business model)

AI generated spam just got 100x easier to make (and it’s harder to tell)

“Google Search is already Dying” popular sentiment (and Google knows it)

Any nonzero threat to Google dominance is a Code Red (Total war)

Google does not have the permission to innovate (Innovator’s Dilemma)

Opportunity: AI is a new type of platform and new type of computer. (LFG!)

I’ve written this so you can use the above as a simple guide to decide where you land, but I will elaborate below.

Why the “Code Red” was unwarranted

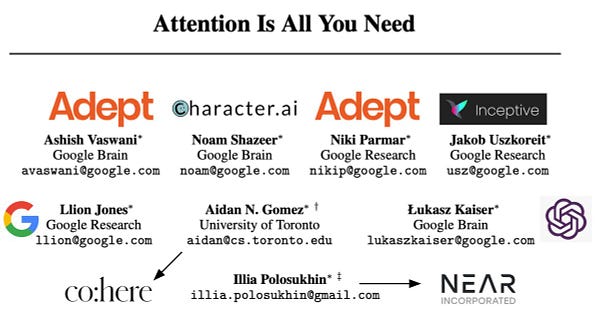

Research expertise. Google has unquestionable AI expertise[2]. Peter Norvig, author of the top college AI textbook, was Director of Research and Search Quality in the 2000s. Google Brain was founded by Jeff Dean and Andrew Ng in 2011. Geoff Hinton led the AlexNet team that revived deep learning in 2012. 6 of the 8 authors of the 2017 Attention is All You Need paper that launched the latest transformers-driven revolution in large language models had Google email addresses[3]. Google's expertise isn't fading either; in 2022 both LaMDA (the chatbot that convinced a Google engineer it was sentient) and PaLM (the 540b parameter LLM that beats GPT3 and average humans on a 58-task benchmark) came out of Google, while the Chinchilla paper (one of the most important AI papers of 2022) came out of DeepMind, a prescient 2014 AI acquisition by Larry Page. OpenAI certainly has its own experts (many of whom are ex-Google) but the research bench does not seem as deep.

Operational experience. In theory, there is no difference between theory and practice, but in practice, there is. Google has run AI models at scale for over a decade - following Hummingbird and RankBrain, BERT has powered search and email since 2018, and was replaced by MUM (using the T5 model) in 2021. No one else has run AI models at this scale, least of all OpenAI.

Hardware. To paraphrase Alan Kay[4], people serious enough about AI should make their own hardware. Google introduced custom Tensor Processing Units in 2015, which are now on their 6th generation, while Microsoft and OpenAI are likely relying on commodity Nvidia A100s. Being able to evolve hardware in line with software in the same company is an advantage, though of course it is not a guarantee of superior results[5]. Microsoft probably has some research ongoing, and there are real AI compute startups like Cerebras and Graphcore that Microsoft will probably buy (you heard it here first!), but Microsoft is still at least 5 years behind Google in the hardware game.

Data. The Chinchilla paper confirmed intuitions that LLM progress is largely data-constrained. It’s easy to say, like I did, that Google has access to vast corpora of data nobody else has, but it is unclear if Google will ever admit or deny mining your email for data despite it being within its terms of service. It’s much fairer game to transcribe YouTube, or mine public Google Reviews comments, or the over 40 million titles in Google Books. Every Google search corresponding with a click that ends a Journey is golden data for RLHF. But even without all that, Google’s index of the web is estimated to be at least 8 times larger than Bing’s, not least because of the incumbent effect of SEO practitioners routinely submitting their sites to be indexed by Google. In other words, if Microsoft’s new Google Killer is really a reskinned Bing, which may not have what you want in its corpus, no amount of prompt engineering can produce answers it doesn’t know.

Users. It’s clear that Google has an inherent distribution advantage. More than 2 million people have tried ChatGPT, but that leaves 7.8 billion who have not. Google has 4.3 billion users, and Bing claims 1.1 billion. Outside of our tech bubble, most people have not heard of OpenAI or ChatGPT, and Google will be able to reach them sooner than Microsoft realistically will. The Google brand is synonymous with search, and billions of people are already formally and informally trained to “prompt engineer” Google for their work and personal lives.

Chat isn’t Search. There are three subpoints here in increasing severity:

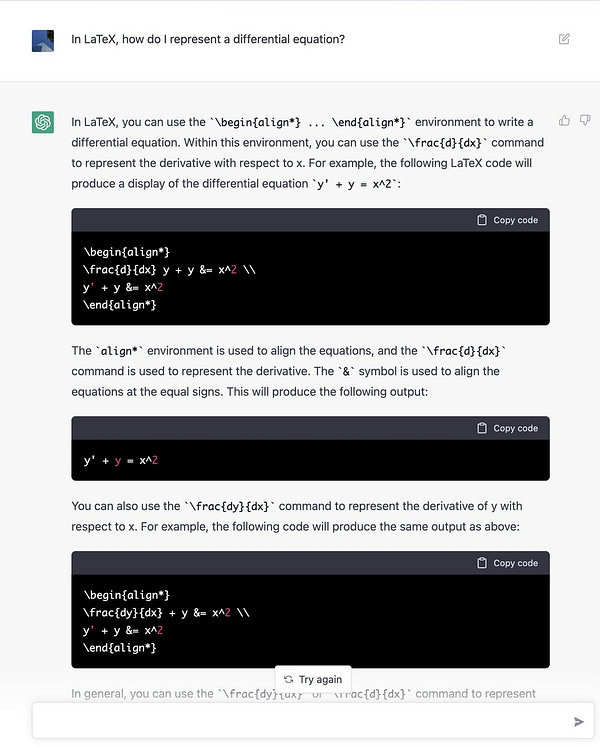

The first is the obvious - Generative AI hallucinates facts and is “confidently incorrect”. LLMs are merely Stochastic Parrots (Emily Bender, Timnit Gebru) producing results that have “no real relationship with the truth” (Gary Marcus). Users have spent months gleefully documenting ChatGPT failing at math, defying spatial logic, agreeing with leading questions, having its safety measures jailbroken, and even getting tricked into thinking it is the human conversing with an AI. Hallucination can be addressed with attributive answers that link back to their source, as competing search engine Neeva AI has done, but the attributions still need to be checked, so there is ultimately not that much time saved.

Let’s say we solve the hallucination problem (which may be AGI-hard). The next issue is one of economics - LLMs are very expensive compared to search queries. Sam Altman has estimated that it costs “single-digit cents per chat” (equal to or more than Google’s estimated 3-3.5 cents of revenue per search), and ChatGPT as a whole was estimated to cost $3 million per month. Google has not been asleep at the wheel on this; a Googler noted that there have been internal studies on deploying this at Google scale and costs need to go down by at least 10x. A close cousin of cost is latency - ChatGPT is 1-2 orders of magnitude slower than a Google search. There is of course plenty of optimization research being done into making GPUs go brrr, and the talking head types might handwave this work away, but it is intuitive that there is some irreducible cost disadvantage of running billion-parameter black boxes on centralized GPU clusters vs 50 years of distributable database indexing and information retrieval research.
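To make the unit economics concrete, here is a back-of-envelope sketch. The 3-3.5 cents of revenue per search and the "at least 10x" cost reduction come from the estimates above; the 5 cents per chat is purely my assumption, a stand-in midpoint for "single-digit cents", and `margin_per_query_cents` is a hypothetical helper rather than anyone's actual model.

```python
# Back-of-envelope unit economics for one AI-answered query.
# Only the revenue range and the 10x target come from the estimates
# quoted in the text; the chat cost is an illustrative assumption.

def margin_per_query_cents(revenue_cents: float, cost_cents: float) -> float:
    """Revenue minus serving cost for a single query, in cents."""
    return revenue_cents - cost_cents

ASSUMED_CHAT_COST_CENTS = 5.0      # assumption: a midpoint for "single-digit cents"
REVENUE_RANGE_CENTS = (3.0, 3.5)   # estimated Google revenue per search
COST_REDUCTION_TARGET = 10         # "at least 10x" per the internal studies

for revenue in REVENUE_RANGE_CENTS:
    today = margin_per_query_cents(revenue, ASSUMED_CHAT_COST_CENTS)
    after = margin_per_query_cents(
        revenue, ASSUMED_CHAT_COST_CENTS / COST_REDUCTION_TARGET
    )
    print(f"revenue {revenue:.1f}c: margin today {today:+.1f}c, after 10x cut {after:+.1f}c")
```

Under these assumed numbers every chat-style answer loses money today and only turns a profit after the 10x cut, which is one way to read why the internal studies landed where they did.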

Assume facts and costs are solved. Then there’s the product question. Let’s wave a magic wand and assume Google bought and integrated ChatGPT. Actually, we already have that - Chrome extensions that sideload ChatGPT answers alongside your Google searches (here and here)! Go ahead and install them… and notice that it isn’t actually that good. Benedict Evans is typically insightful in his thread on the topic - we use Google for much more than just “answer a question” with perhaps some followup clarifications. Sometimes, we really do want a link instead of an answer, which may expose us to information that answers questions we didn’t know we had, or may give us a resource that we were searching for. We’re wildly extrapolating from what is admittedly a very successful tech demo, and haven’t actually solved the form factor of “AI search” that billions of people can use on a daily basis.

Panicking. A “Code Red” is incredibly distracting to the majority of Google’s 150,000 employees who are not working on search. They are getting a constant reminder that their work is no longer a priority of the company, and a formal acknowledgement that there is in fact a 7-year-old startup that is credibly threatening their work and perhaps they should go work there instead (or start their own).

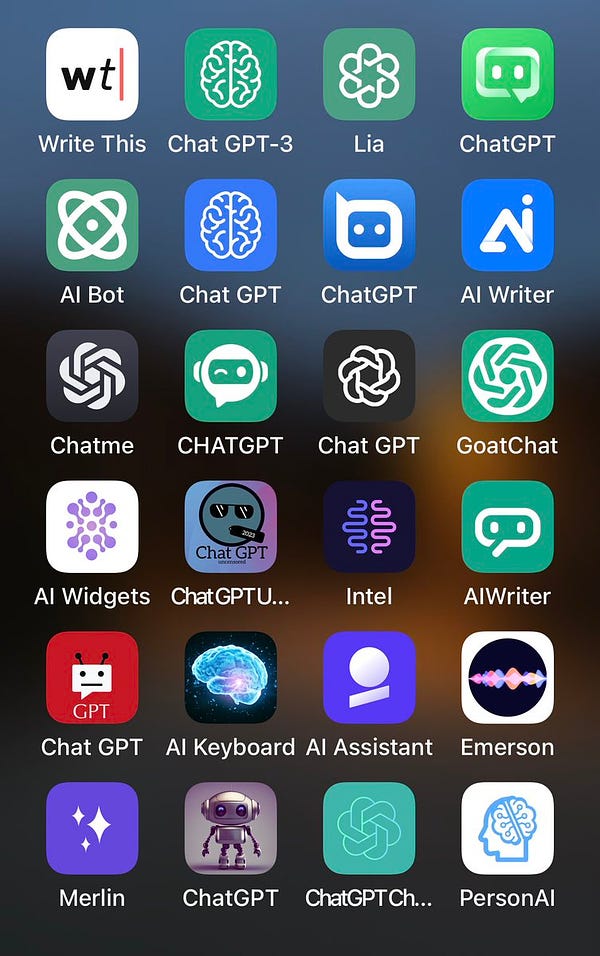

AI is a feature, not a product. Given a proof of concept, any developer can churn out features all day long, but it takes real user insight and good design to turn a bunch of features into a tractable product. Ultimately you cannot ignore the fact that Google is rushing into this “Code Red” reactively, with no fundamental product or UX insight other than ChatGPT’s success, which has only some bearing on the existing Google Search business. Google has done this once before, when a little social network called Facebook was coming up in the world, and Google+ was the sad result. Google’s 16-year run of uninspired chat apps doesn’t give much confidence it can nail this next response to ChatGPT, especially when there is also an army of clones already out in the wild with no success (which is to say, the pending Microsoft BingGPT may also fade out once people discover enough holes).

If you have read this far you might be tempted to agree that, like Colonel Jessup, Sundar Pichai should not have ordered the “Code Red” for a litany of reasons. This is a popular opinion among the antihypers, of whom there are a few:

Let’s now steelman the other side.

Why ChatGPT was worth a “Code Red”

The Death of Links. Google is built on serving you links, and its ads business is based on making ad results as relevant as (and perhaps as visually indistinguishable as) organic results. But you as a user click through links to get through to answers. ChatGPT skips all that and just serves you the answers directly. For a solid set of use cases, this is all you need.

There was a famous Google TGIF all-hands where Larry Page said that Google does better the faster it can serve user queries, up to and including zero latency (I’m Feeling Lucky) and negative latency (answering questions before they are typed, e.g. the famous autocompletes that are now the basis of popular WIRED videos). Google Answer Boxes are the most prominent legacy of that mode of thinking, but it is now apparent that ChatGPT is capable of producing more readable answers and, whether or not Google was capable of matching ChatGPT’s generative capabilities before, it was asleep at the wheel when it came to being first to market with this.

AI Spam. Anyone who has read enough GPT output can start to intuit the dry, not-very-quirky-or-insightful-but-overly-long “high school essay” type of writing it tends to favor. This kind of content spam just got 100x easier to create, and Google will have to wade through it all to maintain quality. Worse still, there is already documented evidence of AI plagiarism where the copied article “won” on popular metrics.

There will be no time to litigate this in human courts of law, so Googlebot will have to be the judge, jury, and executioner of this brave new world. There are existing attempts at AI text detection (OpenAI Detector, GPT-Zero, Originality.ai) and OpenAI itself is working on watermarking GPT output, but it is still extremely easy to evade this detection. This is the great Perplexity race: the better AI text detectors get, the faster that AI text generators adversarially improve.
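For readers wondering what "perplexity" measures here: it scores how surprised a language model is by a piece of text, and the detection idea is that machine-written text tends to look unsurprising. The sketch below is a toy under loud assumptions: it uses a tiny add-one-smoothed unigram model fit on a reference string instead of a large language model, and `unigram_perplexity` is a hypothetical helper, not the method of any detector named above.

```python
import math
from collections import Counter

def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under an add-one-smoothed unigram model fit on `reference`.

    Toy stand-in for a real language model: lower values mean the text
    is more predictable given the reference corpus.
    """
    ref_tokens = reference.lower().split()
    counts = Counter(ref_tokens)
    vocab_size = len(counts) + 1   # +1 slot shared by all unseen words
    total = len(ref_tokens)

    tokens = text.lower().split()
    if not tokens:
        raise ValueError("text must contain at least one token")

    # Sum of log-probabilities; Counter returns 0 for unseen words.
    log_prob = sum(
        math.log((counts[tok] + 1) / (total + vocab_size)) for tok in tokens
    )
    # Perplexity is the exponential of the average negative log-probability.
    return math.exp(-log_prob / len(tokens))

reference = "the cat sat on the mat the cat ate"
print(unigram_perplexity("the cat sat", reference))         # predictable text
print(unigram_perplexity("quantum zebra flux", reference))  # surprising text
```

A detector built on this idea would flag text whose perplexity falls below some threshold, which is exactly why it is easy to evade: a generator only has to be nudged into slightly more surprising word choices.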

Google Search is Dying. People are hungry for a Google competitor. Google Search is Dying was one of the top posts of the year on Hacker News, and appending “site:reddit.com” to searches to get around heavily SEOed junk was a top meme:

“If you can make God bleed, people will cease to believe in Him”. The worsening quality of Google has been an open secret for a while, but it took the rise of a credible alternative (in a new form factor) to actually draw first blood.

Total domination or bust. It could be that the rational Code Red policy for Google is an overwhelming and disproportionate response to any nonzero probability of a threat. It is worth remembering that Google pays Apple $18-20 billion a year to be their default search engine, knowing full well that most users would manually select Google out of brand loyalty anyway. If Google is willing to pay 7% of overall Google revenue to do that, what will it not do to ensure total and complete domination of the search market[6]?

And it's not just Google Search at stake - the future of Google Cloud Platform is too. Reports have said that OpenAI was spending $70m/year on GCP before the 2019 Microsoft deal that lured it away; now the AI crown is firmly on Azure’s head and GCP is once again the forgotten child of the big three clouds. This cannot continue.

Permission to Innovate. No tech strategy blogpost would be complete without citing the late great Clay Christensen. It is the popular view that Google is subject to the Innovator’s Dilemma, where incumbent companies unavoidably get disrupted by startups while making entirely rational choices given their situation. The previously quoted discussion at Google that concluded that LLMs weren’t ready to deploy at Google scale assumed a profit-making incentive at Google scale. This was a rational conclusion in peacetime, but the “Code Red” serves as a declaration of war that suspends normal logic in service of an existential threat. That said, this declaration is only unilateral: it is unclear if Google’s users (who have high expectations of privacy, speed, and quality) will give Google the permission to innovate as fast as an independent startup.

If you were playing the public perception game, letting the news of a “Code Red” leak to, I don’t know, say the New York Times, could be exactly what you would do if you were trying to obtain such permission from the general public.

Opportunity. Lastly, from the Google point of view, I think it would be myopic and not constructive to look at the increased public interest in AI and LLMs as purely a threat. It is also an opportunity to showcase the decades of groundwork that Google has been laying, to wow users and to earn their trust for another generation. The consensus is that ChatGPT is as foundational a platform shift as the Internet was in the ’90s and the iPhone was in the 2000s. There is also another, more niche (Masad, Karpathy) view that transformers are a new type of computer. Put those two together and it is fertile ground for a Cambrian explosion in new tech.

AI Moloch

The final analysis that is worth mentioning when discussing Google’s handling of AI is the domain of AI Ethics. OpenAI and ChatGPT may have set in motion an unstoppable rollercoaster that results in AGI that we don't understand or control[7].

When I mentioned the Stochastic Parrots paper above, I didn’t mention the wave of drama around Timnit Gebru and Margaret Mitchell’s firing from Google over it. The full story is told in this podcast with Emily Bender, but the story repeated in 2022 with Satrajit Chatterjee, who was questioning a paper on AI accelerator chip design published in Nature. We may never know the full story from both sides, but from the outside it seems that every time AI progress has been questioned inside Google, there is an ever-present threat of firing instead of support.

Now the “Code Red” has been declared against Microsoft and OpenAI, both of which have made efforts towards AI safety but, in the race to ship products and make money, may also abandon rigor.

The adversarial AI arms race against a looming backdrop of existential AGI danger makes me think often about Scott Alexander’s Meditations on Moloch, elegantly explained by Liv Boeree:

Safe or not, it’s out of our control now; Sundar Pichai’s “Code Red” has birthed AI Moloch, and you and I are in for the ride.

Acknowledgements

Thanks to Alessio and Paul from the devtools-angels Discord for reading drafts and providing valuable feedback.


[1] I was surprised to learn that the movie A Few Good Men was based on real events and a real life Marine “Code Red”. And now you know!

[2] We discuss only the generative language AI progress here, but Google has also been publishing state of the art research on other modalities, including Imagen, Imagen Video, Phenaki, Parti, AudioLM, and more.

[3] Although they have mostly moved on and only Llion Jones remains:

[4] To explain poorly, the basic principle is that most software runs on commodity hardware and therefore is constrained by lowest common denominator assumptions. There are very few people willing to and capable of making custom hardware, therefore it is a relative advantage to be so good at optimizing your software that you also optimize the hardware it runs on.

Quoted by no less than Steve Jobs at the 2007 iPhone launch:

[5] In other compute hardware news, Google is also working on Coral, an on-device AI chip, and Sycamore claimed quantum supremacy in 2019.

[6] No, Senator, sorry, I didn’t mean that! Google has plenty of competitors!

[7] If you ask Sam Altman, he’d respond that it was inevitable anyway. Sam reportedly has 0% equity in the for-profit entity and OpenAI is still a nonprofit at its core, however much the outside world tends to brush over the stated mission of alignment and safety.

Tagging followup links here; a Google-focused essay on what is ailing Google (and perhaps why it needs a hard reset): https://medium.com/@pravse/the-maze-is-in-the-mouse-980c57cfd61a