The Unbundling of ChatGPT (Feb 2024 Recap)

Have we seen Peak ChatGPT? Also: our usual highest-signal recap of top items for the AI Engineer from Feb 2024!

This is the 7th in our monthly recaps for supporters. Jan 2024 is now archived. See the rest of the month-by-month must-reads here! Also don’t miss our audio discussion of Feb 2024 news on the Jan-Feb Audio Recap episode.

Our next SF event is AI UX 2024 - let’s see the new frontier for UX since last year!

Remember the heady days of early 2023, when ChatGPT was going through the roof, and shameless #foomers were breathlessly tweeting about how it reached 100m users in 2 months? And remember your eyebrow raise when, nine whole months later, sama reported the same user numbers at Dev Day?

Would it surprise you if ChatGPT had zero growth for the past year?

If these estimates are true, “Peak ChatGPT” was heralded by the release of LLaMA 1 (leading to an epic $830B jump in Meta’s valuation), the first “good enough” (GPT3-level) open source LLM, soon followed by Llama 2 in July (our coverage here). Meanwhile, LLaMA 1’s lead Guillaume Lample departed and co-founded Mistral, which became the New Kings of Open Source AI with Mistral 7B in September, then Mixtral just ahead of NeurIPS in December. We’ve just celebrated Latent Space’s first anniversary with “Compute Month” - an oral history of the GPU Rich landscape with Together, Modal, Replicate, and Meta enabling the “open AI” counterweight to OpenAI.

Fast forward to 2024 (past a quiet January), and people get hundreds of likes when they cancel ChatGPT, and trust in the brand-new GPTs is at an all-time low [1]. There are hints of encouraging new features with Advanced Data Analysis V2 and new persistent memory, but neither has shipped more than a month later. While incidents for a rapidly scaled service are inevitable, ChatGPT’s reputation has been hurt by persistent accusations of laziness (fixed with no explanation), a bloated system prompt, and new cases of going off the rails (fixed with an unsatisfying explanation). The competition has heated up, too: both Claude 3 and Gemini Pro 1.5 (and even Magic.dev?) now offer up to 8x more context length and far better recall than GPT-4T.

But perhaps all the AI growth in the past year has gone somewhere else [2].

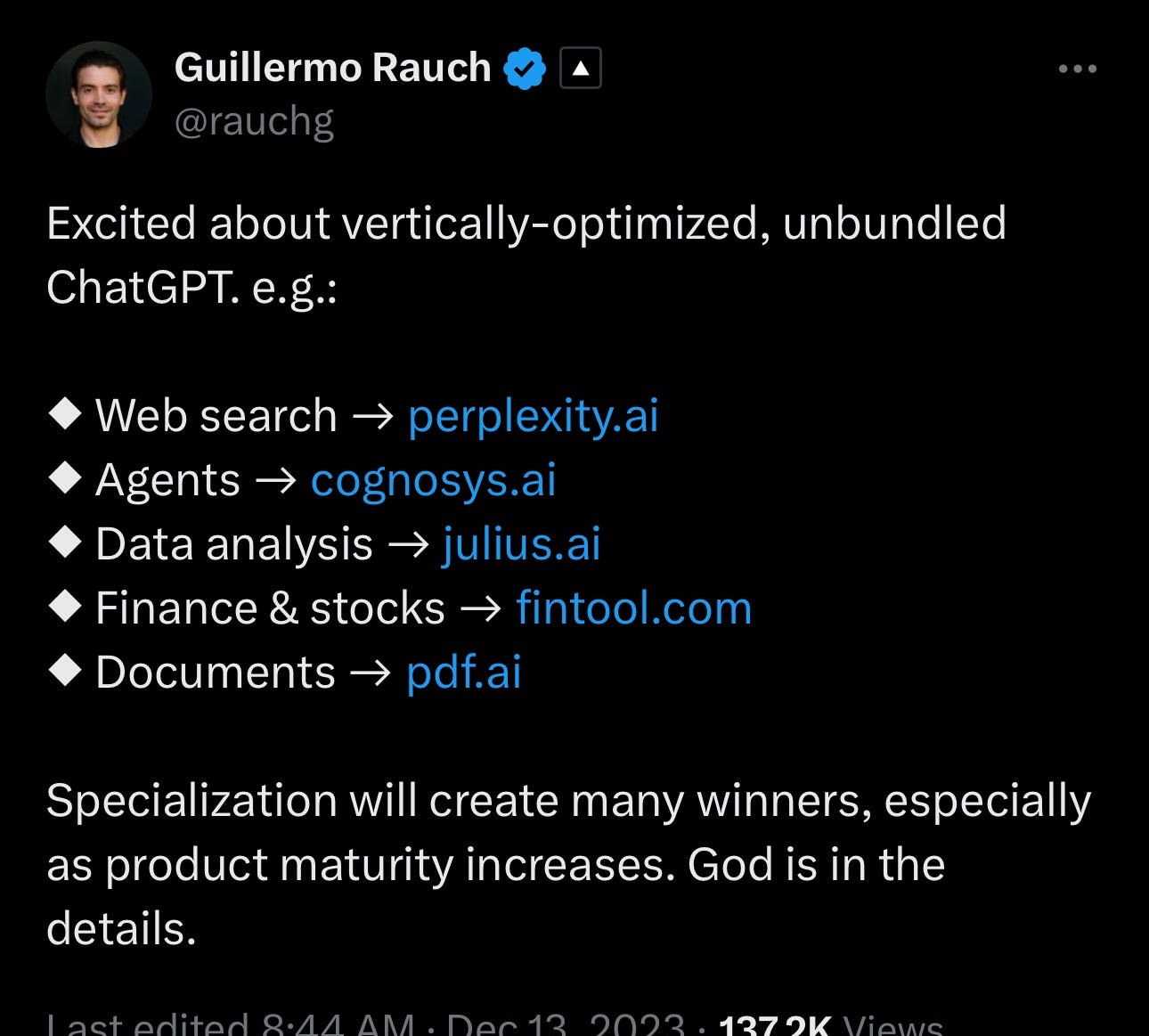

In almost every ChatGPT use case [3] there is now a wealth of verticalized [4] AI startups dedicated to addressing the needs of users better than the stock ChatGPT interface and bundled tools can: better UI options (eg IDEs and image/document editors), better native integrations (eg for cron-repeating actions), better privacy/enterprise protections (eg for healthcare and finance), and more fine-grained control (the default RAG of GPTs is naive and non-configurable). We’ve used the current ChatGPT GPT Store as a basis for identifying the biggest use case categories, but of course there are many not pictured that are also riding the new specialization meta.

Heads I win, Tails I win — slightly less

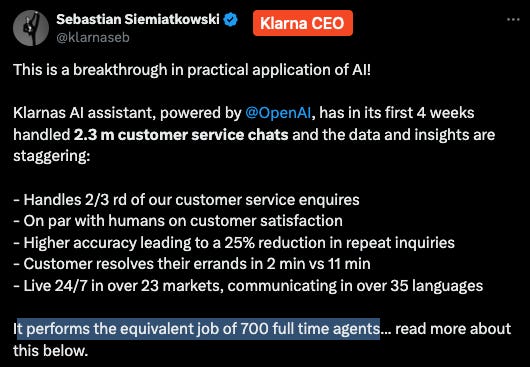

One might observe that there are 2 ways to make money in AI - bundling and unbundling AI capabilities. OpenAI is winning in its representation of bundled AGI - aesthetic image generation included - but it also wins when verticalized startups build with the OpenAI API anyway. Klarna was a notable success story this month, putting the power of ChatGPT inside their app rather than putting Klarna inside ChatGPT.

In some sense the B2B and B2C halves of OpenAI compete with each other, and that, I am sure, is somewhat healthy competition. The only “downside” is that OpenAI gets to train on RLHF data from ChatGPT, and it loses out on that data the more traffic migrates outside those walls, where its models also become easier to swap for open source models.

You could view the new GPT store as an attempt to recapture those verticalized needs: instead of moving off platform and paying $20/month all over the place, why not stay inside ChatGPT, pay once, and let OpenAI split the as-yet-just-theoretical revenue with GPT creators? But GPT creators are wise to this - most have figured out monetization (just as plugins did before them) and only publish “lite” versions to ChatGPT to serve as funnels to their main platforms.

In the game console business, it is well understood that purchase decisions are often driven by platform exclusive games. In some sense, the future of ChatGPT hinges on platform exclusive models. If (not when) Sora gets a public release, and when GPT-5 gets a public release, they will almost certainly debut on ChatGPT before anywhere else, and that will drive the next leg up in demand and adoption.

Latent Space News

Feb 1: Why StackOverflow usage is down 50% — with David Hsu of Retool

Feb 8: Cloud Intelligence at the speed of 5000 tok/s - with Ce Zhang and Vipul Ved Prakash of Together AI

Feb 16: Truly Serverless Infra for AI Engineers - with Erik Bernhardsson of Modal

Feb 28: A Brief History of the Open Source AI Hacker - with Ben Firshman of Replicate

We also held Latent Space Final Frontiers and appeared at 3 SF/SG meetups.

And here’s last month’s recap if you missed it:

Industry News

The raw notes from which we draw everything above. You can always see the raw material on GitHub, and of course we don’t see everything, so check the other newsletters and podcasters that do.

We are pivoting the format of the news recap, adding only commentary on notable events not covered by the theme of the month - you can always read the raw Monthly Note for items that didn’t make our cut but still caught our attention.

Frontier Models

OpenAI Sora: If you haven’t read the official blog, details and examples, and HN, you should. Also note the imperfections and the disagreements between Yann LeCun and Jim Fan on world models. The live demos were also notable:

and on OpenAI’s new TikTok and Instagram accounts (dog, pizza)

Bill Peebles (diffusion transformer author) selection

The Sora team did 2 public interviews we know of - one with MKBHD and one at UC Berkeley. Neither dropped significant knowledge. WSJ did another, more controversial one with Mira Murati that also contained little technical detail.

Gemini Pro 1.5: RIP Bard, Gemini 1.5 rocked the world (official blogpost, technical report, HN):

“Gemini 1.5 Pro comes with a standard 128,000 token context window. But starting today, a limited group of developers and enterprise customers can try it with a context window of up to 1 million tokens via AI Studio and Vertex AI in private preview.” — we don’t know exactly what these innovations are but the community is focusing on RingAttention.

Tons of user hype — however it was slow and not magical when I tried it

Gemini's performance improves as I add dozens of examples. There doesn't seem to be an upper limit. Many-example prompting is the new fine-tuning.

"just tried with a paper and asked it "what does Figure 5 show?" it contextualized the whole thing and answered based on that tiny section + figure." - Sully Omarr

"I uploaded an entire codebase directly from github, AND all of the issues. Not only was it able to understand the entire codebase, it identified the most urgent issue, and IMPLEMENTED a fix." tweet

"I fed an entire biology textbook into Gemini 1.5 Pro. 491,002 tokens. I asked it 3 extremely specific questions, and it got each answer 100% correct." tweet

"I recorded myself lifting weights. I fed the video into Gemini 1.5 Pro and asked it to write JSON for each exercise’s name, set count, rep count, weight, and to generate form critiques. Worked perfectly." tweet

"I fed Google Gemini 1.5 Pro the last 8 quarters of Amazon shareholder reports and call transcripts. Wow. "What was an Amazon focus for 2022 that is weirdly absent from the 2023 shareholder calls and reports?"" tweet

"I uploaded the source code for the text adventure game, Zork, and asked Gemini 1.5 to play it with me. The model walked me through the game play -- but also made changes and modifications based on the questions I asked." tweet

Open Models

Mistral Large (decent benchmarks, beats GPT3.5 but just shy of GPT4)

Google Gemma (HN, good Interconnects recap)

labeled “strong” by Yi Tay

Stable Diffusion 3 - just announced not released

StarCoder 2 and The Stack v2 - sequel to one of the most popular open source code models

Open Source Tooling

"My benchmark for large language models" - Carlini

NotesGPT: OSS voice notetaking app. Powered by Convex, Together.ai, and Whisper.

Danswer (YC W24) – Open-source AI search and chat over private data: an open source and self-hostable ChatGPT-style system that can access your team’s unique knowledge by connecting to 25 of the most common workplace tools (Slack, Google Drive, Jira, etc.).

Other Launches

Groq runs Mixtral 8x7B-32k at 500 T/s. Groq employees were all over HN and Reddit

swyx recap vs Dylan Patel - Groq employees insist Dylan is wrong? Stratechery recap

Vercel streaming RSC UI components - swyx capture of live demo

Retell AI launch - Conversational Speech API for Your LLM, talked up by Garry Tan and Aaron Levie

Fundraising

Moonshot AI (aka YueZhiAnMian in China) raised $1b at a $2.5b valuation "for an LLM focused on long context".

Anthropic raised $750m at $18b valuation from Menlo Ventures

Together AI raised $100m at $1.25b valuation - 2x from previous round 4 months ago - later confirmed by Together

Lambda Labs raised $320m series C (twitter) with USIT

Figure robotics AI $675m round at roughly $2b valuation, led by OpenAI and Microsoft and joined by Bezos and Nvidia (twitter)

Figure raised a $70m seed last year and was previously rumored to be raising $500m

Magic.dev $117m series A with Nat and Dan

Photoroom.ai raised $43m (bubble?)

LangChain $25m series A with Sequoia

and LangSmith GA

covered in Forbes

DatologyAI $12m seed (tweet) - a data curation startup

investors such as Jeff Dean and Yann LeCun. notable list

Meme of the Month

With the Gemini image generation fiasco taking up a lot of public outrage, this was a very competitive month, and some are too spicy for us to feature but deserve an honorable mention. Our family friendly Meme of the Month award has to go to the people behind GOODY-2, the safest, most ethical, actually working, AI chat model in the world, complete with industry-leading responsible model card disclosures:


I also conducted an unscientific Product Market Fit poll, and ChatGPT currently falls short of Sean Ellis’ recommended 40% “very disappointed” level for PMF. Choosing to stick this datapoint in footnotes because 1) at 38% ish it’s close enough, and 2) it is just anecdata among my followers, who are over-indexed on ChatGPT alternatives and flaws (or are they canaries for what the general population will think?).

We recognize that our unbundling diagram is “unbalanced” - but put that version as the canonical one because of how important GPT RAG and the mobile app voice chat are to some people dear to us. Here’s the “balanced” version without the extras that will probably look better for thumbnailing.

For those who are unfamiliar with the “vertical” vs “horizontal” split, I wrote an intro to this terminology in my book. Here ChatGPT is “horizontal”.