The Most Dangerous Thing An AI Startup Can Do Is Build For Other AI Startups

How Codeium went from 0 to >$10m in ten months, and what enterpriseready.io got wrong. A comprehensive braindump on how to be Enterprise Infra Native!

From swyx: I’m glad to welcome back Anshul as our first ever “returning returning” guest author! His first and second posts on AI product thinking were hits on Latent Space and Codeium has >10x’ed its install base and raised a $65m Series B and $150m Series C since our last catch up. Today, they are launching Windsurf, their own Agentic IDE - at first glance “just” a VS Code fork like many before them. I can vouch that today’s piece was written without promoting Windsurf in mind, but as an outside observer it is remarkable how the principles here align — move more deliberately and build for AI in the Enterprise on Day 1. Yet many other “Enterprise AI” companies also feel soulless and vague, bc it is incredibly hard to thread the needle of staying interesting but safe.

In a world of many similar-looking AI products (they literally started by building another Copilot), Codeium is literally “built different”, and we are incredibly lucky to have them explain how they are building -as they build-.

We are still taking questions and messages for our next big recap episode, and organizing OpenAI DevDay Singapore, NeurIPS Vancouver, and other online meetups on our calendar. Join us!

The rest of this piece is from Anshul. All illustrations are my poor attempts to visualize.

In November 2022, I wrote my first guest blog post for Latent Space, and (admittedly optimistically) hoped it would be the first of a three part series:

Part 3: How to make money with your AI product ← we are here!

Well, our enterprise product went from 0 to over $10M in ARR in less than a year, so it’s time for Part 3. And perhaps surprisingly, it isn’t simply what sites such as enterpriseready.io say to do.

I had theses for all three of these back in November 2022, but when I wrote the first post, I had a slight problem. We had only just launched Codeium, so while I could speak to the first thesis that a strong product strategy comes from a deep understanding of the economics and use case details, we were not necessarily differentiated from other AI code assistants at the time (every tool was just autocomplete, if you can remember what that was like) and we were definitely not making money. Two years later, it has become more and more clear that companies need to go much further than just a model API call for both quality and cost reasons, and topics like task-specific models, hosting open source models, RAG, and more have all taken off.

Summer 2023 rolled around, and we had already GA’d our in-IDE chat experience, with features like code lenses and direct insertion into code that were completely novel at the time (again, try remembering what not having these things was like). It would be months before GitHub Copilot would GA chat. By that time, I was confident enough that I was right in the second thesis - that UX would be key to creating differentiated moats, both in the short and long term. A bit over a year later, I would argue this is also more true than ever, with people really picking up on how important it is to have a very intuitive and powerful user experience that somewhat hides the complexities of the underlying model and reasoning. Take a look at Perplexity or Glean, or even in our space, a Zed or Cursor. Good tech, but great UX.

At the time though, there was still a slight problem when it came to writing about my third thesis. Codeium for individuals was, and still primarily is, free, and we had just launched our paid enterprise product, so we weren’t necessarily making money yet. So I wasn’t in a position to write about how to make money with an AI product. Opinion without evidence is speculation.

Well, as mentioned earlier, our enterprise product hit eight figures in ARR in less than a year. In the B2B software world, that’s… fast. So, here I am, a year later, hoping to go three for three on my insights about generative AI products.

So, what’s the third thesis? If you want to make sustained money in the generative AI world, you must be “enterprise infrastructure native.”

What is Enterprise Infrastructure Native?

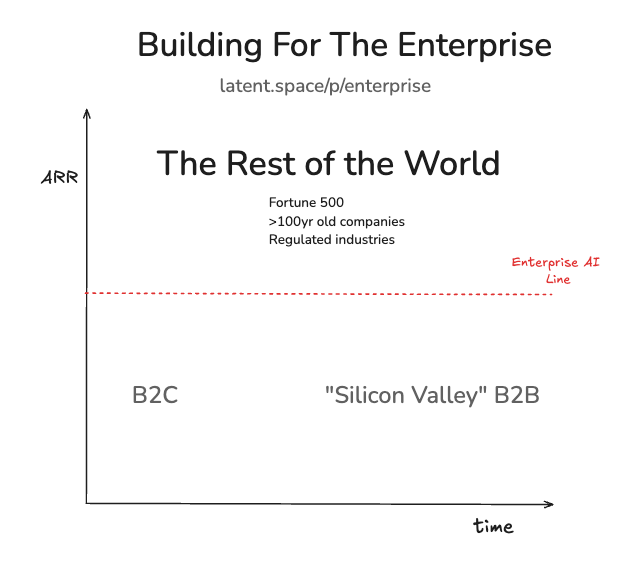

Being enterprise infrastructure native means that the company has built the muscle from the very beginning to make its product available in even the gnarliest enterprise environments. The Fortune 500. The regulated industries. The centuries-old companies.

In San Francisco and Silicon Valley, we often get stuck in a bubble. For example, if we were building a code assistant for enterprises, and just thought about Silicon Valley companies, we would be missing where the money is. The top 10 US banks employ far more software developers than all of FAANG. And that’s just a handful of banks. And just the US. JPMorgan Chase alone has over 40k technologists. A quick Google search shows that Meta has around 32k software engineers in its workforce.

And the reality is, these non-tech enterprises have a lot of constraints that do not appear for an individual user or a “digitally native” Silicon Valley tech company. To get an MVP out, iterate as fast as possible on the product, and still have users, it is natural to choose to eliminate these constraints and just focus on the Silicon Valley user.

The secret of an “enterprise infrastructure native” company is that they do not cave in to this natural urge to ignore these constraints in the beginning. Why? It is way harder to introduce these constraints later.

Of course, it is harder because you might have made incorrect design decisions. For example, you may have architected the system in a way that is hard to containerize and deploy in a self-hosted system later (classic SaaS to on-prem problem), which is what a lot of these large regulated companies want. Or forgotten about role-based access controls and other security considerations because you just assumed every employee could see all data. Or used systems that wouldn’t pass HIPAA compliance, forgot to add auditing systems, or ignored building deep telemetry.

But there are other meta reasons why it is difficult to correct course later. For one, you may not iterate against the full state of reality, and an enterprise simply looks different from a consumer. For example, in our AI coding space, if you only iterate for the individual developer, you will never realize the importance of a very advanced reasoning system that can process a large, sprawling, and often antiquated codebase. That’s not what your hobbyist, Silicon Valley developer building a zero-to-one project is working on.

And for another, you may grow your team fast enough that you don’t have the right expertise to iterate on these constraints. For example, to do generative AI on-prem in order to satisfy that security constraint, we need GPUs on-prem, but this means we will have limited compute (no one is buying a cluster of H100s for most applications). This means we need to do a lot of really important infrastructure optimization tricks to make up for the fact that we can’t just “scale compute” in the background. If the entire team looks like a product team at this point, not a vertical team with expertise in GPU infrastructure, then you are not set up to succeed. If you move faster early on by ignoring a bunch of constraints, you will build the wrong architecture and team for the inevitable enterprise shift later. This is similar to why pre-generative AI companies are generally having difficulty adapting to this new paradigm - they don’t have the workforce that thinks about generative AI as a core part of its DNA.

Some products require “enterprise infrastructure native” teams by default, since they are, by definition, only for these kinds of enterprises. For example, Harvey doesn’t make sense for individuals. But the vast majority of problem areas have a choice on whether to consider these constraints in the beginning or not. Enterprise infrastructure native companies consciously make the choice to work against the totality of these constraints.

I do want to be clear about one thing - being “enterprise infrastructure native” does mean it takes more effort to iterate on a product. There are simply more constraints. That being said, if this instinct is built into the DNA of the company from the beginning, it simply becomes a way of doing things, you will end up building the systems to make it easier to iterate with these constraints from the beginning, and you eliminate a massive looming cultural challenge. At the same time, this does not mean that you have to wait on releasing a feature until it works for every enterprise. For example, we have SaaS enterprise tiers that are faster to deploy features to and get feedback on. To be “enterprise infrastructure native” means that at least some people in the company should be actively thinking about how to eventually make the feature available to all enterprises.

The reason why I call this enterprise infrastructure native and not just enterprise native is to, unsurprisingly, emphasize the infrastructure piece. The vast majority of additional constraints manifest as technical software infrastructure problems, and so this highlights the part of the stack that needs outsized focus if you are to do this well, which we will get to shortly.

This is why I have always thought that the advice on sites such as enterpriseready.io misses the mark. While yes, you do have to think about things such as change management, role-based access controls, SLA & support, and more in order to make your SaaS tool enterprise-ready, these guides come across as “how do you patch a product that you have already built” rather than “how do you build the right product from the beginning.” The reality is that sometimes premature optimization DOES pay off. I think in the age of generative AI, it is even more dangerous than with traditional SaaS products to take the “patch your product to be enterprise ready” approach because the introduced constraints are even gnarlier, and the space is moving so fast that you may not have time to patch your tool before someone who has been thinking about the product correctly captures the addressable market.

Why the “Non-Tech” Enterprises?

If you’ve reached this point, you may be holding on to one core question - all of this talk of selling to non-tech-native enterprises, but what about the B2C market or more digitally native tech companies? Why does there seem to be a disregard for making money in these markets? It is important to discuss why the revenue for generative AI startups for the foreseeable future will come from the non-tech enterprises.

The first argument for enterprises rather than individuals is that enterprises have always had the money for software. Microsoft obviously has a large B2B arm, but Google and Facebook also make a gigantic chunk of their revenue from enterprise advertisers. If you are building a product with generative AI today, the value proposition is likely something about getting more work done or doing more creative work. Enterprises are willing to put big bucks behind that value proposition, and convincing just a few big enterprises will be equivalent to convincing many thousands to tens of thousands of individuals.

The first argument for non-tech enterprises over tech enterprises is that all of the big tech companies are taking AI very very seriously, and have massive war chests to try to build themselves. It isn’t just the FAANG companies - the availability of open source LLMs and easy-to-use LLM-adjacent frameworks makes building more attractive than buying for companies that have the DNA of building. And all of the big tech companies are trying to capture the market opportunity themselves. Imagine us trying to sell Codeium to Microsoft. Not great.

This interest from big tech to build generative AI products should also scare anyone going B2C because big tech already has gigantic B2C bases and phenomenal distribution. We see this already. Perplexity is trying to take on Google in the B2C search market, and has to do some questionable things, like using Google in the backend. On the other hand, Glean is going for the B2B search market, and is doing quite well at it with not a whole lot of movement from big tech just yet.

This interest from big tech also makes it tougher to compete for tech-native enterprise business, since these often have fewer constraints than the non-tech-native companies. Big tech companies are fast enough to solve for the easier constraints, but startups can differentiate by using their speed to solve the more complex constraints that a non-tech-native enterprise would have. We see this at Codeium all the time. Even though almost every big tech company has a code assistant product, we end up not competing with any of them for many of the non-tech-native companies because our product uniquely works with the constraints that these companies have. We’ll elaborate in the next section on what those constraints are and how to solve for them.

I know what folks may be thinking about right now. But what about OpenAI? They have been wildly successful at B2C with ChatGPT. I will be the first to admit that OpenAI is a really big exception given how long they have been working on this technology, which, if you are building a product starting now, is something you will not have. And actually, people are now wondering whether OpenAI really will be able to sustain customers in the long run as users try other models (ex. Anthropic’s Claude 3.5 Sonnet seems like a hit with developers) and as users try chat-based products other than ChatGPT (ex. Perplexity). The B2C market is unforgiving.

How to be Enterprise Infrastructure Native

Ok, hopefully by now I have convinced you of the logic behind being an enterprise infrastructure native company. But what does that actually mean? What are the considerations to actually be aware of from the beginning?

Luckily, this is where I can fall back to our learnings at Codeium.

Data is one of the underlying reasons for a lot of the enterprise infrastructure native considerations. Unlike existing SaaS solutions where the data, while perhaps sensitive, is traceable through the system, the big black box of the LLM adds a lot of uncertainty, both in terms of lots of data being used to train and lack of clarity on the traceability of the data being generated.

Security

For a generative AI tool to work, you are processing some private data. It is guaranteed that every enterprise has existing policies on the privacy and security of the particular data that your tool is processing. For example, we at Codeium process code, and if the company uses a self-hosted version of an SCM, then they have already demonstrated that their default policy for their code is to not send it out to third-party servers, even if the third-party has all of the accreditations such as SOC2 compliance.

The most important aspect to think about infrastructurally is the set of deployment options you should provide. Very often, you will either need a fully air-gapped self-hosted deployment option, or at the least, a hybrid version where any persisted private data (or information derived from private data) stays within the customer’s tenant. You should think about containerizing the server side of your solution so that you can deploy it simply (Docker Compose for a single node system, Kubernetes/Helm for multi node systems), as well as making sure there is functionality from the client side to securely point to the customer’s server instance. There are many challenges with these deployments, especially the self-hosted one. Some of these include:

Making sure you set up your own image scanning so that there are no security vulnerabilities, even something simple like Google Artifact Registry. The customer will use their own scanners too, and there are a bunch of different ones, so expect that.

Setting up a system of continuous updates and releases (most customers prefer a “pull image” update solution rather than a “push image” approach from the vendor). The frequency is really up to you on how quickly you believe your product evolves - if there is a lot of UX change, maybe better to cut a release every couple of weeks, but if the UX is relatively stable and it is mostly more research-y open-ended reasoning improvements, then monthly or less should be fine. The one general benefit of faster release cycles is that if there is a bug in a release, there is less time before a fix is in a stable release (as opposed to some separate patch if it is that serious).

Making sure that you can work on machines that the hyperscalers and on-prem OEMs can provide. There is a whole article I can write about the underlying politics of who gets what GPUs, but you often see disparities like 1xA100 and 2xA100 instances being available on Azure and GCP, but only 8xA100 instances being available on AWS. Right-sizing expensive GPU hardware to minimize your solution’s TCO is critical to create a better ROI story.

Simply helping customers through what will be one of their first GPU deployments.

However, even if you think that self-hosted or hybrid deployments are too complex, there are a number of attestations and certifications that you will need to think about for your SaaS solution. SOC2 Type 2 is table stakes, and this requires setting up controls and being audited for adherence to those controls over a time period, so expect a multi-month lead time before getting this attestation. ISO 27001 takes this one step further, and is often asked about by European companies (although because of GDPR requirements, even with this attestation you might want to consider hosting your solution on servers located in the EU). Finally, if the federal government is a potential customer (there is $$$ here), you will need to think about FedRAMP (for civilian agencies) and Impact Levels (for the DoD). To get those certifications, you will need to do a bunch of the same things, like containerizing your application, that you would have to do for a self-hosted deployment, and this is a lot harder to do if you built your SaaS application without this in mind.

Finally, this is a bit obvious, but if you end up processing customer data on your side, just don’t train on that data. It sounds simple, but that is still the biggest fear of customers as that risk still dominates media headlines. This will be asked for in every contract (or added in redlines if you don’t include it). We have never even tried to give discounts in exchange for the right to train our general models on the customer’s private data, because there has never been any indication that people would be open to this. This software is not so expensive that a company would even consider the tradeoff between discounts and IP privacy, especially in a world where case law is yet to be established.

There’s also a moment of what some may view as an unfair reality. While a lot of companies do use cloud services from the big tech companies, potential customers have a lot less trust in the security of a startup, and so may require more from a security aspect than they would with the big tech companies. This requires you to be honest about your solution’s capabilities across deployment options. Very often your self-hosted option will not be as feature rich as your SaaS hosted option, and so are you putting yourself in a tough situation by piloting your self-hosted version versus one of the big tech competitor’s SaaS version?

Compliance

All you have to do is search up “generative AI lawsuit” and you will know what I mean here. Because these LLMs are trained on vast amounts of data, and because it is impossible to trace the outputs of these probabilistic systems to particular training data examples, there are a whole host of new compliance issues that did not exist beforehand.

For one, many models are trained on data that they likely shouldn’t be, such as copyrighted images or non-permissively licensed code. Many enterprise legal teams are apprehensive about using such systems if that would open them to being implicated in a lawsuit down the road (this space is very behind in terms of case law). So, if you are enterprise infrastructure native, you will have the ability to control the data that you use to train models, whether you are training your own small models or performing any additional task-specific pretraining on open-source models. Think about building data sanitization measures so that you can confidently state to enterprises that you do not train on copyrighted material. Go the extra mile here - for example, we remove all non-permissively licensed code from our training data, but also remove all remaining code that is within a small edit distance of code that was explicitly non-permissively licensed (in case someone else copy-pasted code without attributing it properly). Demonstrating proactive thoroughness, even though the models are inherently probabilistic, adds a lot of credibility with an end customer, whose legal team could shoot you down before you deliver any value.
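To make the edit distance idea concrete, here is a minimal sketch of that kind of filter. It is an illustration only, not Codeium's actual pipeline: the `PERMISSIVE` allowlist, the `sanitize` function, and the 10% threshold are all assumptions made for the example, and the pairwise comparison would need indexing or hashing tricks to work at real corpus sizes.

```python
# Hypothetical allowlist; a real pipeline would rely on a proper license detector.
PERMISSIVE = {"MIT", "Apache-2.0", "BSD-3-Clause"}


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance computed with a rolling row of the DP table."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete from a
                            curr[j - 1] + 1,      # insert into a
                            prev[j - 1] + cost))  # substitute
        prev = curr
    return prev[-1]


def is_near_copy(code: str, blocked: str, max_ratio: float = 0.1) -> bool:
    """True when the two snippets differ by at most max_ratio of the longer one."""
    longest = max(len(code), len(blocked), 1)
    return edit_distance(code, blocked) / longest <= max_ratio


def sanitize(samples):
    """samples: list of (code, license) pairs. Returns the code kept for training."""
    blocked = [code for code, lic in samples if lic not in PERMISSIVE]
    candidates = [code for code, lic in samples if lic in PERMISSIVE]
    # Drop permissively labeled code that looks copied from blocked code.
    return [c for c in candidates
            if not any(is_near_copy(c, bad) for bad in blocked)]
```

In this sketch, a file labeled MIT that differs from a GPL file by a single character is dropped along with the GPL original, which is the "copy-pasted without attribution" case described above.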

Oh, and on that topic, don’t violate other companies’ terms of service. OpenAI’s terms of service state that you cannot use the outputs of their models as training material. So don’t do that. You’d be surprised how many people ignore this.

This is a lot of proactive work that you can do, but discerning enterprises will catch the fact that these models are probabilistic, and will want some sort of guarantees on the data that is produced. This is where you have to get clever with building attribution systems, likely based on some heuristics of edit distances and similarity scores. For example, we built more advanced attribution filtering than just the naive string match approaches that other tools take. This is often still not enough. We built attribution logging for any permissive code matches, audit logging for particular regulated industries, built-in support to provide a BAA to healthcare companies, and more. All of these turn into infrastructure problems very quickly.
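As a rough illustration of what goes beyond a naive string match, the sketch below normalizes whitespace and then scores generated code against a set of known snippets, returning records that could be written to an attribution log. Everything here is assumed for the example: the `attribution_matches` name, the snippet dictionary layout, and the 0.85 threshold. It uses the similarity ratio from Python's standard `difflib` as a stand-in for whatever scoring a production system would use.

```python
import difflib


def normalize(code: str) -> str:
    """Collapse all whitespace so formatting changes do not hide a match."""
    return " ".join(code.split())


def attribution_matches(generated, known_snippets, threshold=0.85):
    """Return log records for known snippets that closely resemble the output.

    known_snippets: list of dicts with 'source', 'license' and 'code' keys
    (a hypothetical layout chosen for this example).
    """
    target = normalize(generated)
    records = []
    for snippet in known_snippets:
        score = difflib.SequenceMatcher(
            None, target, normalize(snippet["code"])
        ).ratio()
        if score >= threshold:
            records.append({
                "source": snippet["source"],
                "license": snippet["license"],
                "score": round(score, 3),
            })
    return records
```

A caller could block the suggestion when a match carries a non-permissive license, and simply log it when the license is permissive. Note that this check sits on the hot path of every suggestion, so it has to be fast.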

You actually do need to take this very seriously, because as a startup, similar to security, you will be expected to have industry leading indemnity clauses, or at least those that match what the big tech companies provide, to even be considered from a risk perspective. This would be dangerous to do unless you have built real confidence in this side of the solution.

None of these workstreams are necessarily “exciting” because from the end user’s perspective, the product is the same, but critical nevertheless.

Personalization

Thought that a generic system trained on a lot of publicly available data would do the trick for enterprises?

Most enterprises think that their work is special, and the reality is, even if their tech stacks are not that unique, they do have a lot of private data that would be highly relevant to an AI system that wants to generate more private data. For us, existing private codebases would be the most relevant information to create high quality results with minimal hallucinations, as they contain knowledge of existing libraries, semantics, best practices, and more.

You probably would not be surprised that security comes back again. You will not be able to process all of the raw private data on every inference, so there is likely some pre-processing of the data that you will have to perform, and you will have to persist that information somewhere. Well, this is where the issues with purely SaaS solutions come back. It’ll take a lot more effort, if it is even possible, to convince an enterprise customer to be ok with a startup having this kind of access to and control of their data, which limits the amount of personalization you can perform.

However, it is not just about securing their IP from external parties. Role-based access controls (RBAC) are doubly critical with AI applications because the AI tool itself could provide a pathway for broadly leaking data that can only be accessed by certain employees to the rest of the employee base. Again, this subtlety is new because generative AI is one of the first technologies that creates data that looks like the data that already exists. As an extreme example, it turns out that for government projects, individual pieces of data may be unclassified on their own but become classified when combined [1]. Personalization, and AI as a whole, has the power to consolidate information, but that should be treated carefully.
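One way to picture the RBAC concern is to apply the access check before any retrieved context reaches the model, rather than after generation. The sketch below is a simplified illustration under assumed names (`Chunk`, `retrieve_for_user`, group labels), with a toy keyword-overlap ranking standing in for a real retrieval system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    text: str
    allowed_groups: frozenset  # groups permitted to read the source document


def retrieve_for_user(chunks, user_groups, query_terms, limit=3):
    """Return the best-matching chunks that the user is entitled to see."""
    # Enforce access control BEFORE ranking, so restricted text never
    # becomes part of the prompt for a user who could not open the source.
    visible = [c for c in chunks if c.allowed_groups & user_groups]

    def score(chunk):
        # Toy relevance score: number of query terms present in the chunk.
        return len(set(chunk.text.lower().split()) & query_terms)

    ranked = sorted(visible, key=score, reverse=True)
    return [c for c in ranked if score(c) > 0][:limit]
```

The design choice worth noting is that the filter runs per request against the caller's groups. A shared index can still be built once, as long as each entry carries the permissions of the document it came from.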

And whenever you are planning to utilize private data, there are a number of considerations that come into play, especially for older enterprises. The importance is heightened with AI because the quality of data going in very strongly determines the quality of data produced. What is the age of the existing data? How relevant is the data to the current tasks at hand? What data sources are more important? How do you balance information from multiple data sources?

Personalization is an axis that every startup should exploit since it adds differentiated value that most larger competitors will be slower to roll out - these systems are challenging and a mistake here will easily lead to a massive PR nightmare for them. However, it should be clear that there is a lot of infrastructure to make this work, and work well.

Analytics & ROI Reporting

Analytics are not new for any enterprise software tool, but they are critical for generative AI applications given the outsized, inflated expectations that the market has created around this technology. We may be past the inflated expectations soon, but that does not change the fact that most potential customers don’t fully know exactly what to expect from generative AI, or how to quantify its value.

Proving ROI is perhaps the hardest problem. Even for something as specific as code generation, where the outputs of a generative AI tool can be verified (the code has to compile, run, and do the expected task), it is highly unclear what developer productivity even means. Is it pull request cycle time? Well maybe, but there are so many confounding variables there that it is hard to truly evaluate whether the individual tool was responsible for any change in that value. Is it the amount of code accepted? Well maybe that is a good proxy for value, but it becomes pretty apparent that half the code coming from AI does not mean the developer is twice as productive. I can imagine that this is even harder with applications such as marketing copy, where the outputs are not necessarily verifiable in the first place. I will be the first to admit that I don’t really have an answer here. Proving ROI is a very hard, elusive problem.

That being said, generative AI does benefit from the fact that most leaders do believe that the technology will add value, and trust their employees when they say that they feel more productive by using such tools. So, as a vendor, you should be thinking about how to enable your enterprise customers to progressively realize more value. For example, a good start would be to split up usage statistics by team. That way, an administrator could learn which groups are getting more value to glean best practices and which groups are getting less value and may need more enablement.
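Splitting usage by team can start very simply. The sketch below aggregates hypothetical usage events into a per-team acceptance rate. The event shape and the `acceptance_by_team` name are assumptions for the example, and acceptance rate is only a proxy, subject to the caveats about measuring productivity discussed above.

```python
from collections import defaultdict


def acceptance_by_team(events):
    """events: iterable of (team, suggestions_shown, suggestions_accepted)."""
    shown = defaultdict(int)
    accepted = defaultdict(int)
    for team, n_shown, n_accepted in events:
        shown[team] += n_shown
        accepted[team] += n_accepted
    # Skip teams with no suggestions shown to avoid dividing by zero.
    return {team: accepted[team] / shown[team]
            for team in shown if shown[team] > 0}
```

An administrator could sort the result to see which teams might share best practices and which might need more enablement.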

Latency

We talk about latency quite a bit in the Codeium blog, but latency constraints are so critical to generative AI applications because they directly influence things such as choice of models. For example, autocomplete for code generation needs to run in hundreds of milliseconds for a developer to even see the suggestions in the first place, but because of the autoregressive nature of LLMs, this eliminates the usage of all of the largest foundational models. No amount of quantization, speculative decoding, etc, will solve for a base model that is just too big.

And model inference is not the only source of latency. All of the personalization work? Any retrieval or reasoning before inference needs to fit in the latency budget. Sending data to a separate server? Factor in the network latency, especially if you are dealing with dense data such as video. Doing post-processing work on the model outputs such as checking against attribution filters? Add that to the list of systems that need to be low latency.
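The budget arithmetic is worth writing down explicitly. In the sketch below, every number is made up for illustration: a 300 ms end-to-end budget, fixed costs for retrieval, network, prompt processing, and attribution filtering, and a per-token decode time that grows with model size.

```python
def remaining_budget_ms(budget_ms, stages):
    """stages: dict of stage name -> estimated latency in milliseconds."""
    return budget_ms - sum(stages.values())


def max_tokens_within(budget_ms, stages, ms_per_token):
    """Tokens an autoregressive model can emit with the leftover budget."""
    left = remaining_budget_ms(budget_ms, stages)
    return max(0, int(left // ms_per_token))


# Hypothetical numbers, for illustration only.
stages = {"retrieval": 40, "network": 60, "prefill": 50, "attribution_filter": 10}
small_model = max_tokens_within(300, stages, ms_per_token=10)  # 140 ms left -> 14 tokens
large_model = max_tokens_within(300, stages, ms_per_token=35)  # 140 ms left -> 4 tokens
```

Under these assumed numbers, the larger model has room for only a handful of tokens, which is the sense in which a latency budget can rule out a model before quality is even considered.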

It turns out, unsurprisingly, that these are all software infrastructure problems, yet again. You can build the smartest system in the world, but if it does not run fast enough for what the workload and user demand, it really does not matter.

Scale

This one is a bit of a meta point that accentuates all of the challenges listed above. All of these simply become harder when you look at the scale of users/employees at these enterprises, the scale of the data that they have, and the complexities of their private infrastructure. We have customers that have tens of thousands of repositories, hundreds of millions of lines of code, and tens of thousands of developers. These will stretch every aspect of the system that you build.

Have you created a self-hosted system that indexes codebases for personalization? Cool, now what if the customer has tens of thousands of repositories - are they all actually helpful for personalization? How can the customer specify what is good and bad code? How do you manage updates to this indexing at this scale? How does this scale to tens of thousands of developers without impacting latency? How do you manage thousands of user groups and access controls?

I unfortunately don’t have the time or space to answer every one of these questions, but these are the kinds of questions to be asking yourself as you design and build your solution if you don’t want to be surprised and scrambling later.

Wrapping Up

In many ways, this article is an explanation for Codeium’s B2B success so far, an under-the-hood look at how we have thought through and rationalized the space. At the same time, and this might come as a surprise, I hope this is the one thesis where I am wrong.

I want to see startups be able to successfully take on big tech even in the B2C and tech-native B2B markets. I want to see enterprises of all forms eventually view AI as a reason to become more tech-native themselves. And selfishly, I want to see successful B2B companies like Codeium be viewed as the innovative AI companies with the best AI products, not just “the enterprise-ready solution.”

But provided that you want to make sustainable revenue with generative AI today as a new startup, I hope this helps! Be comfortable with being uncomfortable as an enterprise infrastructure native company.

Codeium Plug

swyx again. Just as in the last post, you can check out Codeium for Enterprises, but you should also watch the launch video for Codeium’s latest product, Windsurf. Yes, it is another VS Code Fork, but it is an Agentic IDE built with the principles Anshul and Varun have laid out in their time with us. We’ll be recording a followup discussion with them so questions and thoughts are welcome!

[1] swyx note: this is also called mosaic theory but is legal in finance
