CEOs of publicly traded companies are often in the news talking about their new AI initiatives, but few of them have built anything with it. Drew Houston from Dropbox is different; he has spent over 400 hours coding with LLMs in the last year and is now refocusing his 2,500+ employees around this new way of working, 17 years after founding the company.

Bottling up cognitive energy

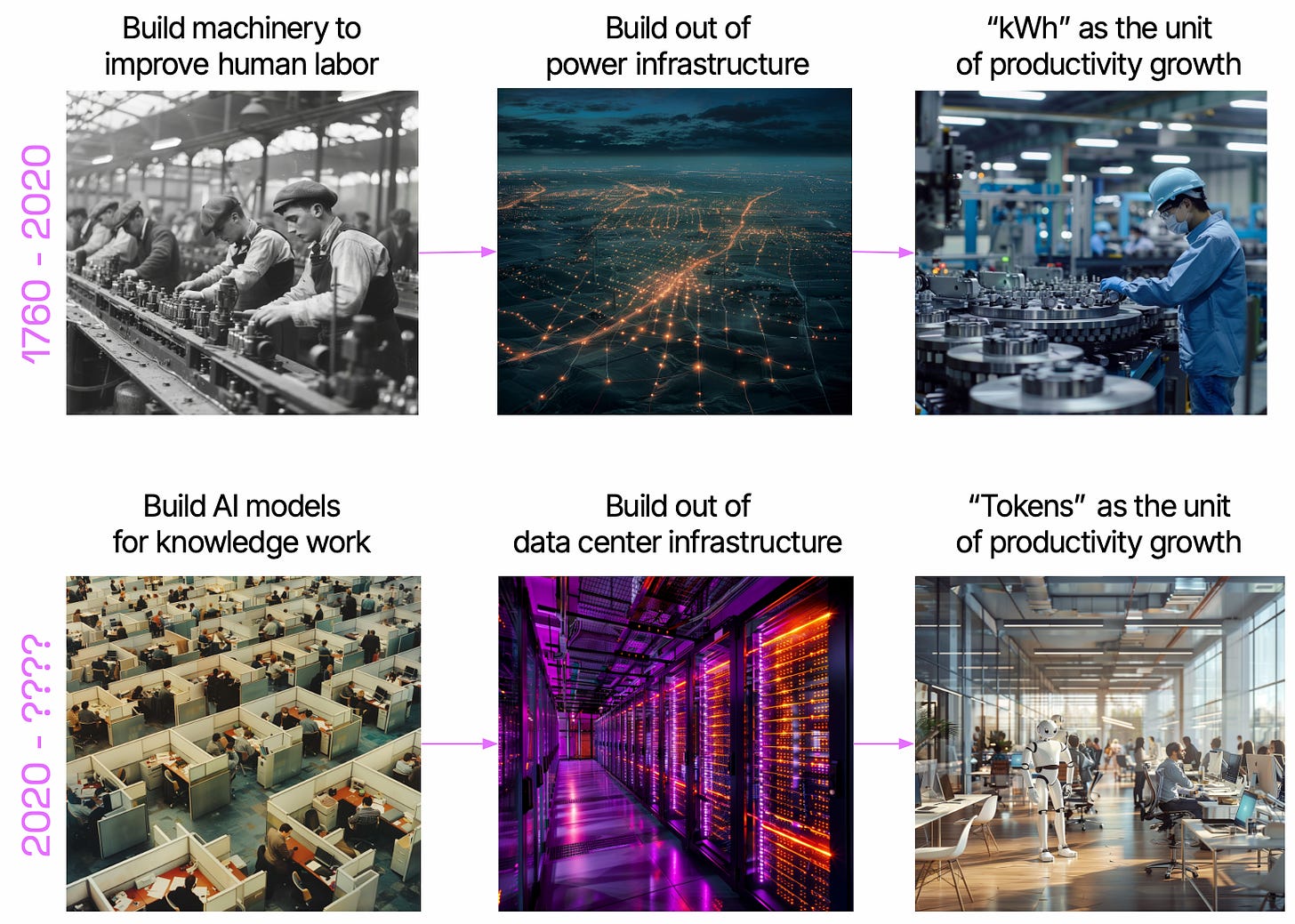

In our Winds of AI Winter episode we briefly talked about Sovereign AI and how AI is becoming the next driver of GDP growth for many countries. The last 300 years were driven by the kWh, the next 300 will be driven by the token:

Drew’s vision is to take this new source of “bottled up cognitive energy” and empower every human with a silicon brain that can replace a lot of the menial work that we do:

We talk a lot about like human versus machine being like substituting; it's like CPU, GPU, it's not like one is categorically better than the other, they're complements. Like if you have something really parallel, use a GPU, if not, use a CPU. […] And so I think we need to think about knowledge work in that context, like what are human brains good at? What's our silicon brain good at? Let's resegment the work. Let's offload all the stuff that can be automated. Let's go on a hunt for like anything that could save a human CPU cycle.

We have some early winners in this re-segmentation: writing code, summarizing content (see AINews), and customer support agents are three of the most widespread AI use cases, but they are all synchronous. We called agents the “next AI killer app” back in April 2023, but we have yet to see non-demo applications of them. Delegating to your silicon brain should feel more like handing off work to a colleague, and the shape of products will have to change accordingly.

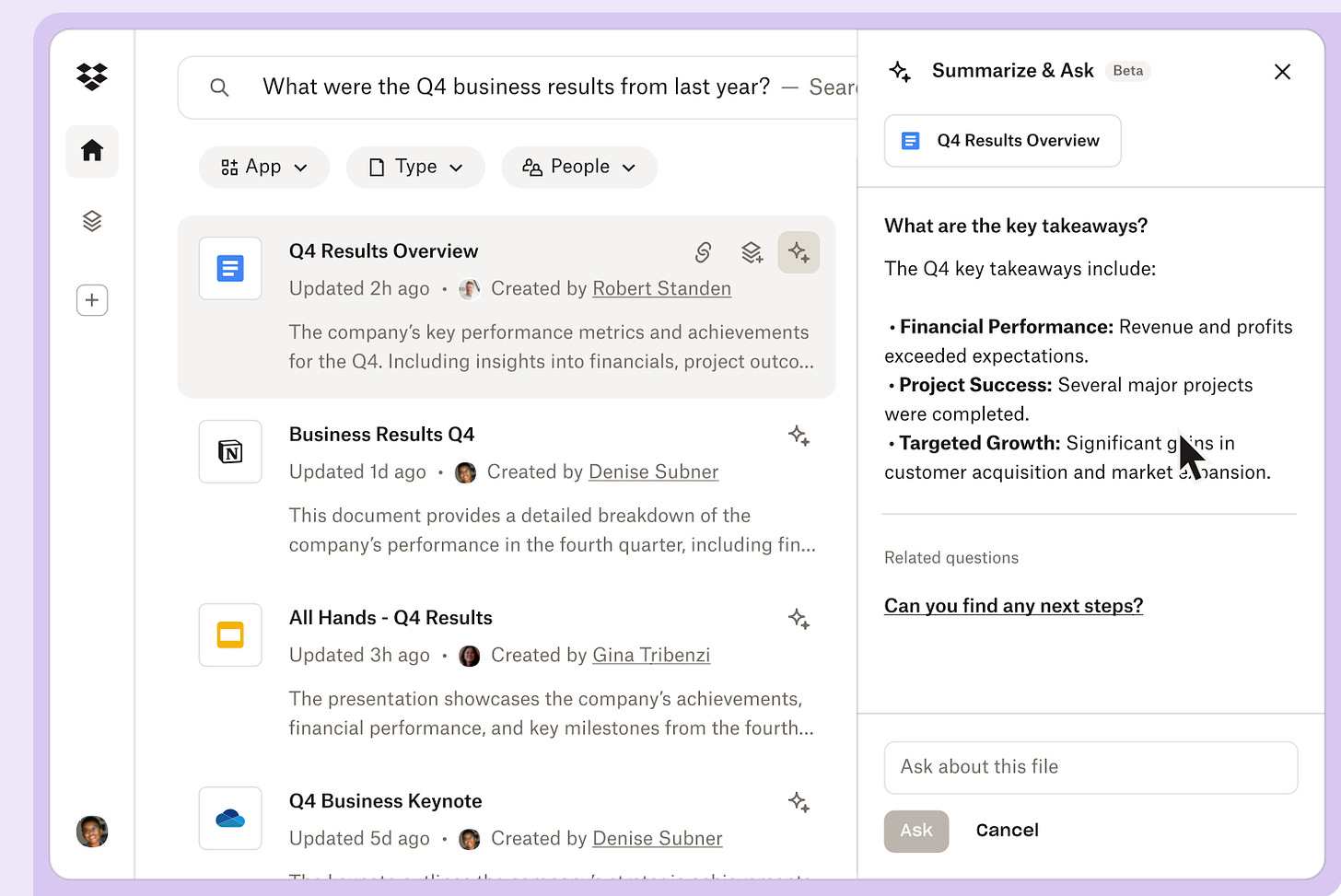

Dropbox is going from a company that syncs your files, to the place you go to work. You can connect Dash to all your applications as well as other storage providers like Google Drive, OneDrive, etc without having to move your files over, which is a big shift for a company that used to charge by the gigabyte of storage. They don’t want to own the file, but rather the interface to all of them. This might end up being one of the great examples of overcoming the Innovator’s Dilemma, and cannibalizing your legacy business to move on to the next chapter of the story.

Founder Mode

We really focus on AI Engineering on the podcast, but we also have a lot of founders who listen. Dropbox is one of the iconic companies of Silicon Valley and we spent the last ~20 minutes talking about his learnings from building Dropbox, growing from an engineer to being a public company CEO, how to build and coach a team, and why “Founder Mode” is basically a Rorschach test.

If I had to pick one takeaway, it’d be this:

The next chess move for them (i.e. the incumbents) is just like copy, bundle, kill. So they're going to copy your product. They'll bundle it with their platforms and they'll like give it away for free or no added cost. And so with AI, I think, you know, there's been a lot of focus on the large language model, but it's like large language models are a pretty bad business, […] models only have value if they're kind of on this like Pareto frontier of size and quality and cost. […] And then you have to like be like, alright, where's the value going to accrue in the stack or the value chain? And, you know, certainly at the bottom with Nvidia and the semiconductor companies, and then it's going to be at the top, like the people who have the customer relationship who have the application layer.

Greetings from our 2024 AI Pioneers Summit to wrap up :)

Show Notes

AI tools

Timestamps

00:00 Introductions

00:43 Drew's AI journey

04:14 Revalidating expectations of AI

08:23 Simulation in self-driving vs. knowledge work

12:14 Drew's AI Engineering setup

15:24 RAG vs. long context in AI models

18:06 From "FileGPT" to Dropbox AI

23:20 Is storage solved?

26:30 Products vs Features

30:48 Building trust for data access

33:42 Dropbox Dash and universal search

38:05 The evolution of Dropbox

42:39 Building a "silicon brain" for knowledge work

48:45 Open source AI and its impact

51:30 "Rent, Don't Buy" for AI

54:50 Staying relevant

58:57 Founder Mode

01:03:10 Advice for founders navigating AI

01:07:36 Building and managing teams in a growing company

Transcript

Alessio [00:00:00]: Hey everyone, welcome to the Latent Space podcast. This is Alessio, partner and CTO at Decibel Partners, and there's no Swyx today, but I'm joined by Drew Houston of Dropbox. Welcome, Drew.

Drew [00:00:14]: Thanks for having me.

Alessio [00:00:15]: So we're not going to talk about the Dropbox story. We're not going to talk about the Chinatown bus and the flash drive and all that. I think you've talked enough about it. Where I want to start is you as an AI engineer. So as you know, most of our audience is engineering folks, kind of like technology leaders. You obviously run Dropbox, which is a huge company, but you also do a lot of coding. I think that's how you spend almost 400 hours, just like coding. So let's start there. What was the first interaction you had with an LLM API and when did the journey start for you?

Drew [00:00:43]: Yeah. Well, I think probably all AI engineers or whatever you call an AI engineer, those people started out as engineers before that. So engineering is my first love. I mean, I grew up as a little kid. I was that kid. My first line of code was at five years old. I just really loved, I wanted to make computer games, like this whole path. That also led me into startups and eventually starting Dropbox. And then with AI specifically, I studied computer science, I got my, I did my undergrad, but I didn't do like grad level computer science. I didn't, I sort of got distracted by all the startup things, so I didn't do grad level work. But about several years ago, I made a couple of things. So one is I sort of, I knew I wanted to go from being an engineer to a founder. And then, but sort of the becoming a CEO part was sort of backed into the job. And so a couple of realizations. One is that, I mean, there's a lot of like repetitive and like manual work you have to do as an executive that is actually lends itself pretty well to automation, both for like my own convenience. And then out of interest in learning, I guess what we call like classical machine learning these days, I started really trying to wrap my head around understanding machine learning and informational retrieval more, more formally. So I'd say maybe 2016, 2017 started me writing these more successively, more elaborate scripts to like understand basic like classifiers and regression and, and again, like basic information retrieval and NLP back in those days. And there's sort of like two things that came out of that. One is techniques are super powerful. And even just like studying like old school machine learning was a pretty big inversion of the way I had learned engineering, right? You know, I started programming when everyone starts programming and you're, you're sort of the human, you're giving an algorithm to the, and spelling out to the computer how it should run it. And then machine learning, here's machine learning where it's like actually flip that, like give it sort of the answer you want and it'll figure out the algorithm, which was pretty mind bending. And it was both like pretty powerful when I would write tools, like figure out like time audits or like, where's my time going? Is this meeting a one-on-one or is it a recruiting thing or is it a product strategy thing? I started out doing that manually with my assistant, but then found that this was like a very like automatable task. And so, which also had the side effect of teaching me a lot about machine learning. But then there was this big problem, like anytime you, it was very good at like tabular structured data, but like anytime it hit, you know, the usual malformed English that humans speak, it would just like fall over. I had to kind of abandon a lot of the things that I wanted to build because like there's no way to like parse text. Like maybe it would sort of identify the part of speech in a sentence or something. But then fast forward to the LLM, I mean actually I started trying some of like this, what we would call like very small LLMs before kind of the GPT class models. And it was like super hard to get those things working. So like these 500 parameter models would just be like hallucinating and repeating and you know. So actually I'd kind of like written it off a little bit. But then the chat GPT launch and GPT-3 for sure. And then once people figured out like prompting and instruction tuning, this was sort of like November-ish 2022 like everybody else sort of that the chat GPT launch being the starting gun for the whole AI era of computing and then having API access to three and then early access to GPT-4. I was like, oh man, it's happening. And so I was literally on my honeymoon and we're like on a beach in Thailand and I'm like coding these like AI tools to automate like writing or to assist with writing and all these different use cases.

Alessio [00:04:14]: You're like, I'm never going back to work. I'm going to automate all of it before I get back.

Drew [00:04:17]: And I was just, you know, ever since then, I mean, I've always been like coding like prototypes and just stuff to make my life more convenient, but like escalated a lot after 22. And yeah, I spent, I checked, I think it was probably like over 400 hours this year so far coding because I had my paternity leave where I was able to work on some special projects. But yeah, it's a super important part of like my whole learning journey is like being really hands-on with these things. And I mean, it's probably not a typical recipe, but I really love to get down to the metal as far as how this stuff works.

Alessio [00:04:47]: Yeah. So Swyx and I were with Sam Altman in October 22. We were like at a hack day at OpenAI and that's why we started this podcast eventually. But you did an interview with Sam like seven years ago and he asked you what's the biggest opportunity in startups and you were like machine learning and AI and you were almost like too early, right? It's like maybe seven years ago, the models weren't quite there. How should people think about revalidating like expectations of this technology? You know, I think even today people will tell you, oh, models are not really good at X because they were not good 12 months ago, but they're good today.

Drew [00:05:19]: What's your project? Heuristics for thinking about that or how is, yeah, I think the way I look at it now is pretty, has evolved a lot since when I started. I mean, I think everybody intuitively starts with like, all right, let's try to predict the future or imagine like what's this great end state we're going to get to. And the tricky thing is like often those prognostications are right, but they're right in terms of direction, but not when. For example, you know, even in the early days of the internet, 90s when things were even like tech space and you know, even before like the browser or things like that, people were like, oh man, you're going to have, you know, you're going to be able to order food, get like a Snickers delivered to your house, you're going to be able to watch any movie ever created. And they were right. But they were like, you know, it took 20 years for that to actually happen. And before you got to DoorDash, you had to get, you started with like Webvan and Cosmo and before you get to Spotify, you had to do like Napster and Kazaa and LimeWire and like a bunch of like broken Britney Spears MP3s and malware. So I think the big lesson is being early is the same as being wrong. Being late is the same as being wrong. So really how do you calibrate timing? And then I think with AI, it's the same thing that people are like, oh, it's going to completely upend society and all these positive and negative ways. I think that's like most of those things are going to come true. The question is like, when is that going to happen? And then with AI specifically, I think there's also, in addition to sort of the general tech category or like jumping too fast to the future, I think that AI is particularly susceptible to that. And you look at self-driving, right? This idea of like, oh my God, you can have a self-driving car captured everybody's imaginations 10, 12 years ago. And you know, people are like, oh man, in two years, there's not going to be another year. There's not going to be a human driver on the road to be seen. It didn't work out that way, right? We're still 10, 12 years later where we're in a world where you can sort of sometimes get a Waymo in like one city on earth. Exciting, but just took a lot longer than people think. And the reason is there's a lot of engineering challenges, but then there's a lot of other like societal time constants that are hard to compress. So one thing I think you can learn from things like self-driving is they have these levels of autonomy that's a useful kind of framework in driving or these like maturity levels. People sort of skip to like level five, full autonomy, or we're going to have like an autonomous knowledge worker that's just going to take, that's going to, and then we won't need humans anymore kind of projection that that's going to take a long time. But then when you think about level one or level two, like these little assistive experiences, you know, we're seeing a lot of traction with those. So what you see really working is the level one autonomy in the AI world would be like the tab auto-complete and co-pilot, right? And then, you know, maybe a little higher is like the chatbot type interface. Obviously you want to get to the highest level you can to build a good product, but the reliability just isn't, and the capability just isn't there in the early innings. And so, and then you think of other level one, level two type things, like Google Maps probably did more for self-driving than in literal self-driving, like a billion people have like the ability to have like maps and navigation just like taken care of for you autonomously. So I think the timing and maturity are really important factors to include.

Alessio [00:08:23]: The thing with self-driving, maybe one of the big breakthroughs was like simulation. So it's like, okay, instead of driving, we can simulate these environments. It's really hard to do when knowledge work, you know, how do you simulate like a product review? How do you simulate these things? I'm curious if you've done any experiments. I know some companies have started to build kind of like a virtual personas that you can like bounce ideas off of.

Drew [00:08:42]: I mean, fortunately in a company you generate lots of, you know, actual human training data all the time. And then I also just like start with myself, like, all right, I can, you know, it's pretty tricky even within your company to be like, all right, let's open all this up as quote training data. But, you know, I can start with my own emails or my own calendar or own stuff without running into the same kind of like privacy or other concerns. So I often like start with my own stuff. And so that is like a one level of bootstrapping, but actually four or five years ago during COVID, we decided, you know, a lot of companies were thinking about how do we go back to work? And so we decided to really lean into remote and distributed work because I thought, you know, this is going to be the biggest change to the way we work in our lifetimes. And COVID kind of ripped up a bunch of things, but I think everybody was sort of pleasantly surprised how with a lot of knowledge work, you could just keep going. And actually you were sort of fine. Work was decoupled from your physical environment, from being in a physical place, which meant that things people had dreamed about since the fifties or sixties, like telework, like you actually could work from anywhere. And that was now possible. So we decided to really lean into that because we debated, should we sort of hit the fast forward button or should we hit the rewind button and go back to 2019? And obviously that's been playing out over the last few years. And we decided to basically turn, we went like 90% remote. We still, the in-person part's really important. We can kind of come back to our working model, but we're like, yeah, this is, everybody is going to be in some kind of like distributed or hybrid state. So like instead of like running away from this, like let's do a full send, let's really go into it. Let's live in the future. A few years before our customers, let's like turn Dropbox into a lab for distributed work. And we do that like quite literally, both of the working model and then increasingly with our products. And then absolutely, like we have products like Dropbox Dash, which is our universal search product. That was like very elevated in priority for me after COVID because like now you have, we're putting a lot more stress on the system and on our screens, it's a lot more chaotic and overwhelming. And so even just like getting the right information, the right person at the right time is a big fundamental challenge in knowledge work and these, in the distributed world, like big problem today is still getting, you know, has been getting bigger. And then for a lot of these other workflows, yeah, there's, we can both get a lot of natural like training data from just our own like strategy docs and processes. There's obviously a lot you can do with synthetic data and you know, actually like LMs are pretty good at being like imitating generic knowledge workers. So it's, it's kind of funny that way, but yeah, the way I look at it is like really turn Dropbox into a lab for distributed work. You think about things like what are the big problems we're going to have? It's just the complexity on our screens just keeps growing and the whole environment gets kind of more out of sync with what makes us like cognitively productive and engaged. And then even something like Dash was initially seeded, I made a little personal search engine because I was just like personally frustrated with not being able to find my stuff. And along that whole learning journey with AI, like the vector search or semantic search, things like that had just been the tooling for that. The open source stuff had finally gotten to a place where it was a pretty good developer experience. And so, you know, in a few days I had sort of a hello world type search engine and I'm like, oh my God, like this completely works. You don't even have to get the keywords right. The relevance and ranking is super good. We even like untuned. So I guess that's to say like I've been surprised by if you choose like the right algorithm and the right approach, you can actually get like super good results without having like a ton of data. And even with LLMs, you can apply all these other techniques to give them, kind of bootstrap kind of like task maturity pretty quickly.

Alessio [00:12:14]: Before we jump into Dash, let's talk about the Drew Haas and AI engineering stuff. So IDE, let's break that down. What IDE do you use? Do you use Cursor, VS Code, do you use any coding assistant, like WeChat, is it just autocomplete?

Drew [00:12:28]: Yeah, yeah. Both. So I use VS Code as like my daily driver, although I'm like super excited about things like Cursor or the AI agents. I have my own like stack underneath that. I mean, some off the shelf parts, some pretty custom. So I use the continue.dev just like AI chat UI basically as just the UI layer, but I also proxy the request. I proxy the request to my own backend, which is sort of like a router. You can use any backend. I mean, Sonnet 3.5 is probably the best all around. But then these things are like pretty limited if you don't give them the right context. And so part of what the proxy does is like there's a separate thing where I can say like include all these files by default with the request. And then it becomes a lot easier and like without like cutting and pasting. And I'm building mostly like prototype toy apps, so it's like a front end React thing and a Python backend thing. And so it can do these like end to end diffs basically. And then I also like love being able to host everything locally or do it offline. So I have my own, when I'm on a plane or something or where like you don't have access or the internet's not reliable, I actually bring a gaming laptop on the plane with me. It's like a little like blue briefcase looking thing. And then I like literally hook up a GPU like into one of the outlets. And then I have, I can do like transcription, I can do like autocomplete, like I have an 8 billion, like Llama will run fine.

Alessio [00:13:44]: And you're using like a Llama to run the model?

Drew [00:13:47]: No, I use, I have my own like LLM inference stack. I mean, it uses the backend somewhat interchangeable. So everything from like XLlama to VLLM or SGLang, there's a bunch of these different backends you can use. And then I started like working on stuff before all this tooling was like really available. So you know, over the last several years, I've built like my own like whole crazy environment and like in stack here. So I'm a little nuts about it.

Alessio [00:14:12]: Yeah. What's the state of the art for, I guess not state of the art, but like when it comes to like frameworks and things like that, do you like using them? I think maybe a lot of people say, hey, things change so quickly, they're like trying to abstract things. Yeah.

Drew [00:14:24]: It's maybe too early today. As much as I do a lot of coding, I have to be pretty surgical with my time. I don't have that much time, which means I have to sort of like scope my innovation to like very specific places or like my time. So for the front end, it'll be like a pretty vanilla stack, like a Next.js, React based thing. And then these are toy apps. So it's like Python, Flask, SQLite, and then all the different, there's a whole other thing on like the backend. Like how do you get, sort of run all these models locally or with a local GPU? The scaffolding on the front end is pretty straightforward, the scaffolding on the backend is pretty straightforward. Then a lot of it is just like the LLM inference and control over like fine grained aspects of how you do generation, caching, things like that. And then there's a lot, like a lot of the work is how do you take, sort of go to an IMAP, like take an email, get a new, or a document or a spreadsheet or any of these kinds of primitives that you work with and then translate them, render them in a format that an LLM can understand. So there's like a lot of work that goes into that too. Yeah.

Alessio [00:15:24]: So I built a kind of like email triage system and like I would say 80% of the code is like Google and like pulling emails and then the actual AI part is pretty easy.

Drew [00:15:34]: Yeah. And even, same experience. And then I tried to do all these like NLP things and then to my dismay, like a bunch of reg Xs were like, got you like 95% of the way there. So I still leave it running, I just haven't really built like the LLM powered version of it yet. Yeah.

Alessio [00:15:51]: So do you have any thoughts on rag versus long context, especially, I mean with Dropbox, you know? Sure. Do you just want to shove things in? Like have you seen that be a lot better?

Drew [00:15:59]: Well, they kind of have different strengths and weaknesses, so you need both for different use cases. I mean, it's been awesome in the last 12 months, like now you have these like long context models that can actually do a lot. You can put a book in, you know, Sonnet's context and then now with the later versions of LLAMA, you can have 128k context. So that's sort of the new normal, which is awesome and that, that wasn't even the case a year ago. That said, models don't always use, and certainly like local models don't use the full context well fully yet, and actually if you provide too much irrelevant context, the quality degrades a lot. And so I say in the open source world, like we're still just getting to the cusp of like the full context is usable. And then of course, like when you're something like Dropbox Dash, like it's basically building this whole like brain that's like read everything your company's ever written. And so that's not going to fit into your context window, so you need rag just as a practical reality. And even for a lot of similar reasons, you need like RAM and hard disk in conventional computer architecture. And I think these things will keep like horse trading, like maybe if, you know, a million or 10 million is the new, tokens is the new context length, maybe that shifts. Maybe the bigger picture is like, it's super exciting to talk about the LLM and like that piece of the puzzle, but there's this whole other scaffolding of more conventional like retrieval or conventional machine learning, especially because you have to scale up products to like millions of people you do in your toy app is not going to scale to that from a cost or latency or performance standpoint. So I think you really need these like hybrid architectures that where you have very like purpose fit tools, or you're probably not using Sonnet 3.5 for all of your normal product use cases. You're going to use like a fine tuned 8 billion model or sort of the minimum model that gets you the right output. And then a smaller model also is like a lot more cost and latency versus like much better characteristics on that front.

Alessio [00:17:48]: Yeah. Let's jump into the Dropbox AI story. So sure. Your initial prototype was Files GPT. How did it start? And then how did you communicate that internally? You know, I know you have a pretty strong like mammal culture. One where you're like, okay, Hey, we got to really take this seriously.

Drew [00:18:06]: Yeah. Well, on the latter, it was, so how do we say like how we took Dropbox, how AI seriously as a company started kind of around that time, that honeymoon time, unfortunately. In January, I wrote this like memo to the company, like around basically like how we need to play offense in 23. And that most of the time the kind of concrete is set and like the winners are the winners and things are kind of frozen. But then with these new eras of computing, like the PC or the internet or the phone or the concrete on freezes and you can sort of build, do things differently and have a new set of winners. It's sort of like a new season starts as a result of a lot of that sort of personal hacking and just like thinking about this. I'm like, yeah, this is an inflection point in the industry. Like we really need to change how we think about our strategy. And then becoming an AI first company was probably the headline thing that we did. And then, and then that got, and then calling on everybody in the company to really think about in your world, how is AI going to reshape your workflows or what sort of the AI native way of thinking about your job. File GPT, which is sort of this Dropbox AI kind of initial concept that actually came from our engineering team as, you know, as we like called on everybody, like really think about what we should be doing that's new or different. So it was kind of organic and bottoms up like a bunch of engineers just kind of hacked that together. And then that materialized as basically when you preview a file on Dropbox, you can have kind of the most straightforward possible integration of AI, which is a good thing. Like basically you have a long PDF, you want to be able to ask questions of it. So like a pretty basic implementation of RAG and being able to do that when you preview a file on Dropbox. So that was the origin of that, that was like back in 2023 when we released just like the starting engines had just, you know, gotten going.

Alessio [00:19:53]: It's funny where you're basically like these files that people have, they really don't want them in a way, you know, like you're storing all these files and like you actually don't want to interact with them. You want a layer on top of it. And that's kind of what also takes you to Dash eventually, which is like, Hey, you actually don't really care where the file is. You just want to be the place that aggregates it. How do you think about what people will know about files? You know, are files the actual file? Are files like the metadata and they're just kind of like a pointer that goes somewhere and you don't really care where it is?

Drew [00:20:21]: Yeah.

Alessio [00:20:22]: Any thoughts about?

Drew [00:20:23]: Totally. Yeah. I mean, there's a lot of potential complexity in that question, right? Is it a, you know, what's the difference between a file and a URL? And you can go into the technicals, it's like pass by value, pass by reference. Okay. What's the format like? All right. So it starts with a primitive. It's not really a flat file. It's like a structured data. You're sort of collaborative. Yeah. That's keeping in sync. Blah, blah, blah. I actually don't start there at all. I just start with like, what do people, like, what do humans, let's work back from like how humans think about this stuff or how they should think about this stuff. Meaning like, I don't think about, Oh, here are my files and here are my links or cloud docs. I'm just sort of like, Oh, here's my stuff. This, this, here's sort of my documents. Here's my media. Here's my projects. Here are the people I'm working with. So it starts from primitives more like those, like how do people, how do humans think about these things? And then, then start from like a more ideal experience. Because if you think about it, we kind of have this situation that will look like particularly medieval in hindsight where, all right, how do you manage your work stuff? Well, on all, you know, on one side of your screen, you have this file browser that literally hasn't changed since the early eighties, right? You could take someone from the original Mac and sit them in front of like a computer and they'd be like, this is it. And that's, it's been 40 years, right? Then on the other side of your screen, you have like Chrome or a browser that has so many tabs open, you can no longer see text or titles. This is the state of the art for how we manage stuff at work. Interestingly, neither of those experiences was purpose-built to be like the home for your work stuff or even anything related to it. And so it's important to remember, we get like stuck in these local maxima pretty often in tech where we're obviously aware that files are not going away, especially in certain domains. So that format really matters and where files are still going to be the tool you use for like if there's something big, right? If you're a big video file, that kind of format in a file makes sense. There's a bunch of industries where it's like construction or architecture or sort of these domain specific areas, you know, media generally, if you're making music or photos or video, that all kind of fits in the big file zone where Dropbox is really strong and that's like what customers love us for. It's also pretty obvious that a lot of stuff that used to be in, you know, Word docs or Excel files, like all that has tilted towards the browser and that tilt is going to continue. So with Dash, we wanted to make something that was really like cloud-native, AI-native and deliberately like not be tied down to the abstractions of the file system. Now on the other hand, it would be like ironic and bad if we then like fractured the experience that you're like, well, if it touches a file, it's a syncing metaphor to this app. And if it's a URL, it's like this completely different interface. So there's a convergence that I think makes sense over time. But you know, but I think you have to start from like, not so much the technology, start from like, what do the humans want? And then like, what's the idealized product experience? And then like, what are the technical underpinnings of that, that can make that good experience?

Alessio [00:23:20]: I think it's kind of intuitive that in Dash, you can connect Google Drive, right? Because you think about Dropbox, it's like, well, it's file storage, you really don't want people to store files somewhere, but the reality is that they do. How do you think about the importance of storage and like, do you kind of feel storage is like almost solved, where it's like, hey, you can kind of store these files anywhere, what matters is like access.

Drew [00:23:38]: It's a little bit nuanced in that if you're dealing with like large quantities of data, it actually does matter. The implementation matters a lot or like you're dealing with like, you know, 10 gig video files like that, then you sort of inherit all the problems of sync and have to go into a lot of the challenges that we've solved. Switching on a pretty important question, like what is the value we provide? What does Dropbox do? And probably like most people, I would have said like, well, Dropbox syncs your files. And we didn't even really have a mission of the company in the beginning. I'm just like, yeah, I just don't want to carry a thumb driving around and life would be a lot better if our stuff just like lived in the cloud and I just didn't have to think about like, what device is the thing on or what operating, why are these operating systems fighting with each other and incompatible? You know, I just want to abstract all of that away. But then so we thought, even we were like, all right, Dropbox provides storage. But when we talked to our customers, they're like, that's not how we see this at all. Like actually, Dropbox is not just like a hard drive in the cloud. It's like the place where I go to work or it's a place like I started a small business is a place where my dreams come true. Or it's like, yeah, it's not keeping files in sync. It's keeping people in sync. It's keeping my team in sync. And so they're using this kind of language where we're like, wait, okay, yeah, because I don't know, storage probably is a commodity or what we do is a commodity. But then we talked to our customers like, no, we're not buying the storage, we're buying like the ability to access all of our stuff in one place. We're buying the ability to share everything and sort of, in a lot of ways, people are buying the ability to work from anywhere. And Dropbox was kind of, the fact that it was like file syncing was an implementation detail of this higher order need that they had. So I think that's where we start too, which is like, what is the sort of higher order thing, the job the customer is hiring Dropbox to do? Storage in the new world is kind of incidental to that. I mean, it still matters for things like video or those kinds of workflows. The value of Dropbox had never been, we provide you like the cheapest bits in the cloud. But it is a big pivot from Dropbox is the company that syncs your files to now where we're going is Dropbox is the company that kind of helps you organize all your cloud content. I started the company because I kept forgetting my thumb drive. But the question I was really asking was like, why is it so hard to like find my stuff, organize my stuff, share my stuff, keep my stuff safe? You know, I'm always like one washing machine and I would leave like my little thumb drive with all my prior company stuff on in the pocket of my shorts and then almost wash it and destroy it. And so I was like, why do we have to, this is like medieval that we have to think about this. So that same mindset is how I approach where we're going. But I think, and then unfortunately the, we're sort of back to the same problems. Like it's really hard to find my stuff. It's really hard to organize myself. It's hard to share my stuff. It's hard to secure my content at work. Now the problem is the same, the shape of the problem and the shape of the solution is pretty different. You know, instead of a hundred files on your desktop, it's now a hundred tabs in your browser, et cetera. But I think that's the starting point.

Alessio [00:26:30]: How has the idea of a product evolved for you? So, you know, famously Steve Jobs started by Dropbox and he's like, you know, this is just a feature. It's not a product. And then you build like a $10 billion feature. How in the age of AI, how do you think about, you know, maybe things that used to be a product are now features because the AI on top of it, it's like the product, like what's your mental model? Do you think about it?

Drew [00:26:50]: Yeah. So I don't think there's really like a bright line. I don't know if like I use the word features and products and my mental model that much of how I break it down because it's kind of a, it's a good question. I mean, I don't not think about features, I don't think about products, but it does start from that place of like, all right, we have all these new colors we can paint with and all right, what are these higher order needs that are sort of evergreen, right? So people will always have stuff at work. They're always need to be able to find it or, you know, all the verbs I just mentioned. It's like, okay, how can we make like a better painting and how can we, and then how can we use some of these new colors? And then, yeah, it's like pretty clear that after the large models, the way you find stuff share stuff, it's going to be completely different after COVID, it's going to be completely different. So that's the starting point. But I think it is also important to, you know, you have to do more than just work back from the customer and like what they're trying to do. Like you have to think about, and you know, we've, we've learned a lot of this the hard way sometimes. Okay. You might start with a customer. You might start with a job to be on there. You're like, all right, what's the solution to their problem? Or like, can we build the best product that solves that problem? Right. Like what's the best way to find your stuff in the modern world? Like, well, yeah, right now the status quo for the vast majority of the billion, billion knowledge workers is they have like 10 search boxes at work that each search 10% of your stuff. Like that's clearly broken. Obviously you should just have like one search box. All right. So we can do that. And that also has to be like, I'll come back to defensibility in a second, but like, can we build the right solution that is like meaningfully better from the status quo? Like, yes, clearly. Okay. Then can we like get distribution and growth? Like that's sort of the next thing you learned is as a founder, you start with like, what's the product? What's the product? What's the product? Then you're like, wait, wait, we need distribution and we need a business model. So those are the next kind of two dominoes you have to knock down or sort of needles you have to thread at the same time. So all right, how do we grow? I mean, if Dropbox 1.0 is really this like self-serve viral model that there's a lot of, we sort of took a borrowed from a lot of the consumer internet playbook and like what Facebook and social media were doing and then translated that to sort of the business world. How do you get distribution, especially as a startup? And then a business model, like, all right, storage happened to be something in the beginning happened to be something people were willing to pay for. They recognize that, you know, okay, if I don't buy something like Dropbox, I'm going to have to buy an external hard drive. I'm going to have to buy a thumb drive and I have to pay for something one way or another. People are already paying for things like backup. So we felt good about that. But then the last domino is like defensibility. Okay. So you build this product or you get the business model, but then, you know, what do you do when the incumbents, the next chess move for them is I just like copy, bundle, kill. So they're going to copy your product. They'll bundle it with their platforms and they'll like give it away for free or no added cost. And, you know, we had a lot of, you know, scar tissue from being on the wrong side of that. Now you don't need to solve all four for all four or five variables or whatever at once or you can sort of have, you know, some flexibility. But the more of those gates that you get through, you sort of add a 10 X to your valuation. And so with AI, I think, you know, there's been a lot of focus on the large language model, but it's like large language models are a pretty bad business from a, you know, you sort of take off your tech lens and just sort of business lens. Like there's sort of this weirdly self-commoditizing thing where, you know, models only have value if they're kind of on this like Pareto frontier of size and quality and cost. Being number two, you know, if you're not on that frontier, the second the frontier moves out, which it moves out every week, like your model literally has zero economic value because it's dominated by the new thing. LLMs generate output that can be used to train or improve. So there's weird, peculiar things that are specific to the large language model. And then you have to like be like, all right, where's the value going to accrue in the stack or the value chain? And, you know, certainly at the bottom with Nvidia and the semiconductor companies, and then it's going to be at the top, like the people who have the customer relationship who have the application layer. Those are a few of the like lenses that I look at a question like that through.

Alessio [00:30:48]: Do you think AI is making people more careful about sharing the data at all? People are like, oh, data is important, but it's like, whatever, I'm just throwing it out there. Now everybody's like, but are you going to train on my data? And like your data is actually not that good to train on anyway. But like how have you seen, especially customers, like think about what to put in, what to not?

Drew [00:31:06]: I mean, everybody should be. Well, everybody is concerned about this and nobody should be concerned about this, right? Because nobody wants their personal companies information to be kind of ground up into little pellets to like sell you ads or train the next foundation model. I think it's like massively top of mind for every one of our customers, like, and me personally, and with my Dropbox hat on, it's like so fundamental. And, you know, we had experience with this too at Dropbox 1.0, the same kind of resistance, like, wait, I'm going to take my stuff on my hard drive and put it on your server somewhere. Are you serious? What could possibly go wrong? And you know, before that, I was like, wait, are you going to sell me, I'm going to put my credit card number into this website? And before that, I was like, hey, I'm going to take all my cash and put it in a bank instead of under my mattress. You know, so there's a long history of like tech and comfort. So in some sense, AI is kind of another round of the same thing, but the issues are real. And then when I think about like defensibility for Dropbox, like that's actually a big advantage that we have is one, our incentives are very aligned with our customers, right? We only get, we only make money if you pay us and you only pay us if we do a good job. So we don't have any like side hustle, you know, we're not training the next foundation model. You know, we're not trying to sell you ads. Actually we're not even trying to lock you into an ecosystem, like the whole point of Dropbox is it works, you know, everywhere. Because I think one of the big questions we've circling around is sort of like, in the world of AI, where should our lane be? Like every startup has to ask, or in every big company has to ask, like, where can we really win? But to me, it was like a lot of the like trust advantages, platform agnostic, having like a very clean business model, not having these other incentives. And then we also are like super transparent. We were transparent early on. We're like, all right, we're going to establish these AI principles, very table stakes stuff of like, here's transparency. We want to give people control. We want to cover privacy, safety, bias, like fairness, all these things. And we put that out up front to put some sort of explicit guardrails out where like, hey, we're, you know, because everybody wants like a trusted partner as they sort of go into the wild world of AI. And then, you know, you also see people cutting corners and, you know, or just there's a lot of uncertainty or, you know, moving the pieces around after the fact, which no one feels good about.

Alessio [00:33:14]: I mean, I would say the last 10, 15 years, the race was kind of being the system of record, being the storage provider. I think today it's almost like, hey, if I can use Dash to like access my Google Drive file, why would I pay Google for like their AI feature? So like vice versa, you know, if I can connect my Dropbook storage to this other AI assistant, how do you kind of think about that, about, you know, not being able to capture all the value and how open people will stay? I think today things are still pretty open, but I'm curious if you think things will get more closed or like more open later.

Drew [00:33:42]: Yeah. Well, I think you have to get the value exchange right. And I think you have to be like a trustworthy partner or like no one's going to partner with you if they think you're going to eat their lunch, right? Or if you're going to disintermediate them and like all the companies are quite sophisticated with how they think about that. So we try to, like, we know that's going to be the reality. So we're actually not trying to eat anyone's like Google Drive's lunch or anything. Actually we'll like integrate with Google Drive, we'll integrate with OneDrive, really any of the content platforms, even if they compete with file syncing. So that's actually a big strategic shift. We're not really reliant on being like the store of record and there are pros and cons to this decision. But if you think about it, we're basically like providing all these apps more engagement. We're like helping users do what they're really trying to do, which is to get, you know, that Google Doc or whatever. And we're not trying to be like, oh, by the way, use this other thing. This is all part of our like brand reputation. It's like, no, we give people freedom to use whatever tools or operating system they want. We're not taking anything away from our partners. We're actually like making it, making their thing more useful or routing people to those things. I mean, on the margin, then we have something like, well, okay, to the extent you do rag and summarize things, maybe that doesn't generate a click. Okay. You know, we also know there's like infinity investment going into like the work agents. So we're not really building like a co-pilot or Gemini competitor. Not because we don't like those. We don't find that thing like captivating. Yeah, of course. But just like, you know, you learn after some time in this business that like, yeah, there's some places that are just going to be such kind of red oceans or just like super big battlefields. Everybody's kind of trying to solve the same problem and they just start duplicating all each other effort. And then meanwhile, you know, I think the concern would be is like, well, there's all these other problems that aren't being properly addressed by AI. And I was concerned that like, yeah, and everybody's like fixated on the agent or the chatbot interface, but forgetting that like, hey guys, like we have the opportunity to like really fix search or build a self-organizing Dropbox or environment or there's all these other things that can be a compliment. Because we don't really want our customers to be thinking like, well, do I use Dash or do I use co-pilot? And frankly, none of them do. In a lot of ways, actually, some of the things that we do on the security front with Dash for Business are a good compliment to co-pilot. Because as part of Dash for Business, we actually give admins, IT, like universal visibility and control over all the different, what's being shared in your company across all these different platforms. And as a precondition to installing something like co-pilot or Dash or Glean or any of these other things, right? You know, IT wants to know like, hey, before we like turn all the lights in here, like let's do a little cleaning first before we let everybody in. And there just haven't been good tools to do that. And post AI, you would do it completely differently. And so that's like a big, that's a cornerstone of what we do and what sets us apart from these tools. And actually, in a lot of cases, we will help those tools be adopted because we actually help them do it safely. Yeah.

Alessio [00:36:27]: How do you think about building for AI versus people? It's like when you mentioned cleaning up is because maybe before you were like, well, humans can have some common sense when they look at data on what to pick versus models are just kind of like ingesting. Do you think about building products differently, knowing that a lot of the data will actually be consumed by LLMs and like agents and whatnot versus like just people?

Drew [00:36:46]: I think it'll always be, I aim a little bit more for like, you know, level three, level four kind of automation, because even if the LLM is like capable of completely autonomously organizing your environment, it probably would do a reasonable job. But like, I think you build bad UI when the sort of user has to fit itself to the computer versus something that you're, you know, it's like an instrument you're playing or something where you have some kind of good partnership. And you know, and on the other side, you don't have to do all this like manual effort. And so like the command line was sort of subsumed by like, you know, graphical UI. We'll keep toggling back and forth. Maybe chat will be, chat will be an increasing, especially when you bring in voice, like will be an increasing part of the puzzle. But I don't think we're going to go back to like a million command lines either. And then as far as like the sort of plumbing of like, well, is this going to be consumed by an LLM or a human? Like fortunately, like you don't really have to design it that differently. I mean, you have to make sure everything's legible to the LLM, but it's like quite tolerant of, you know, malformed everything. And actually the more, the easier it makes something to read for a human, the easier it is for an LLM to read to some extent as well. But we really think about what's that kind of right, how do we build that right, like human machine interface where you're still in control and driving, but then it's super easy to translate your intent into like the, you know, however you want your folder, setting your environment set up or like your preferences.

Alessio [00:38:05]: What's the most underrated thing about Dropbox that maybe people don't appreciate?

Drew [00:38:09]: Well, I think this is just such a natural evolution for us. It's pretty true. Like when people think about the world of AI, file syncing is not like the next thing you would auto complete mentally. And I think we also did like our first thing so well that there were a lot of benefits to that. But I think there also are like, we hit it so hard with our first product that it was like pretty tough to come up with a sequel. And we had a bit of a sophomore slump and you know, I think actually a lot of kids do use Dropbox through in high school or things like that, but you know, they're not, they're using, they're a lot more in the browser and then their file system, right. And we know all this, but still like we're super well positioned to like help a new generation of people with these fundamental problems and these like that affect, you know, a billion knowledge workers around just finding, organizing, sharing your stuff and keeping it safe. And there's, there's a ton of unsolved problems in those four verbs. We've talked about search a little bit, but just even think about like a whole new generation of people like growing up without the ability to like organize their things and yeah, search is great. And if you just have like a giant infinite pile of stuff, then search does make that more manageable. But you know, you do lose some things that were pretty helpful in prior decades, right? So even just the idea of persistence, stuff still being there when you come back, like when I go to sleep and wake up, my physical papers are still on my desk. When I reboot my computer, the files are still on my hard drive. But then when in my browser, like if my operating system updates the wrong way and closes the browser or if I just more commonly just declared tab bankruptcy, it's like your whole workspace just clears itself out and starts from zero. And you're like, on what planet is this a good idea? There's no like concept of like, oh, here's the stuff I was working on. Yeah, let me get back to it. And so that's like a big motivation for things like Dash. Huge problems with sharing, right? If I'm remodeling my house or if I'm getting ready for a board meeting, you know, what do I do if I have a Google doc and an air table and a 10 gig 4k video? There's no collection that holds mixed format things. And so it's another kind of hidden problem, hidden in plain sight, like he's missing primitives. Files have folders, songs have playlists, links have, you know, there's no, somehow we miss that. And so we're building that with stacks in Dash where it's like a mixed format, smart collection that you can then, you know, just share whatever you need internally, externally and have it be like a really well designed experience and platform agnostic and not tying you to any one ecosystem. We're super excited about that. You know, we talked a little bit about security in the modern world, like IT signs all these compliance documents, but in reality has no way of knowing where anything is or what's being shared. It's actually better for them to not know about it than to know about it and not be able to do anything about it. And when we talked to customers, we found that there were like literally people in IT whose jobs it is to like manually go through, log into each, like log into office, log into workspace, log into each tool and like go comb through one by one the links that people have shared and like unshares. There's like an unshare guy in all these companies and that that job is probably about as fun as it sounds like, my God. So there's, you know, fortunately, I guess what makes technology a good business is for every problem it solves, it like creates a new one, so there's always like a sequel that you need. And so, you know, I think the happy version of our Act 2 is kind of similar to Netflix. I look at a lot of these companies that really had multiple acts and Netflix had the vision to be streaming from the beginning, but broadband and everything wasn't ready for it. So they started by mailing you DVDs, but then went to streaming and then, but the value probably the whole time was just like, let me press play on something I want to see. And they did a really good job about bringing people along from the DVD mailing off. You would think like, oh, the DVD mailing piece is like this burning platform or it's like legacy, you know, ankle weight. And they did have some false starts in that transition. But when you really think about it, they were able to take that DVD mailing audience, move, like migrate them to streaming and actually bootstrap a, you know, take their season one people and bootstrap a victory in season two, because they already had, you know, they weren't starting from scratch. And like both of those worlds were like super easy to sort of forget and be like, oh, it's all kind of destiny. But like, no, that was like an incredibly competitive environment. And Netflix did a great job of like activating their Act 1 advantages and winning in Act 2 because of it. So I don't think people see Dropbox that way. I think people are sort of thinking about us just in terms of our Act 1 and they're like, yeah, Dropbox is fine. I used to use it 10 years ago. But like, what have they done for me lately? And I don't blame them. So fortunately, we have like better and better answers to that question every year.

Alessio [00:42:39]: And you call it like the silicon brain. So you see like Dash and Stacks being like the silicon brain interface, basically for

Drew [00:42:46]: people. I mean, that's part of it. Yeah. And writ large, I mean, I think what's so exciting about AI and everybody's got their own kind of take on it, but if you like really zoom out civilizationally and like what allows humans to make progress and, you know, what sort of is above the fold in terms of what's really mattered. I certainly want to, I mean, there are a lot of points, but some that come to mind like you think about things like the industrial revolution, like before that, like mechanical energy, like the only way you could get it was like by your own hands, maybe an animal, maybe some like clever sort of machines or machines made of like wood or something. But you were quite like energy limited. And then suddenly, you know, the industrial revolution, things like electricity, it suddenly is like, all right, mechanical energy is now available on demand as a very fungible kind of, and then suddenly we consume a lot more of it. And then the standard of living goes way, way, way, way up. That's been pretty limited to the physical realm. And then I believe that the large models, that's really the first time we can kind of bottle up cognitive energy and offloaded, you know, if we started by offloading a lot of our mechanical or physical busy work to machines that freed us up to make a lot of progress in other areas. But then with AI and computing, we're like, now we can offload a lot more of our cognitive busy work to machines. And then we can create a lot more of it. Price of it goes way down. Importantly, like, it's not like humans never did anything physical again. It's sort of like, no, but we're more leveraged. We can move a lot more earth with a bulldozer than a shovel. And so that's like what is at the most fundamental level, what's so exciting to me about AI. And so what's the silicon brain? It's like, well, we have our human brains and then we're going to have this other like half of our brain that's sort of coming online, like our silicon brain. And it's not like one or the other. They complement each other. They have very complimentary strengths and weaknesses. And that's, that's a good thing. There's also this weird tangent we've gone on as a species to like where knowledge work, knowledge workers have this like epidemic of, of burnout, great resignation, quiet quitting. And there's a lot going on there. But I think that's one of the biggest problems we have is that be like, people deserve like meaningful work and, you know, can't solve all of it. But like, and at least in knowledge work, there's a lot of own goals, you know, enforced errors that we're doing where it's like, you know, on one side with brain science, like we know what makes us like productive and fortunately it's also what makes us engaged. It's like when we can focus or when we're some kind of flow state, but then we go to work and then increasingly going to work is like going to a screen and you're like, if you wanted to design an environment that made it impossible to ever get into a flow state or ever be able to focus, like what we have is that. And that was the thing that just like seven, eight years ago just blew my mind. I'm just like, I cannot understand why like knowledge work is so jacked up on this adventure. It's like, we, we put ourselves in like the most cognitively polluted environment possible and we put so much more stress on the system when we're working remotely and things like that. And you know, all of these problems are just like going in the wrong direction. And I just, I just couldn't understand why this was like a problem that wasn't fixing itself. And I'm like, maybe there's something Dropbox can do with this and you know, things like Dash are the first step. But then, well, so like what, well, I mean, now like, well, why are humans in this like polluted state? It's like, well, we're just, all of the tools we have today, like this generation of tools just passes on all of the weight, the burden to the human, right? So it's like, here's a bajillion, you know, 80,000 unread emails, cool. Here's 25 unread Slack channels. Here's, we all get started like, it's like jittery like thinking about it. And then you look at that, you're like, wait, I'm looking at my phone, it says like 80,000 unread things. There's like no question, product question for which this is the right answer. Fortunately, that's why things like our silicon brain are pretty helpful because like they can serve as like an attention filter where it's like, actually, computers have no problem reading a million things. Humans can't do that, but computers can. And to some extent, this was already happening with computer, you know, Excel is an aversion of your silicon brain or, you know, you could draw the line arbitrarily. But with larger models, like now so many of these little subtasks and tasks we do at work can be like fully automated. And I think, you know, I think it's like an important metaphor to me because it mirrors a lot of what we saw with computing, computer architecture generally. It's like we started out with the CPU, very general purpose, then GPU came along much better at these like parallel computations. We talk a lot about like human versus machine being like substituting, it's like CPU, GPU, it's not like one is categorically better than the other, they're complements. Like if you have something really parallel, use a GPU, if not, use a CPU. The whole relationship, that symbiosis between CPU and GPU has obviously evolved a lot since, you know, playing Quake 2 or something. But right now we have like the human CPU doing a lot of, you know, silicon CPU tasks. And so you really have to like redesign the work thoughtfully such that, you know, probably not that different from how it's evolved in computer architecture, where the CPU is sort of an orchestrator of these really like heavy lifting GPU tasks. That dividing line does shift a little bit, you know, with every generation. And so I think we need to think about knowledge work in that context, like what are human brains good at? What's our silicon brain good at? Let's resegment the work. Let's offload all the stuff that can be automated. Let's go on a hunt for like anything that could save a human CPU cycle. Let's give it to the silicon one. And so I think we're at the early earnings of actually being able to do something about it.

Alessio [00:48:00]: It's funny, I gave a talk to a few government people earlier this year with a similar point where we used to make machines to release human labor. And then the kilowatt hour was kind of like the unit for a lot of countries. And now you're doing the same thing with the brain and the data centers are kind of computational power plants, you know, they're kind of on demand tokens. You're on the board of Meta, which is the number one donor of Flops for the open source world. The thing about open source AI is like the model can be open source, but you need to carry a briefcase to actually maybe run a model that is not even that good compared to some of the big ones. How do you think about some of the differences in the open source ethos with like traditional software where it's like really easy to run and act on it versus like models where it's like it might be open source, but like I'm kind of limited, sort of can do with it?

Drew [00:48:45]: Yeah, well, I think with every new era of computing, there's sort of a tug of war between is this going to be like an open one or a closed one? And, you know, there's pros and cons to both. It's not like open is always better or open always wins. But, you know, I think you look at how the mobile, like the PC era and the Internet era started out being more on the open side, like it's very modular. Everybody sort of party that everybody could, you know, come to some downsides of that security. But I think, you know, the advent of AI, I think there's a real question, like given the capital intensity of what it takes to train these foundation models, like are we going to live in a world where oligopoly or cartel or all, you know, there's a few companies that have the keys and we're all just like paying them rent. You know, that's one future. Or is it going to be more open and accessible? And I'm like super happy with how that's just I find it exciting on many levels with all the different hats I wear about it. You know, fortunately, you've seen in real life, yeah, even if people aren't bringing GPUs on a plane or something, you've seen like the price performance of these models improve 10 or 100x year over year, which is sort of like many Moore's laws compounded together for a bunch of reasons like that wouldn't have happened without open source. Right. You know, for a lot of same reasons, it's probably better that we can anyone can sort of spin up a website without having to buy an internet information server license like there was some alternative future. So like things are Linux and really good. And there was a good balance of trade to where like people contribute their code and then also benefit from the community returning the favor. I mean, you're seeing that with open source. So you wouldn't see all this like, you know, this flourishing of research and of just sort of the democratization of access to compute without open source. And so I think it's been like phenomenally successful in terms of just moving the ball forward and pretty much anything you care about, I believe, even like safety. You can have a lot more eyes on it and transparency instead of just something is happening. And there was three places with nuclear power plants attached to them. Right. So I think it's it's been awesome to see. And then and again, for like wearing my Dropbox hat, like anybody who's like scaling a service to millions of people, again, I'm probably not using like frontier models for every request. It's, you know, there are a lot of different configurations, mostly with smaller models. And even before you even talk about getting on the device, like, you know, you need this whole kind of constellation of different options. So open source has been great for that.

Alessio [00:51:06]: And you were one of the first companies in the cloud repatriation. You kind of brought back all the storage into your own data centers. Where are we in the AI wave for that? I don't think people really care today to bring the models in-house. Like, do you think people will care in the future? Like, especially as you have more small models that you want to control more of the economics? Or are the tokens so subsidized that like it just doesn't matter? It's more like a principle. Yeah. Yeah.

Drew [00:51:30]: I mean, I think there's another one where like thinking about the future is a lot easier if you start with the past. So, I mean, there's definitely this like big surge in demand as like there's sort of this FOMO driven bubble of like all of big tech taking their headings and shipping them to Jensen for a couple of years. And then you're like, all right, well, first of all, we've seen this kind of thing before. And in the late 90s with like Fiber, you know, this huge race to like own the internet, own the information superhighway, literally, and then way overbuilt. And then there was this like crash. I don't know to what extent, like maybe it is really different this time. Or, you know, maybe if we create AGI that will sort of solve the rest of the, or we'll just have a different set of things to worry about. But, you know, the simplest way I think about it is like this is sort of a rent not buy phase because, you know, I wouldn't want to be, we're still so early in the maturity, you know, I wouldn't want to be buying like pallets of over like of 286s at a 5x markup when like the 386 and 486 and Pentium and everything are like clearly coming there around the corner. And again, because of open source, there's just been a lot more competition at every layer in the stack. And so product developers are basically beneficiaries of that. You know, the things we can do with the sort of cost estimates I was looking at a year or two ago to like provide different capabilities in the product, you know, cut, right, you know, slashing by 10, 100, 1000x. I think about coming back around. I mean, I think, you know, at some point you have to believe that the sort of supply and demand will even out as it always does. And then there's also like non-NVIDIA stacks like the Grok or Cerebris or some of these custom silicon companies that are super interesting and outperformed NVIDIA stack in terms of latency and things like that. So I guess it'd be a pretty exciting change. I think we're not close to the point where we were with like hard drives or storage when we sort of went back from the public cloud because like there it was like, yeah, the cost curves are super predictable. We know what the cost of a hard drive and a server and, you know, terabyte of bandwidth and all the inputs are going to just keep going down, riding down this cost curve. But to like rely on the public cloud to pass that along is sort of, we need a better strategy than like relying on the kindness of strangers. So we decided to bring that in house and still do, and we still get a lot of advantages. That said, like the public cloud is like scaled and been like a lot more reliable and just good all around than we would have predicted because actually back then we were worried like, is the public cloud going to even scale fast enough to where to keep up with us? But yeah, I think we're in the early innings. It's a little too chaotic right now. So I think renting and not sort of preserving agility is pretty important in times like these. Yeah.

Alessio [00:54:01]: We just went to the Cerebrus factory to do an episode there. We saw one of their data centers inside. Yeah. It's kind of like, okay, if this really works, you know, it kind of changes everything.

Drew [00:54:13]: And that is one of the things there, like this is one where you could just have these things that just like, okay, there's just like a new kind of piece on the chessboard, like recalc everything. So I think there's still, I mean, this is like not that likely, but I think this is an area where it actually could, you could have these sort of like, you know, and out of nowhere, all of a sudden, you know, everything's different. Yeah.

Alessio [00:54:33]: I know one of the management books he references, Ending Growth's, I'm only the paranoid survive.

Drew [00:54:37]: Yeah.

Alessio [00:54:37]: Maybe if you look at Intel, they did a great job memory to chip, but then it's like maybe CPU to GPU, they kind of missed that thing. Yeah. How do you think about staying relevant for so long now? It's been 17 years you've been doing Dropbox.

Drew [00:54:50]: What's the secret?

Alessio [00:54:50]: And maybe we can touch on founder mode and all of that. Yeah.

Drew [00:54:55]: Well, first, what makes tech exciting and also makes it hard is like, there's no standing still, right? And your customers never are like, oh no, we're good now. They always want more just, and then the ground is shifting under you or it's like, oh yeah, well, files are not even that relevant to the modern. I mean, it's still important, but like, you know, so much is tilted elsewhere. So I think you have to like always be moving and think about on the one level, like what is, and thinking of these different layers of abstraction, like, well, yeah, the technical service we provide is file syncing and storage in the past, but in the future it's going to be different. The way Netflix had to look at, well, technically we mail people physical DVDs and fulfillment centers, and then we have to switch like streaming and codex and bandwidth and data centers. So you, you, you do have to think about that level, but then it's like our, what's the evergreen problem we're solving is an important problem. Can we build the best product? Can we get distribution? Can we get a business model? Can we defend ourselves when we get copied? And then having like some context of like history has always been like one of the reading about the history, not just in tech, but of business or government or sports or military, these things that seem like totally new, you know, and to me would have been like totally new as a 25 year old, like, oh my God, the world's completely different and everything's going to change. You're like, well, there's not a lot of great things about getting older, but you do see like, well, no, this actually has like a million like precedents and you can actually learn a lot from, you know, about like the future of GPUs from like, I don't know how, you know, how formula one teams work or you can draw all these like weird analogies that are super helpful in guiding you from first principles or through a combination of first principles and like past context. But like, you know, build shit we're really proud of. Like, that's a pretty important first step and really think about like, you sort of become blind to like how technology works as that's just the way it works. And even something like carrying a thumb drive, you're like, well, I'd much rather have a thumb drive than like literally not have my stuff or like have to carry a big external hard drive around. So you're always thinking like, oh, this is awesome. Like I ripped CDs and these like MP3s and these files and folders. This is the best. But then you miss on the other side. You're like, this isn't the end, right? MP3s and folders. It's like an Apple comes along. It's like, this is dumb. You should have like a catalog, artists, playlists, you know, that Spotify is like, Hey, this is dumb. Like you should, why are you buying these things? All the cards, it's the internet. You should have access to everything. And then by the way, why is this like such a single player experience? You should be able to share and they should have, there should be AI curated, et cetera, et cetera. And then a lot of it is also just like drawing, connecting dots between different disciplines, right? So a lot of what we did to make Dropbox successful is like we took a lot of the consumer internet playbook, applied it to business software from a virality and kind of ease of use standpoints. And then, you know, I think there's a lot of, you can draw from the consumer realm and what's worked there and that hasn't been ported over to business, right? So a lot of what we think about is like, yeah, when you sign into Netflix or Spotify or YouTube or any consumer experience, like what do you see? Well, you don't see like a bunch of titles starting with AA, right? You see like this whole, and it went on evolution, right? Like we talked about music and TV went through the same thing, like 10 channels over the air broadcast to 30 channels, a hundred channels, but that's something like a thousand channels. You're like, this has totally lost the plot. So we're sort of in the thousand channels era of productivity tools, which is like, wait, wait, we just need to like rethink the system here and we don't need another thousand channels. We need to redesign the whole experience. And so I think the consumer experiences that are like smart, you know, when you sign into Netflix, it's not like a thousand channels. It's like, here are a bunch of smart defaults. Even if you're a new signup, we don't know anything about you, but because of what the world is watching, here are some, you know, reasonable suggestions. And then it's like, okay, I watched drive to survive. I didn't watch squid game. You know, the next time I sign in, it's like a complete, it's a learning system, right? So a combination of design, machine learning, and just like the courage to like rethink the whole thing. I think that's, that's a pretty reliable recipe. And then you think you're like, all right, there's all that intelligence in the consumer experience. There's no filing things away. Everything's, there's all this sort of auto curated for you and sort of self optimizing. Then you go to work and you're like, there's not even an attempt to incorporate any intelligence or organization anywhere in this experience. And so like, okay, can we do something about that?

Alessio [00:58:57]: You know, you're one of the last founder CEOs, like you would talk, then you're like, Toby Lute, some of these folks.

Drew [00:59:03]: How, how does that change? I'm like 300 years old and why can't I be a founder CEO?

Alessio [00:59:07]: I was saying like when you run, when you run a company, like you've had multiple executives over the years, like how important is that for the founder to be CEO and just say, Hey, look, we're changing the way the company and the strategy works. It's like, we're really taking this seriously versus like you could be a public CEO and be like, Hey, I got my earnings call and like whatever, I just need to focus on getting the right numbers. Like how does that change the culture in the company? Yeah.