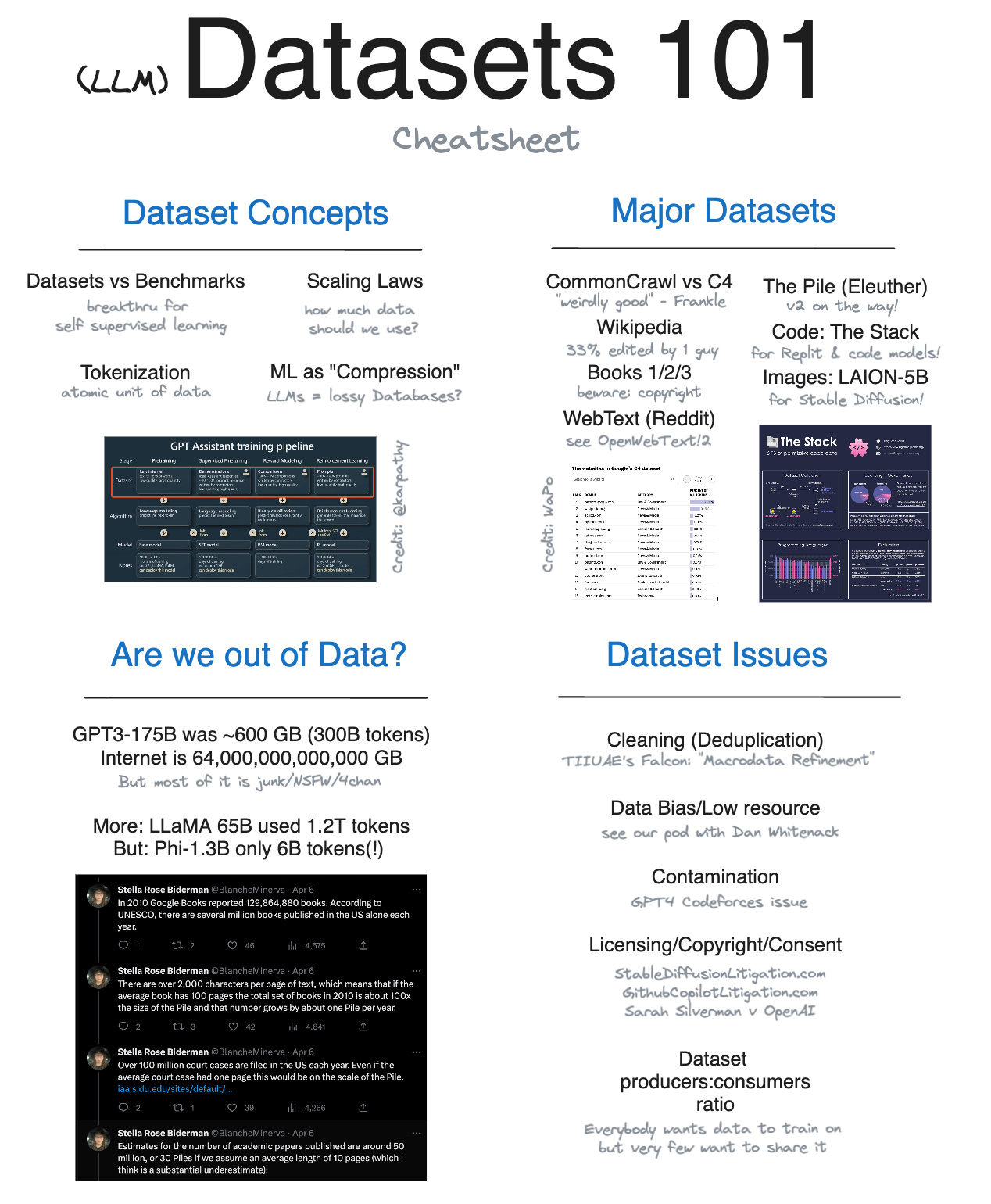

In April, we released our first AI Fundamentals episode: Benchmarks 101. We covered the history of benchmarks, why they exist, how they are structured, and how they influence the development of artificial intelligence.

Today we are (finally!) releasing Datasets 101! We’re really enjoying doing this series despite the work it takes - please let us know what else you want us to cover!

Stop me if you’ve heard this before: “GPT3 was trained on the entire Internet”.

Blatantly, demonstrably untrue: the GPT3 dataset is a little over 600GB, primarily on Wikipedia, Books corpuses, WebText and 2016-2019 CommonCrawl. The Macbook Air I am typing this on has more free disk space than that. In contrast, the “entire internet” is estimated to be 64 zetabytes, or 64 trillion GB. So it’s more accurate to say that GPT3 is trained on 0.0000000001% of the Internet.

Why spend $5m1 on GPU time training on $50 worth2 of data?

Simple: Garbage in, garbage out. No matter how good your algorithms, no matter how much money/compute you have, your model quality is strongly determined by the data you train it on3 and research scientists think we just don’t need or have that much high quality data. We spend an enormous amount of effort throwing out data to keep the quality high, and recently Web 2.0-era UGC platforms like StackOverflow, Reddit, and Twitter clamped down on APIs as they realize the goldmines they sit on.

Data is the new new oil. Time for a primer!

Show Notes

Our 2 months worth of podcast prep notes!

Datasets

Audio:

LibriSpeech: A dataset of audio recordings of audiobooks

CommonVoice: A dataset of audio recordings of people speaking different languages

Voxforge: A dataset of audio recordings of people speaking different languages

Switchboard: A dataset of audio recordings of telephone conversations

Fisher Corpus: A dataset of audio recordings of news broadcasts

Chinese:

Copyright & Privacy:

Deduplication

Contamination

Estimates of GPT3-quality (reported at 3640 petaflop/s-days, or 31.4 million petaflops) training cost vary since research is messy. Estimates go has high as $5-$50m, and it definitely has come down over time to potentially as low as $100k as we learned from our pod with Mosaic.

Based on going rate of a 500GB hard drive. I know disk storage and data value isn’t the same thing but bear with me…

Although I do have to acknowledge that Language Models are Few Shot Learners that generalize outside of tasks they were specifically finetuned for, still there are limits, for example there will always be a knowledge cutoff…

Share this post