Thanks to the over 1m people who have checked out The Rise of the AI Engineer. It’s a long July 4 weekend in the US, and we’re celebrating with a podcast feed swap!

We’ve been big fans of Nathan Labenz and Erik Torenberg’s work at the Cognitive Revolution podcast for a while. The show started around the same time as we did and has done an incredible job of hosting discussions with top researchers and thinkers in the field, across a wide range of topics: computer vision (a special focus thanks to Nathan’s work at Waymark), GPT-4 (with exceptional insight due to Nathan’s time on the GPT-4 “red team”), healthcare/medicine/biotech (Harvard Medical School, Med-PaLM, Tanishq Abraham, Neal Khosla), investing and tech strategy (Sarah Guo, Elad Gil, Emad Mostaque, Sam Lessin), safety and policy, curators and influencers, and exceptional AI founders (Josh Browder, Eugenia Kuyda, Flo Crivello, Suhail Doshi, Jungwon Byun, Raza Habib, Mahmoud Felfel, Andrew Feldman, Matt Welsh, Anton Troynikov, Aravind Srinivas).

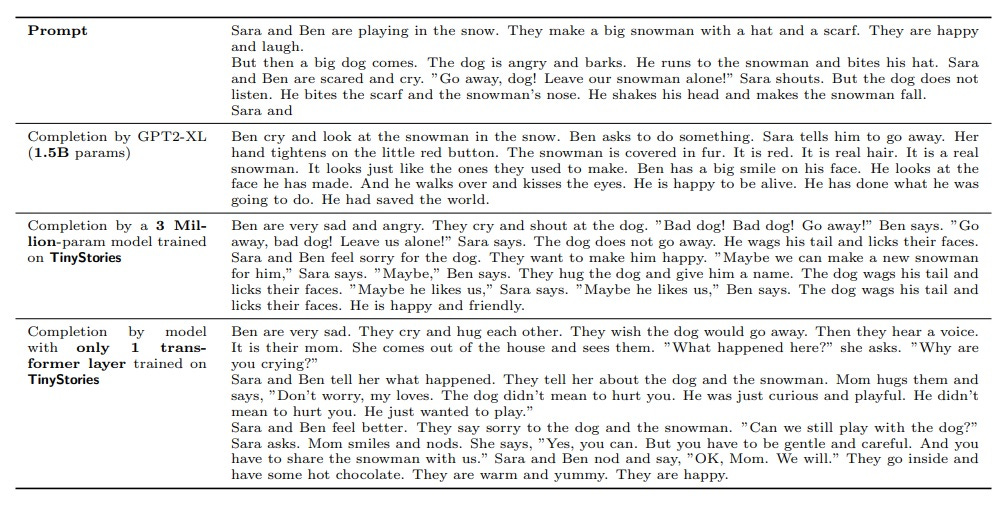

If Latent Space is for AI Engineers, then Cognitive Revolution covers the much broader field of AI in tech, business and society at large, with a longer runtime to go deep on research papers like TinyStories. We hope you love this episode as much as we do, and check out CogRev wherever fine podcasts are sold!


Good Data is All You Need

The work of Ronen and Yuanzhi echoes a broader theme emerging in the midgame of 2023:

Falcon-40B (trained on 1T tokens) outperformed LLaMA-65B (trained on 1.4T tokens), primarily thanks to the RefinedWeb dataset, which is built by running CommonCrawl through extensive preprocessing and cleaning in the MacroData Refinement[1] pipeline.

UC Berkeley LMSYS’s Vicuna-13B is near GPT-3.5/Bard quality at a tenth of their size, thanks to fine-tuning on 70k user-highlighted ChatGPT conversations (the user selection indicating some amount of quality).

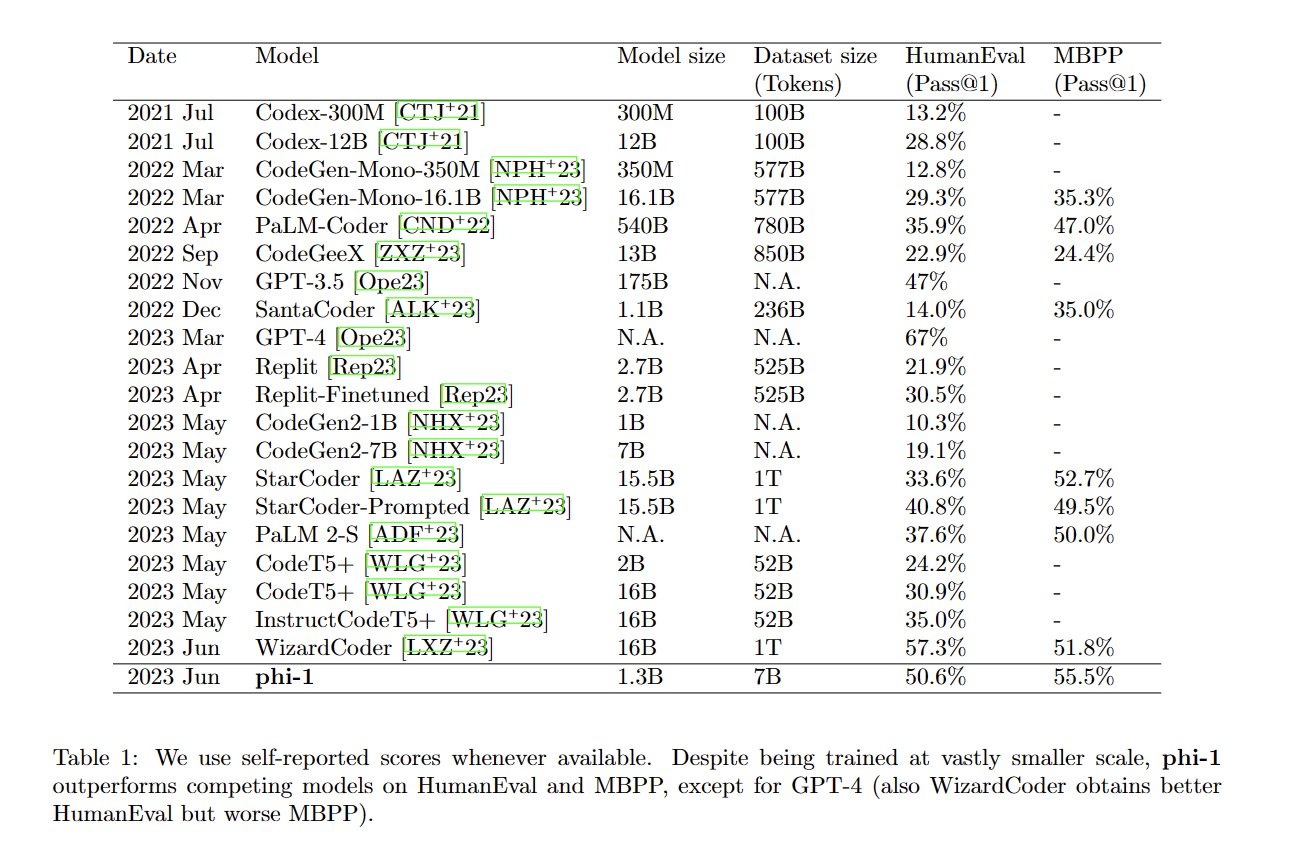

Replit’s finetuned 2.7B model outperforms the 12B OpenAI Codex model on HumanEval, thanks to high-quality data from Replit users.

The path to smaller models leans on better data (and tokenization!), whether from cleaning, from user feedback, or from synthetic data generation, i.e. finetuning on high-quality outputs from larger models. TinyStories and Phi-1 are the strongest new entries in that line of work, and we hope you’ll pick through the show notes to read up further.

Show Notes

TinyStories (Apr 2023)

Paper: TinyStories: How Small Can Language Models Be and Still Speak Coherent English?

Internal presentation with Sebastien Bubeck at MSR

Twitter thread from Ronen Eldan

Will future LLMs be based almost entirely on synthetic training data? In a new paper, we introduce TinyStories, a dataset of short stories generated by GPT-3.5&4. We use it to train tiny LMs (< 10M params) that produce fluent stories and exhibit reasoning.

Phi-1 (Jun 2023)

Paper: Textbooks are all you need (HN discussion)

Twitter announcement from Sebastien Bubeck:

phi-1 achieves 51% on HumanEval w. only 1.3B parameters & 7B tokens training dataset and 8 A100s x 4 days = 800 A100-hours. Any other >50% HumanEval model is >1000x bigger (e.g., WizardCoder from last week is 10x in model size and 100x in dataset size).
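For the curious, the compute figure in that announcement is easy to sanity check. Here is a minimal back-of-the-envelope sketch, assuming all 8 GPUs ran around the clock for the full 4 days (our assumption, the tweet does not say) and using variable names of our own. The tokens-per-parameter line compares the quoted dataset size to the quoted model size; it says nothing about how many passes over the data were made.

```python
# Back-of-the-envelope check of the figures quoted in the phi-1 announcement.
# Assumption (ours): every GPU was busy 24 hours a day for the whole run.
NUM_GPUS = 8           # A100s, as quoted
TRAINING_DAYS = 4      # as quoted
HOURS_PER_DAY = 24

gpu_hours = NUM_GPUS * TRAINING_DAYS * HOURS_PER_DAY

# Dataset tokens per model parameter, from the quoted 7B tokens and 1.3B params.
DATASET_TOKENS = 7e9
MODEL_PARAMS = 1.3e9
tokens_per_param = DATASET_TOKENS / MODEL_PARAMS

print(f"{gpu_hours} A100-hours")
print(f"{tokens_per_param:.1f} dataset tokens per parameter")
```

Under those assumptions the product comes to 768 A100-hours, which the announcement rounds to 800.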

[1] No, not that one, but kudos if you got the reference.
