We are hosting the AI World’s Fair in San Francisco on June 8th! You can RSVP here. Come meet fellow builders, see amazing AI tech showcases at different booths around the venue, all mixed with elements of traditional fairs: live music, drinks, games, and food! We are also at Amplitude’s AI x Product Hackathon and are hosting our first joint Latent Space + Practical AI Podcast Listener Meetup next month!

We are honored by the rave reviews for our last episode with MosaicML! They are also welcome on Apple Podcasts and Twitter/HN/LinkedIn/Mastodon etc!

We recently spent a wonderful week with Itamar Friedman, visiting all the way from Tel Aviv in Israel:

We first recorded a podcast (releasing with this newsletter) covering Codium AI, the hot new VSCode/Jetbrains IDE extension focused on test generation for Python and JS/TS, with plans for a Code Integrity Agent.

Then we attended Agent Weekend, where the founders of multiple AI/agent projects1 got together with a presentation from Toran Bruce Richards on Auto-GPT’s roadmap and then from Itamar on Codium’s roadmap

Then some of us stayed to take part in the NextGen Hackathon and won first place with the new AI Maintainer project.

So… that makes it really hard to recap everything for you. But we’ll try!

Podcast: Codium: Code Integrity with Zero Bugs

When it launched in 2021, there was a lot of skepticism around Github Copilot.

Fast forward to 2023, and 40% of all code is checked in unmodified from Copilot2.

Codium3 burst on the scene this year, emerging from stealth with an $11m seed, their own foundation model (TestGPT-1) and a vision to revolutionize coding by 2025.

You might have heard of "DRY” programming (Don’t Repeat Yourself), which aims to replace repetition with abstraction. Itamar came on the pod to discuss their “extreme DRY” vision: if you already spent time writing a spec, why repeat yourself by writing the code for it? If the spec is thorough enough, automated agents could write the whole thing for you.

Live Demo Video Section

This is referenced in the podcast about 6 minutes in.

Timestamps, show notes, and transcript are below the fold. We would really appreciate if you shared our pod with friends on Twitter, LinkedIn, Mastodon, Bluesky, or your social media poison of choice!

Auto-GPT: A Roadmap To The Future of Work

Making his first public appearance, Toran (perhaps better known as @SigGravitas on GitHub) presented at Agents Weekend:

Lightly edited notes for those who want a summary of the talk:

What is AutoGPT?

AutoGPT is an Al agent that utilizes a Large Language Model to drive its actions and decisions. It can be best described as a user sitting at a computer, planning and interacting with the system based on its goals. Unlike traditional LLM applications, AutoGPT does not require repeated prompting by a human. Instead, it generates its own 'thoughts', criticizes its own strategy and decides what next actions to take.

AutoGPT was released on GitHub in March 2023, and went viral on April 1 with a video showing automatic code generation. 2 months later it has 132k+ stars, is the 29th highest ranked open-source project of all-time, a thriving community of 37.5k+ Discord members, 1M+ downloads.

What’s next for AutoGPT? The initial release required users to know how to build and run a codebase. They recently announced plans for a web/desktop UI and mobile app to enable nontechnical/everyday users to use AutoGPT. They are also working on an extensible plugin ecosystem called the Abilities Hub also targeted at nontechnical users.

Improving Efficacy. AutoGPT has many well documented cases where it trips up. Getting stuck in loops, using <placeholders> instead of actual content in

commands, and making obvious mistakes like

execute_code("writea cookbook"'. The plan is a new design called Challenge Driven Development - Challenges are goal-orientated tasks or problems thatAuto-GPT has difficulty solving or has not yet been able to accomplish. These may include improving specific functionalities, enhancing the model's understanding of specific domains, or even developing new features that the current version of Auto-GPT lacks. (AI Maintainer was born out of one such challenge). Itamar compared this with Software 1.0 (Test Driven Development), and Software 2.0 (Dataset Driven Development).

Self-Improvement. Auto-GPT will analyze its own codebase and contribute to its own improvement. AI Safety (aka not-kill-everyone-ists) people like Connor Leahy might freak out at this, but for what it’s worth we were pleasantly surprised to learn that Itamar and many other folks on the Auto-GPT team are equally concerned and mindful about x-risk as well.

The overwhelming theme of Auto-GPT’s roadmap was accessibility - making AI Agents usable by all instead of the few.

Podcast Timestamps

[00:00:00] Introductions

[00:01:30] Itamar’s background and previous startups

[00:03:30] Vision for Codium AI: reaching “zero bugs”

[00:06:00] Demo of Codium AI and how it works

[00:15:30] Building on VS Code vs JetBrains

[00:22:30] Future of software development and the role of developers

[00:27:00] The vision of integrating natural language, testing, and code

[00:30:00] Benchmarking AI models and choosing the right models for different tasks

[00:39:00] Codium AI spec generation and editing

[00:43:30] Reconciling differences in languages between specs, tests, and code

[00:52:30] The Israeli tech scene and startup culture

[01:03:00] Lightning Round

Show Notes

TDD (Test-Driven Development)

AST (Abstract Syntax Tree)

Transcript

Alessio: [00:00:00] Hey everyone. Welcome to the Latent Space podcast. This is Alessio, Partner and CTO-in-Residence at Decibel Partners. I'm joined by my co-host, Swyx, writer and editor of Latent Space.

Swyx: Today we have a special guest, Tamar Friedman, all the way from Tel Aviv, CEO and co-founder of Codium AI. Welcome.

Itamar: Hey, great being here. Thank you for inviting me.

Swyx: You like the studio? It's nice, right?

Itamar: Yeah, they're awesome.

Swyx: So I'm gonna introduce your background a little bit and then we'll learn a bit more about who you are. So you graduated from Teknion Israel Institute of Technology's kind of like the MIT of of Israel. You did a BS in CS, and then you also did a Master's in Computer Vision, which is kind of relevant.

You had other startups before this, but your sort of claim to fame is Visualead, which you started in 2011 and got acquired by Alibaba Group You showed me your website, which is the sort of QR codes with different forms of visibility. And in China that's a huge, huge deal. It's starting to become a bigger deal in the west. My favorite anecdote that you told me was something about how much sales use you saved or something. I forget what the number was.

Itamar: Generally speaking, like there's a lot of peer-to-peer transactions going on, like payments and, and China with QR codes. So basically if for example 5% of the scanning does not work and with our scanner we [00:01:30] reduce it to 4%, that's a lot of money. Could be tens of millions of dollars a day.

Swyx: And at the scale of Alibaba, it serves all of China. It's crazy. You did that for seven years and you're in Alibaba until 2021 when you took some time off and then hooked up with Debbie, who you've known for 25 years, to start Codium AI and you just raised your $11 million seed rounds with TlB Partners and Vine. Congrats. Should we go right into Codium? What is Codium?

Itamar: So we are an AI coding assistant / agent to help developers reaching zero bugs. We don't do that today. Right now, we help to reduce the amount of bugs. Actually you can see people commenting on our marketplace page saying that they found bugs with our tool, and that's like our premise. Our vision is like for Tesla zero emission or something like that, for us it's zero bugs.

We started with building an IDE extension either in VS Code or in JetBrains. And that actually works alongside the main panel where you write your code and I can show later what we do is analyze the code, whether you started writing it or you completed it.

Like you can go both TDD (Test-Driven Development) or classical coding. And we offer analysis, tests, whether they pass or not, we further self debug [00:03:00] them and make suggestions eventually helping to improve the code quality specifically on code logic testing.

Alessio: How did you get there? Obviously it's a great idea. Like, what was the idea, maze? How did you get here?

Itamar: I'll go back long. So, yes I was two and a half times a CTO, VC backed startup CTO where we talked about the last one that I sold to Alibaba. But basically I'm like, it's weird to say by 20 years already of R&D manager, I'm not like the best programmer because like you mentioned, I'm coming more from the machine learning / computer vision side, one, one of the main application, but a lot of optimization. So I’m not necessarily the best coder, but I am like 20 year R&D manager. And I found that verifying code logic is very hard thing. And one of the thing that really makes it difficult to increase the development velocity.

So you have tools related to checking performance.You have tools for vulnerabilities and security, Israelis are really good at that. But do you have a tool that actually helps you test code logic? I think what we have like dozens or hundreds, even thousands that help you on the end to end, maybe on the microservice integration system. But when you talk about code level, there isn't anything.

So that was the pain I always had, especially when I did have tools for that, for the hardware. Like I worked in Mellanox to be sold to Nvidia as a student, and we had formal tools, et cetera. [00:04:30] So that's one part.

The second thing is that after being sold to Alibaba, the team and I were quite a big team that worked on machine learning, large language model, et cetera, building developer tools relate with, with LLMs throughout the golden years of. 2017 to 2021, 2022. And we saw how powerful they became.

So basically, if I frame it this way, because we develop it for so many use cases, we saw that if you're able to take a problem put a framework of a language around it, whether it's analyzing browsing behavior, or DNA, or etc, if you can put a framework off a language, then LLMs take you really far.

And then I thought this problem that I have with code logic testing is basically a combination of a few languages: natural language, specification language, technical language. Even visual language to some extent. And then I quit Alibaba and took a bit of time to maybe wrap things around and rest a bit after 20 years of startup and corporate and joined with my partner Dedy Kredo who was my ever first employee.

And that's how we like, came to this idea.

Alessio: The idea has obviously been around and most people have done AST analysis, kinda like an abstract syntax tree, but it's kind of hard to get there with just that. But I think these models now are getting good enough where you can mix that and also traditional logical reasoning.

Itamar: Exactly.

Alessio: Maybe talk a little bit more about the technical implementation of it. You mentioned the agent [00:06:00] part. You mentioned some of the model part, like what happens behind the scenes when Codium gets in your code base?

Itamar: First of all, I wanna mention I think you're really accurate.

If you try to take like a large language model as is and try to ask it, can you like, analyze, test the code, etc, it'll not work so good. By itself it's not good enough on the other side, like all the traditional techniques we already started to invent since the Greek times. You know, logical stuff, you mentioned ASTs, but there's also dynamic code analysis, mutation testing, etc. There's a lot of the techniques out there, but they have inefficiencies.

And a lot of those inefficiencies are actually matching with AI capabilities. Let me give you one example. Let's say you wanna do fuzzy testing or mutation testing.

Mutation testing means that you either mutate the test, like the input of the test, the code of the test, etc or you mutate the code in order to check how good is your test suite.

For example, if I mutate some equation in the application code and the test finds a bug and it does that at a really high rate, like out of 100 mutation, I [00:07:30] find all of the 100 problems in the test. It's probably a very strong test suite.

Now the problem is that there's so many options for what to mutate in the data, in the test. And this is where, for example, AI could help, like pointing out where's the best thing that you can mutate. Actually, I think it's a very good use case. Why? Because even if AI is not 100% accurate, even if it's 80% accurate, it could really take you quite far rather just randomly selecting things.

So if I wrap up, just go back high level. I think LLM by themselves cannot really do the job of verifying code logic and and neither can the traditional ones, so you need to merge them. But then one more thing before maybe you tell me where to double click. I think with code logic there's also a philosophy question here.

Logic different from performance or quality. If I did a three for in loop, like I loop three things and I can fold them with some vector like in Python or something like that. We need to get into the mind of the developer. What was the intention? Like what is the bad code? Not what is the code logic that doesn't work. It's not according to the specification. So I think like one more thing that AI could really help is help to match, like if there is some natural language description of the code, we can match it. Or if there's missing information in natural language that needs [00:09:00] to be asked for the AI could help asking the user.

It's not like a closed solution. Rather open and leaving the developer as the lead. Just like moving the developer from, from being the coder to actually being like a pilot that that clicks button and say, ah, this is what I meant, or this is the fix, rather actually writing all the code.

Alessio: That makes sense. I think I talked about it on the podcast before, but like the switch from syntax to like semantics, like developers used to be focused on the syntax and not the meaning of what they're writing. So now you have the models that are really good at the syntax and you as a human are supposed to be really good at the semantics of what you're trying to build.

How does it practically work? So I'm a software developer, I want to use Codium, like how do I start and then like, how do you make that happen in the, in the background?

Itamar: So, like I said, Codium right now is an IDE extension. For example, I'm showing VS code. And if you just install it, like you'll have a few access points to start Codium AI, whether this sidebar or above every component or class that we think is very good to check with Codium.

You'll have this small button. There's other way you can mark specific code and right click and run code. But this one is my favorite because we actually choose above which components we suggest to use code. So once I click it code, I starts analyzing this class. But not only this class, but almost everything that is [00:10:30] being used by the call center class.

But all and what's call center is, is calling. And so we do like a static code analysis, et cetera. What, what we talked about. And then Codium provides with code analysis. It's right now static, like you can't change. It can edit it, and maybe later we'll talk about it. This is what we call the specification and we're going to make it editable so you can add additional behaviors and then create accordingly, test that will not pass, and then the code will, will change accordingly. So that's one entrance point, like via natural language description. That's one of the things that we're working on right now. What I'm showing you by the way, could be downloaded as is. It's what we have in production.

The second thing that we show here is like a full test suite. There are six tests by default but you can just generate more almost as much as you want every time. We'll try to cover something else, like a happy pass edge case et cetera. You can talk with specific tests, okay? Like you can suggest I want this in Spanish or give a few languages, or I want much more employees.

I didn't go over what's a call center, but basically it manages like call center. So you can imagine, I can a ask to make it more rigorous, etc, but I don't wanna complicate so I'm keeping it as is.

I wanna show you the next one, which is run all test. First, we verify that you're okay, we're gonna run it. I don't know, maybe we are connected to the environment that is currently [00:12:00] configured in the IDE. I don't know if it's production for some reason, or I don't know what. Then we're making sure that you're aware we're gonna run the code that and then once we run, we show if it pass or fail.

I hope that we'll have one fail. But I'm not sure it's that interesting. So I'll go like to another example soon, but, but just to show you what's going on here, that we actually give an example of what's a problem. We give the log of the error and then you can do whatever you want.

You can fix it by yourself, or you can click reflect and fix, and what's going on right now is a bit a longer process where we do like chain of thought or reflect and fix. And we can suggest a solution. You can run it and in this case it passes. Just an example, this is a very simple example.

Maybe later I'll show you a bug. I think I'll do that and I'll show you a bug and how we recognize actually the test. It's not a problem in the test, it's a problem in the code and then suggest you fix that instead of the code. I think you see where I'm getting at.

The other thing is that there are a few code suggestion, and there could be a dozen of, of types that could be related to performance modularity or I see this case there is a maintainability.

There could also be vulnerability or best practices or even suggestion for bugs. Like if we noticed, if we think one of the tests, for example, is failing because of a bug. So just code presented in the code suggestion. Probably you can choose a few, for example, if you like, and then prepare a code change like I didn't show you which exactly.

We're making a diff now that you can apply on your code. So basically what, what we're seeing here is that [00:13:30] there are three main tabs, the code, the test and the code analysis. Let's call spec.

And then there's a fourth tab, which is a code suggestion, if you wanna look at analytics, etc. Mm-hmm. Right now code okay. This is the change or quite a big change probably clicked on something. So that's the basic demo.

Right now let's be frank. Like I wanted to show like a simple example. So it's a call center. All the inputs to the class are like relatively simple. There is no jsm input, like if you're Expedia or whatever, you have a J with the hotels, Airbnb, you know, so the test will be almost like too simple or not covering enough.

Your code, if you don't provide it with some input is valuable, like adjacent with all information or YAMA or whatever. So you can actually add input data and the AI or model. It's actually by the way, a set of models and algorithms that will use that input to create interesting tests. And another thing is many people have some reference tests that they already made. It could be because they already made it or because they want like a very specific they have like how they imagine the test. So they just write one and then you add a reference and that will inspire all the rest of the tests. And also you can give like hints. [00:15:00] This is by the way plan to be like dynamic hints, like for different type of code.

We will provide different hints. So we can help you become a bit more knowledgeable about how to test your code. So you can ask for like having a, a given one then, or you can have like at a funny private, like make different joke for each test or for example,

Swyx: I'm curious, why did you choose that one? This is the pirate one. Yeah.

Itamar: Interesting choice to put on your products. It could be like 11:00 PM of people sitting around. Let's choose one funny thing

Swyx: and yeah. So two serious ones and one funny one. Yeah. Just for the listening audience, can you read out the other hints that you decided on as well?

Itamar: Yeah, so specifically, like for this case, relatively very simple class, so there's not much to do, but I'm gonna go to one more thing here on the configuration. But it basically is given when then style, it's one of the best practices and tests. So even when I report a bug, for example, I found a bug when someone else code, usually I wanna say like, given, use this environment or use that this way when I run this function, et cetera.

Oh, then it's a very, very full report. And it's very common to use that in like in unit test and perform.

Swyx: I have never been shown this format.

Itamar: I love that you, you mentioned that because if you go to CS undergrad you take so many courses in development, but none of them probably in testing, and it's so important. So why would you, and you don't go to Udemy or [00:16:30] whatever and, and do a testing course, right? Like it's, it's boring. Like people either don't do component level testing because they hate it or they do it and they hate it. And I think part of it it’s because they're missing tool to make it fun.

Also usually you don't get yourself educated about it because you wanna write your code. And part of what we're trying to do here is help people get smarter about testing and make it like easy. So this is like very common. And the idea here is that for different type of code, we'll suggest different type of hints to make you more knowledgeable.

We're doing it on an education app, but we wanna help developers become smarter, more knowledgeable about this field. And another one is mock. So right now, our model decided that there's no need for mock here, which is a good decision. But if we would go to real world case, like, I'm part of AutoGPT community and there's all of tooling going on there. Right? And maybe when I want to test like a specific component, and it's relatively clear that going to the web and doing some search and coming back, I don't really need to do that. Like I know what I expect to do and so I can mock that part of using to crawl the web.

A certain percentage of accuracy, like around 90, we will decide this is worth mocking and we will inject it. I can click it now and force our system to mock this. But you'll see like a bit stupid mocking because it really doesn't make sense. So I chose this pirate stuff, like add funny pirate like doc stringing make a different joke for each test.

And I forced it to add mocks, [00:18:00] the tests were deleted and now we're creating six new tests. And you see, here's the shiver me timbers, the test checks, the call successful, probably there's some joke at the end. So in this case, like even if you try to force it to mock it didn't happen because there's nothing but we might find here like stuff that it mock that really doesn't make sense because there's nothing to mock here.

So that's one thing I. I can show a demo where we actually catch a bug. And, and I really love that, you know how it is you're building a developer tools, the best thing you can see is developers that you don't know giving you five stars and sharing a few stuff.

We have a discord with thousands of users. But I love to see the individual reports the most. This was one of my favorites. It helped me to find two bugs. I mentioned our vision is to reach zero bugs. Like, if you may say, we want to clean the internet from bugs.

Swyx: So debugging the internet. I have my podcast title.

Itamar: So, so I think like if we move to another example

Swyx: Yes, yes, please, please. This is great.

Itamar: I'm moving to a different example, it is the bank account. By the way, if you go to ChatGPT and, and you can ask me what's the difference between Codium AI and using ChatGPT.

Mm-hmm. I'm, I'm like giving you this hard question later. Yeah. So if you ask ChatGPT give me an example to test a code, it might give you this bank account. It's like the one-on-one stuff, right? And one of the reasons I gave it, because it's easy to inject bugs here, that's easy to understand [00:19:30] anyway.

And what I'm gonna do right now is like this bank account, I'm gonna change the deposit from plus to minus as an example. And then I'm gonna run code similarly to how I did before, like it suggests to do that for the entire class. And then there is the code analysis soon. And when we announce very soon, part of this podcast, it's going to have more features here in the code analysis.

We're gonna talk about it. Yep. And then there is the test that I can run. And the question is that if we're gonna catch the bag, the bugs using running the test, Because who knows, maybe this implementation is the right one, right? Like you need to, to converse with the developer. Maybe in this weird bank, bank you deposit and, and the bank takes money from you.

And we could talk about how this happens, but actually you can see already here that we are already suggesting a hint that something is wrong here and here's a suggestion to put it from minus to to plus. And we'll try to reflect and, and fix and then we will see actually the model telling you, hey, maybe this is not a bug in the test, maybe it's in the code.

Swyx: I wanna stay on this a little bit. First of all, this is very impressive and I think it's very valuable. What user numbers can you disclose, you launched it and then it's got fairly organic growth. You told me something off the air, but you know, I just wanted to show people like this is being adopted in quite a large amount.

Itamar: [00:21:00] First of all, I'm a relatively transparent person. Like even as a manager, I think I was like top one percentile being transparent in Alibaba. It wasn't five out of five, which is a good thing because that's extreme, but it was a good, but it also could be a bad, some people would claim it's a bad thing.

Like for example, if my CTO in Alibaba would tell me you did really bad and it might cut your entire budget by 30%, if in half a year you're not gonna do like much better and this and that. So I come back to a team and tell 'em what's going on without like trying to smooth thing out and we need to solve it together.

If not, you're not fitting in this team. So that's my point of view. And the same thing, one of the fun thing that I like about building for developers, they kind of want that from you. To be transparent. So we are on the high numbers of thousands of weekly active users. Now, if you convert from 50,000 downloads to high thousands of weekly active users, it means like a lot of those that actually try us keep using us weekly.

I'm not talking about even monthly, like weekly. And that was like one of their best expectations because you don't test your code every day. Right now, you can see it's mostly focused on testing. So you probably test it like once a week. Like we wanted to make it so smooth with your development methodology and development lifecycle that you use it every day.

Like at the moment we hope it to be used weekly. And that's what we're getting. And the growth is about like every two, three weeks we double the amount of weekly and downloads. It's still very early, like seven weeks. So I don't know if it'll keep that way, but we hope so. Well [00:22:30] actually I hope that it'll be much more double every two, three weeks maybe. Thanks to the podcast.

Swyx: Well, we, yeah, we'll, we'll add you know, a few thousand hopefully. The reason I ask this is because I think there's a lot of organic growth that people are sharing it with their friends and also I think you've also learned a lot from your earliest days in, in the private beta test.

Like what have you learned since launching about how people want to use these testing tools?

Itamar: One thing I didn't share with you is like, when you say virality, there is like inter virality and intra virality. Okay. Like within the company and outside the company. So which teams are using us? I can't say, but I can tell you that a lot of San Francisco companies are using us.

And one of the things like I'm really surprised is that one team, I saw one user two weeks ago, I was so happy. And then I came yesterday and I saw 48 of that company. So what I'm trying to say to be frank is that we see more intra virality right now than inter virality. I don't see like video being shared all around Twitter. See what's going on here. Yeah. But I do see, like people share within the company, you need to use it because it's really helpful with productivity and it's something that we will work about the [00:24:00] inter virality.

But to be frank, first I wanna make sure that it's helpful for developers. So I care more about intra virality and that we see working really well, because that means that tool is useful. So I'm telling to my colleague, sharing it on, on Twitter means that I also feel that it will make me cool or make me, and that's something maybe we'll need, still need, like testing.

Swyx: You know, I don't, well, you're working on that. We're gonna announce something like that. Yeah. You are generating these tests, you know, based on what I saw there. You're generating these tests basically based on the name of the functions. And the doc strings, I guess?

Itamar:

So I think like if you obfuscate the entire code, like our accuracy will drop by 50%. So it's right. We're using a lot of hints that you see there. Like for example, the functioning, the dog string, the, the variable names et cetera. It doesn't have to be perfect, but it has a lot of hints.

By the way. In some cases, in the code suggestion, we will actually suggest renaming some of the stuff that will sync, that will help us. Like there's suge renaming suggestion, for example. Usually in this case, instead of calling this variable is client and of course you'll see is “preferred client” because basically it gives a different commission for that.

So we do suggest it because if you accept it, it also means it will be easier for our model or system to keep improving.

Swyx: Is that a different model?

Itamar: Okay. That brings a bit to the topic of models properties. Yeah. I'll share it really quickly because Take us off. Yes. It's relevant. Take us off. Off. Might take us off road.

I think [00:25:30] like different models are better on different properties, for example, how obedient you are to instruction, how good you are to prompt forcing, like to format forcing. I want the results to be in a certain format or how accurate you are or how good you are in understanding code.

There's so many calls happening here to models by the way. I. Just by clicking one, Hey Codium AI. Can you help me with this bank account? We do a dozen of different calls and each feature you click could be like, like with that reflect and fix and then like we choose the, the best one.

I'm not talking about like hundreds of models, but we could, could use different APIs of open AI for example, and, and other models, et cetera. So basically like different models are better on different aspect. Going back to your, what we talked about, all the models will benefit from having those hints in, in the code, that rather in the code itself or documentation, et cetera.

And also in the code analysis, we also consider the code analysis to be the ground truth to some extent. And soon we're also going to allow you to edit it and that will use that as well.

Alessio: Yeah, maybe talk a little bit more about. How do I actually get all these models to work together? I think there's a lot of people that have only been exposed to Copilot so far, which is one use case, just complete what I'm writing. You're doing a lot more things here. A lot of people listening are engineers themselves, some of them build these tools, so they would love to [00:27:00] hear more about how do you orchestrate them, how do you decide which model the what, stuff like that.

Itamar: So I'll start with the end because that is a very deterministic answer, is that we benchmark different models.

Like every time this there a new model in, in town, like recently it's already old news. StarCoder. It's already like, so old news like few days ago.

Swyx: No, no, no. Maybe you want to fill in what it is StarCoder?

Itamar: I think StarCoder is, is a new up and coming model. We immediately test it on different benchmark and see if, if it's better on some properties, et cetera.

We're gonna talk about it like a chain of thoughts in different part in the chain would benefit from different property. If I wanna do code analysis and, and convert it to natural language, maybe one model would be, would be better if I want to output like a result in, in a certain format.

Maybe another model is better in forcing the, a certain format you probably saw on Twitter, et cetera. People talk about it's hard to ask model to output JSON et cetera. So basically we predefine. For different tasks, we, we use different models and I think like this is for individuals, for developers to check, try to sync, like the test that now you are working on, what is most important for you to get, you want the semantic understanding, that's most important? You want the output, like are you asking for a very specific [00:28:30] output?

It's just like a chat or are you asking to give a output of code and have only code, no description. Or if there's a description of the top doc string and not something else. And then we use different models. We are aiming to have our own models in in 2024. Being independent of any other third party, like OpenAI or so, but since our product is very challenging, it has UI/UX challenges, engineering challenge, statical and dynamical analysis, and AI.

As entrepreneur, you need to choose your battles. And we thought that it's better for us to, to focus on everything around the model. And one day when we are like thinking that we have the, the right UX/UI engineering, et cetera, we'll focus on model building. This is also, by the way, what we did in in Alibaba.

Even when I had like half a million dollar a month for trading one foundational model, I would never start this way. You always try like first using the best model you can for your product. Then understanding what's the glass ceiling for that model? Then fine tune a foundation model, reach a higher glass ceiling and then training your own.

That's what we're aiming and that's what I suggest other developers like, don't necessarily take a model and, and say, oh, it's so easy these days to do RLHF, et cetera. Like I see it’s like only $600. Yeah, but what are you trying to optimize for? The properties. Don't try to like certain models first, organize your challenges.

Understand the [00:30:00] properties you're aiming for and start playing with that. And only then go to train your own model.

Alessio: Yeah. And when you say benchmark, you know, we did a one hour long episode, some benchmarks, there's like many of them. Are you building some unique evals to like your own problems? Like how are you doing that? And that's also work for your future model building, obviously, having good benchmarks. Yeah.

Itamar:. Yeah. That's very interesting. So first of all, with all the respect, I think like we're dealing with ML benchmark for hundreds of years now.

I'm, I'm kidding. But like for tens of years, right? Benchmarking statistical creatures is something that, that we're doing for a long time. I think what's new here is the generative part. It's an open challenge to some extent. And therefore, like maybe we need to re rethink some of the way we benchmark.

And one of the notions that I really believe in, I don't have a proof for that, is like create a benchmark in levels. Let's say you create a benchmark from level one to 10, and it's a property based benchmark. Let's say I have a WebGPT ask something from the internet and then it should fetch it for me.

So challenge level one could be, I'm asking it and it brings me something. Level number two could be I'm asking it and it has a certain structure. Let's say for example, I want to test AutoGPT. Okay. And I'm asking it to summarize what's the best cocktail I could have for this season in San Francisco.

So [00:31:30] I would expect, like, for example, for that model to go. This is my I what I think to search the internet and do a certain thing. So level number three could be that I want to check that as part of this request. It uses a certain tools level five, you can add to that. I expect that it'll bring me back something like relevance and level nine it actually prints the cocktail for me I taste it and it's good. So, so I think like how I see it is like we need to have data sets similar to before and make sure that we not fine tuning the model the same way we test it. So we have one challenges that we fine tune over, right? And few challenges that we don't.

And the new concept may is having those level which are property based, which is something that we know from software testing and less for ML. And this is where I think that these two concepts merge.

Swyx: Maybe Codium can do ML testing in the future as well.

Itamar: Yeah, that's a good idea.

Swyx: Okay. I wanted to cover a little bit more about Codium in the present and then we'll go into the slides that you have.

So you have some UI/UX stuff and you've obviously VS Code is the majority market share at this point of IDE, but you also have IntelliJ right?

Itamar: Jet Brains in general.

Swyx: Yeah. Anything that you learned supporting JetBrains stuff? You were very passionate about this one user who left you a negative review.

What is the challenge of that? Like how do you think about the market, you know, maybe you should focus on VS Code since it's so popular?

Itamar: Yeah. [00:33:00] So currently the VS Code extension is leading over JetBrains. And we were for a long time and, and like when I tell you long time, it could be like two or three weeks with version oh 0.5, point x something in, in VS code, although oh 0.4 or so a jet brains, we really saw the difference in, in the how people react.

So we also knew that oh 0.5 is much more meaningful and one of the users left developers left three stars on, on jet brands and I really remember that. Like I, I love that. Like it's what do you want to get at, at, at our stage? What's wrong? Like, yes, you want that indication, you know, the worst thing is getting nothing.

I actually, not sure if it's not better to get even the bad indication, only getting good ones to be re frank like at, at, at least in our stage. So we're, we're 9, 10, 10 months old startup. So I think like generally speaking We find it easier and fun to develop in vs code extension versus JetBrains.

Although JetBrains has like very nice property, when you develop extension for one of the IDEs, it usually works well for all the others, like it's one extension for PyCharm, and et cetera. I think like there's even more flexibility in the VS code. Like for example, this app is, is a React extension as opposed that it's native in the JetBrains one we're using. What I learned is that it's basically is almost like [00:34:30] developing Android and iOS where you wanna have a lot of the best practices where you have one backend and all the software development like best practices with it.

Like, like one backend version V1 supports both under Android and iOS and not different backends because that's crazy. And then you need all the methodology. What, what means that you move from one to 1.1 on the backend? What supports whatnot? If you don't what I'm talking about, if you developed in the past, things like that.

So it's important. And then it's like under Android and iOS and, and you relatively want it to be the same because you don't want one developer in the same team working with Jet Brains and then other VS code and they're like talking, whoa, that's not what I'm seeing. And with code, what are you talking about?

And in the future we're also gonna have like teams offering of collaboration Right now if you close Codium Tab, everything is like lost except of the test code, which you, you can, like if I go back to a test suite and do open as a file, and now you have a test file with everything that you can just save, but all the goodies here it's lost. One day we're gonna have like a platform you can save all that, collaborate with people, have it part of your PR, like have suggested part of your PR. And then you wanna have some alignment. So one of the challenges, like UX/UI, when you think about a feature, it should, some way or another fit for both platforms be because you want, I think by the way, in iOS and Android, Android sometimes you don’t care about parity, but here you're talking about developers that might be on the same [00:36:00] team.

So you do care a lot about that.

Alessio: Obviously this is a completely different way to work for developers. I'm sure this is not everything you wanna build and you have some hint. So maybe take us through what you see the future of software development look like.

Itamar: Well, that's great and also like related to our announcement, what we're working on.

Part of it you already start seeing in my, in my demo before, but now I'll put it into a framework. I'll be clearer. So I think like the software development world in 2025 is gonna look very different from 2020. Very different. By the way. I think 2020 is different from 2000. I liked the web development in 95, so I needed to choose geocities and things like that.

Today's much easier to build a web app and whatever, one of the cloud. So, but I think 2025 is gonna look very different in 2020 for the traditional coding. And that's like a paradigm I don't think will, will change too much in the last few years. And, and I'm gonna go over that when I, when I'm talking about, so j just to focus, I'm gonna show you like how I think the intelligence software development world look like, but I'm gonna put it in the lens of Codium AI.

We are focused on code integrity. We care that with all this advancement of co-generation, et cetera, we wanna make sure that developers can code fast with confidence. That they have confidence on generated code in the AI that they are using that. That's our focus. So I'm gonna put, put that like lens when I'm going to explain.

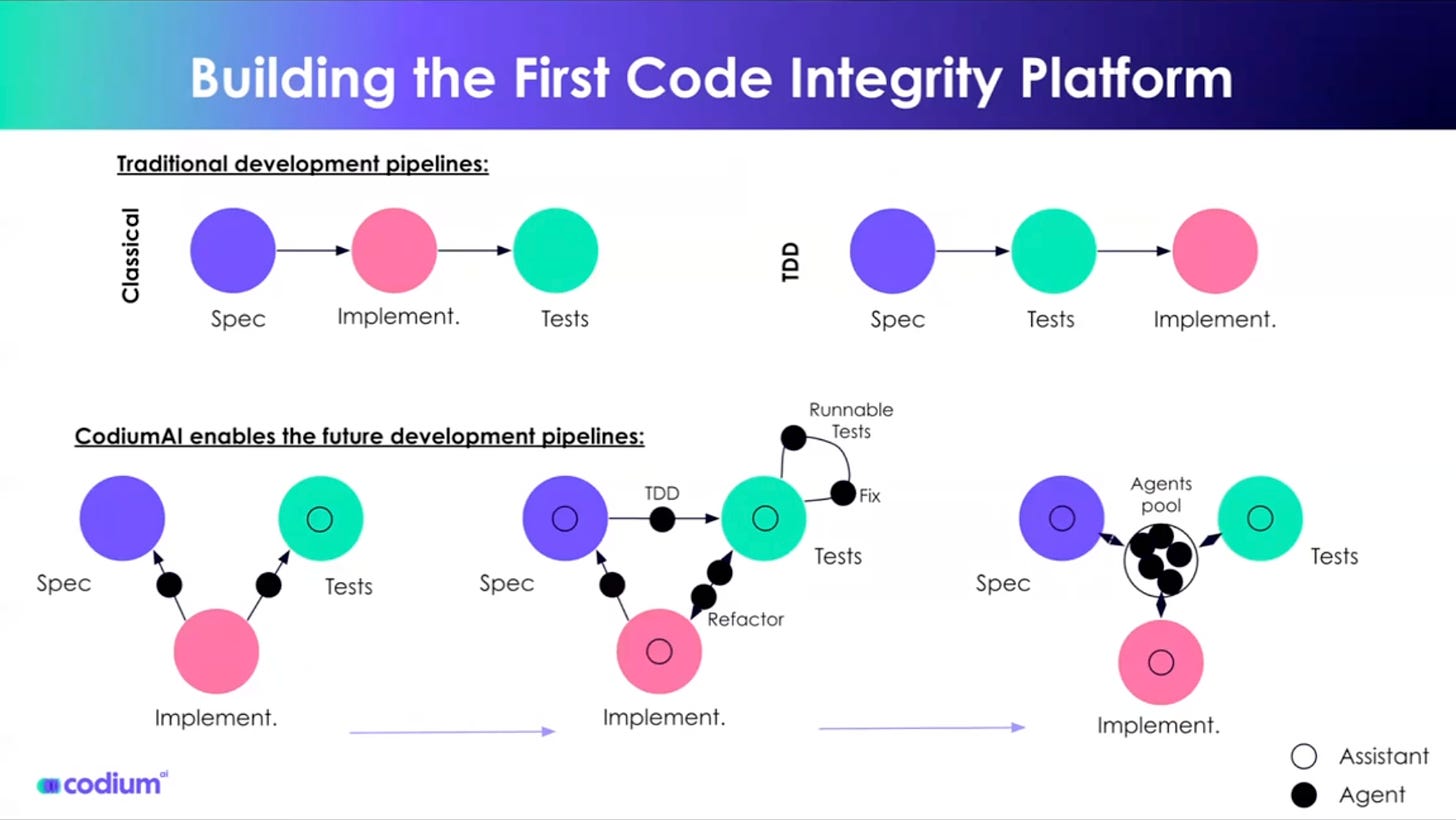

So I think like traditional development. Today works like creating some spec for different companies, [00:37:30] different development teams. Could mean something else, could be something on Figma, something on Google Docs, something on Jira. And then usually you jump directly to code implementation. And then if you have the time or patience, or will, you do some testing.

And I think like some people would say that it's better to do TDD, like not everyone. Some would say like, write spec, write your tests, make sure they're green, that they do not pass. Write your implementation until your test pass. Most people do not practice it. I think for just a few, a few reason, let them mention two.

One, it's tedious and I wanna write my code like before I want my test. And I don't think, and, and the second is, I think like we're missing tools to make it possible. And what we are advocating, what I'm going to explain is actually neither. Okay. It's very, I want to say it's very important. So here's how we think that the future of development pipeline or process is gonna look like.

I'm gonna redo it in steps. So, first thing I think there do I wanna say that they're gonna be coding assistance and coding agents. Assistant is like co-pilot, for example, and agents is something that you give it a goal or a task and actually chains a few tasks together to complete your goal.

Let's have that in mind. So I think like, What's happening right now when you saw our demo is what I presented a few minutes ago, is that you start with an implementation and we create spec for you and test for you. And that was like a agent, like you didn't converse with it, you just [00:39:00] click a button.

And, and we did a, a chain of thought, like to create these, that's why it's it's an agent. And then we gave you an assistant to change tests, like you can converse it with it et cetera. So that's like what I presented today. What we're announcing is about a vision that we called the DRY. Don't repeat yourself. I'm gonna get to that when I'm, when I'm gonna show you the entire vision. But first I wanna show you an intermediate step that what we're going to release. So right now you can write your code. Or part of it, like for example, just a class abstract or so with a coding assistant like copilot and maybe in the future, like a Codium AI coding assistant.

And then you can create a spec I already presented to you. And the next thing is that you going to have like a spec assistant to generate technical spec, helping you fill it quickly focused on that. And this is something that we're working on and, and going to release the first feature very soon as part of announcement.

And it's gonna be very lean. Okay? We're, we're a startup that going bottom up, like lean features going to more and more comprehensive one. And then once you have the spec and implementation, you can either from implementation, have tests, and then you can run the test and fix them like I presented to you.

But you can also from spec create tests, okay? From the spec directly to tests. [00:40:30]

So then now you have a really interesting thing going on here is that you can start from spec, create, test, create code. You can start from test create code. You can start from a limitation. From code, create, spec and test. And actually we think the future is a very flexible one. You don't need to choose what you're practicing traditional TDD or whatever you wanna start with.

If you have already some spec being created together with one time in one sprint, you decided to write a spec because you wanted to align about it with your team, et cetera, and now you can go and create tests and implementation or you wanted to run ahead and write your code. Creating tests and spec that aligns to it will be relatively easy.

So what I'm talking about is extreme DRY concept; DRY is don't repeat yourself. Until today when we talked about DRY is like, don't repeat your code. I claim that there is a big parts of the spec test and implementation that repeat himself, but it's not a complete repetition because if spec was as detailed as the implementation, it's actually the implementation.

But the spec is usually in different language, could be natural language and visual. And what we're aiming for, our vision is enabling the dry concept to the extreme. With all these three: you write your test will help you generate the code and the spec you write your spec will help you doing the test and implementation.

Now the developers is the driver, okay? You'll have a lot [00:42:00] of like, what do you think about this? This is what you meant. Yes, no, you wanna fix the coder test, click yes or no. But you still be the driver. But there's gonna be like extreme automation on the DRY level. So that's what we're announcing, that we're aiming for as our vision and what we're providing these days in our product is the middle, is what, what you see in the middle, which is our code integrity agents working for you right now in your id, but soon also part of your Github actions, et cetera, helping you to align all these three.

Alessio: This is great. How do you reconcile the difference in languages, you know, a lot of times the specs is maybe like a PM or it's like somebody who's more at the product level.

Some of the implementation details is like backend developers for something. Frontend for something. How do you help translate the language between the two? And then I think in the one of the blog posts on your blog, you mentioned that this is also changing maybe how programming language themselves work. How do you see that change in the future? Like, are people gonna start From English, do you see a lot of them start from code and then it figures out the English for them?

Itamar: Yeah. So first of all, I wanna say that although we're working, as we speak on managing we front-end frameworks and languages and usage, we are currently focused on the backend.

So for example, as the spec, we won't let you input Figma, but don't be surprised if in 2024 the input of the spec could be a Figma. Actually, you can see [00:43:30] demos of that on a pencil drawing from OpenAI and when he exposed the GPT-4. So we will have that actually.

I had a blog, but also I related to two different blogs. One, claiming a very knowledgeable and respectful, respectful person that says that English is going to be the new language program language and, and programming is dead. And another very respectful person, I think equally said that English is a horrible programming language.

And actually, I think both of are correct. That's why when I wrote the blog, I, I actually related, and this is what we're saying here. Nothing is really fully redundant, but what's annoying here is that to align these three, you always need to work very hard. And that's where we want AI to help with. And if there is inconsistency will raise a question, what do, which one is true?

And just click yes or no or test or, or, or code that, that what you can see in our product and we'll fix the right one accordingly. So I think like English and, and visual language and code. And the test language, let's call it like, like that for a second. All of them are going to persist. And just at the level of automation aligning all three is what we're aiming for.

Swyx: You told me this before, so I I'm, I'm just actually seeing Alessio’s reaction to it as a first time.

Itamar: Yeah, yeah. Like you're absorbing like, yeah, yeah.

Swyx: No, no. This is, I mean, you know, you can put your VC hat on or like compare, like what, what is the most critical or unsolved question presented by this vision?

Alessio: A lot of these tools, especially we've seen a lot in the past, it's like the dynamic nature of a lot of this, you know?

[00:45:00] Yeah. Sometimes, like, as you mentioned, sometimes people don't have time to write the test. Sometimes people don't have time to write the spec. Yeah. So sometimes you end up with things. Out of sync, you know? Yeah. Or like the implementation is moving much faster than the spec, and you need some of these agents to make the call sometimes to be like, no.

Yeah, okay. The spec needs to change because clearly if you change the code this way, it needs to be like this in the future. I think my main question as a software developer myself, it's what is our role in the future? You know? Like, wow, how much should we intervene, where should we intervene?

I've been coding for like 15 years, but if I've been coding for two years, where should I spend the next year? Yeah. Like focus on being better at understanding product and explain it again. Should I get better at syntax? You know, so that I can write code. Would love have any thoughts.

Itamar: Yeah. You know, there's gonna be a difference between 1, 2, 3 years, three to six, six to 10, and 10 to 20. Let's for a second think about the idea that programming is solved. Then we're talking about a machine that can actually create any piece of code and start creating, like we're talking about singularity, right?

Mm-hmm. If the singularity happens, then we're talking about this new set of problems. Let's put that aside. Like even if it happens in 2041, that's my prediction. I'm not sure like you should aim for thinking what you need to do, like, or not when the singularity happens. So I, [00:46:30] I would aim for mm-hmm.

Like thinking about the future of the next five years or or, so. That's my recommendation because it's so crazy. Anyway. Maybe not the best recommendation. Take that we're for grain of salt. And please consult with a lawyer, at least in the scope of, of the next five years. The idea that the developers is the, the driver.

It actually has like amazing team members. Agents that working for him or her and eventually because he or she's a driver, you need to understand especially what you're trying to achieve, but also being able to review what you get. The better you are in the lower level of programming in five years, it it mean like real, real program language.

Then you'll be able to develop more sophisticated software and you will work in companies that probably pay more for sophisticated software and the more that you're less skilled in, in the actual programming, you actually would be able to be the programmer of the new era, almost a creator. You'll still maybe look on the code levels testing, et cetera, but what's important for you is being able to convert products, requirements, et cetera, to working with tools like Codium AI.

So I think like there will be like degree of diff different type developers now. If you think about it for a second, I think like it's a natural evolution. It's, it's true today as well. Like if you know really good the Linux or assembly, et cetera, you'll probably work like on LLVM Nvidia [00:48:00] whatever, like things like that.

Right. And okay. So I think it'll be like the next, next step. I'm talking about the next five years. Yeah. Yeah. Again, 15 years. I think it's, it's a new episode if you would like to invite me. Yeah. Oh, you'll be, you'll be back. Yeah. It's a new episode about how, how I think the world will look like when you really don't need a developer and we will be there as Cody mi like you can see.

Mm-hmm.

Alessio: Do we wanna dive a little bit into AutoGPT? You mentioned you're part of the community. Yeah.

Swyx: Obviously Try, Catch, Finally, Repeat is also part of the company motto.

Itamar: Yeah. So it actually really. Relates to what we're doing and there's a reason we have like a strong relationship and connection with the AutoGPT community and us being part part of it.

So like you can see, we're talking about agent for a few months now, and we are building like a designated, a specific agent because we're trying to build like a product that works and gets the developer trust to have developer trust us. We're talking about code integrity. We need it to work. Like even if it will not put 100% it's not 100% by the way our product at all that UX/UI should speak the language of, oh, okay, we're not sure here, please take the driving seat.

You want this or that. But we really not need, even if, if we're not close to 100%, we still need to work really well just throwing a number. 90%. And so we're building a like really designated agents like those that from code, create tests.

So it could create tests, run them, fix them. It's a few tests. So we really believe in that we're [00:49:30] building a designated agent while Auto GPT is like a swarm of agents, general agents that were supposedly you can ask, please make me rich or make me rich by increase my net worth.

Now please be so smart and knowledgeable to use a lot of agents and the tools, et cetera, to make it work. So I think like for AutoGPT community was less important to be very accurate at the beginning, rather to show the promise and start building a framework that aims directly to the end game and start improving from there.

While what we are doing is the other way around. We're building an agent that works and build from there towards that. The target of what I explained before. But because of this related connection, although it's from different sides of the, like the philosophy of how you need to build those things, we really love the general idea.

So we caught it really early that with Toran like building it, the, the maker of, of AutoGPT, and immediately I started contributing, guess what, what did I contribute at the beginning tests, right? So I started using Codium AI to build tests for AutoGPT, even, even finding problems this way, et cetera.

So I become like one of the, let's say 10 contributors. And then in the core team of the management, I talk very often with with Toran on, on different aspects. And we are even gonna have a workshop,

Swyx: a very small [00:49:00] meeting

Itamar: work meeting workshop. And we're going to compete together in a, in a hackathons.

And to show that AutoGPT could be useful while, for example, Codium AI is creating the test for it, et cetera. So I'm part of that community, whether is my team are adding tests to it, whether like advising, whether like in in the management team or whether to helping Toran. Really, really on small thing.

He is the amazing leader like visionaire and doing really well.

Alessio: What do you think is the future of open source development? You know, obviously this is like a good example, right? You have code generating the test and in the future code could actually also implement the what the test wanna do. So like, yeah.

How do you see that change? There's obviously not enough open source contributors and yeah, that's one of the, the main issue. Do you think these agents are maybe gonna help us? Nadia Eghbal has this great book called like Working in Public and there's this type of projects called Stadium model, which is, yeah, a lot of people use them and like nobody wants to contribute to them.

I'm curious about, is it gonna be a lot of noise added by a lot of these agents if we let them run on any repo that is open source? Like what are the contributing guidelines for like humans versus agents? I don't have any of the answers, but like some of the questions that I've been thinking about.

Itamar: Okay. So I wanna repeat your question and make sure I understand you, but like, if they're agents, for example, dedicated for improving code, why can't we run them on, mm-hmm.

Run them on like a full repository in, in fixing that? The situation right now is that I don't think that right now Auto GPT would be able to do that for you. Codium AI might but it's not open sourced right now. And and like you can see like in the months or two, you will be able to like running really quickly like development velocity, like our motto is moving fast with confidence by the way.

So we try to like release like every day or so, three times even a day in the backend, et cetera. And we'll develop more feature, enable you, for example, to run an entire re, but, but it's not open source. So about the open source I think like AutoGPT or LangChain, you can't really like ask please improve my repository, make it better.

I don't think it will work right now because because let me like. Softly quote Ilya from Open AI. He said, like right now, let's say that a certain LLM is 95% accurate. Now you're, you're concatenating the results. So the accuracy is one point like it's, it's decaying. And what you need is like more engineering frameworks and work to be done there in order to be able to deal with inaccuracies, et cetera.

And that's what we specialize in Codium, but I wanna say that I'm not saying that Auto GPT won't be able to get there. Like the more tools and that going to be added, the [00:52:30] more prompt engineering that is dedicated for this, this idea will be added by the way, where I'm talking with Toran, that Codium, for example, would be one of the agents for Auto GPT.

Think about it AutoGPT is not, is there for any goal, like increase my net worth, though not focused as us on fixing or improving code. We might be another agent, by the way. We might also be, we're working on it as a plugin for ChatGPT. We're actually almost finished with it. So that's like I think how it's gonna be done.

Again, open opensource, not something we're thinking about. We wanted to be really good before we

Swyx: opensource it. That was all very impressive. Your vision is actually very encouraging as well, and I, I'm very excited to try it out myself. I'm just curious on the Israel side of things, right? Like you, you're visiting San Francisco for a two week trip for this special program you can tell us about. But also I think a lot of American developers have heard that, you know, Israel has a really good tech scene. Mostly it's just security startups. You know, I did some, I was in some special unit in the I D F and like, you know, I come out and like, I'm doing the same thing again, but like, you know, for enterprises but maybe just something like, describe for, for the rest of the world.

It's like, What is the Israeli tech scene like? What is this program that you're on and what should

Itamar: people know? So I think like Israel is the most condensed startup per capita. I think we're number one really? Or, or startup pair square meter. I think, I think we're number one as well because of these properties actually there is a very strong community and like everyone are around, like are [00:57:00] working in a.

An entrepreneur or working in a startup. And when you go to the bar or the coffee, you hear if it's 20, 21, people talking about secondary, if it's 2023 talking about like how amazing Geni is, but everyone are like whatever are around you are like in, in the scene. And, and that's like a lot of networking and data propagation, I think.

Somehow similar here to, to the Bay Area in San Francisco that it helps, right. So I think that's one of our strong points. You mentioned some others. I'm not saying that it doesn't help. Yes. And being in the like idf, the army, that age of 19, you go and start dealing with technology like very advanced one, that, that helps a lot.

And then going back to the community, there's this community like is all over the world. And for example, there is this program called Icon. It's basically Israelis and in the Valley created a program for Israelis from, from Israel to come and it's called Silicon Valley 1 0 1 to learn what's going on here.

Because with all the respect to the tech scene in Israel here, it's the, the real thing, right? So, so it's an non-profit organization by Israelis that moved here, that brings you and, and then brings people from a 16 D or, or Google or Navon or like. Amazing people from unicorns or, or up and coming startup or accelerator, and give you up-to-date talks and, and also connect you to relevant people.

And that's, that's why I'm here in addition to to, you know, to [00:58:30] me and, and participate in this amazing podcast, et cetera.

Swyx: Yeah. Oh, well, I, I think, I think there's a lot of exciting tech talent, you know, in, in Tel Aviv, and I, I'm, I'm glad that your offer is Israeli.

Itamar: I, I think one of thing I wanted to say, like yeah, of course, that because of what, what what we said security is, is a very strong scene, but a actually water purification agriculture attack, there's a awful other things like usually it's come from necessity.

Yeah. Like, we have big part of our company of our state is like a desert. So there's, there's other things like ai by the way is, is, is big also in Israel. Like, for example, I think there's an Israeli competitor to open ai. I'm not saying like it's as big, but it's ai 21, I think out of 10.

Yeah. Out. Oh yeah. 21. Is this really? Yeah. Out of 10 like most, mm-hmm. Profound research labs. Research lab is, for example, I, I love, I love their. Yeah. Yeah.

Swyx: I, I think we should try to talk to one of them. But yeah, when you and I met, we connected a little bit Singapore, you know, I was in the Singapore Army and Israeli army.

We do have a lot of connections between countries and small countries that don't have a lot of natural resources that have to make due in the world by figuring out some other services. I think the Singapore startup scene has not done as well as the Israeli startup scene. So I'm very interested in, in how small, small countries can have a world impact essentially.

Itamar: It's a question we're being asked a lot, like why, for example, let's go to the soft skills. I think like failing is a bad thing. Yeah. Like, okay. Like sometimes like VCs prefer to [01:00:00] put money on a, on an entrepreneur that failed in his first startup and actually succeeded because now that person is knowledgeable, what it mean to be, to fail and very hungry to, to succeed.

So I think like generally, like there's a few reason I think it's hard to put the finger exactly, but we talked about a few things. But one other thing I think like failing is not like, this is my fourth company. I did one as, it wasn't a startup, it was a company as a teenager. And then I had like my first startup, my second company that like, had a amazing run, but then very beautiful collapse.

And then like my third company, my second startup eventually exit successfully to, to Alibaba. So, so like, I think like it's there, there are a lot of trial and error, which is being appreciated, not like suppressed. I guess like that's one of the reason,

Alessio: wanna jump into lightning round?

Swyx: Yes. I think we send you into prep, but there's just three questions now.

We've, we've actually reduced it quite a bit, but you have it,

Alessio: so, and we can read them that you can take time and answer. You don't have to right away. First question, what is a already appin in AI that Utah would take much longer than an s

Itamar: Okay, so I have to, I hope it doesn't sound like arrogant, but I started coding AI BC before chatty.

Mm-hmm. And, and I was like going to like VCs and V P R and D is director, et cetera, and telling them, listen, we're gonna help with code logic testing and we're going to do that interactive conversation way. And they were like, no way. I even had like two saying, I won't let your silly AI get close to my code.[01:01:30]

That was bc ac. It's really different. And so like we kind of saw like it. Like if you played with G P T three, especially three and a half, whatever, like you felt working really well with instruction and conversation. So having said that, I think like still like Open Eye did amazing job, like building the product, like of course building the model, but that's forgiven.

Like they're the leaders, but did an amazing job building the product that's as accessible. And I think that was maybe a bit surprising. Like I think like many tried to do a chatbot or so with these GPTs, but they, since they're. Developing these, these models, they probably felt, and I think that's what happened, that it's not being used correctly.

So I think like the fact that they built actually the product, so well, that was maybe surprising for me. Again, I hope it doesn't sound too arrogant, but I I don't feel like there was a step function here. We might reach your point, but that's like, as we said, a different episode at inflection point and things were gonna be really surprising

Swyx: when the agents take over exploration.

So what do you think is the most interesting unsolved question in, in ai? Like, what would you re, what's an open question that you think, man, somebody should solve that?

Itamar: Okay, so here I am going to go to the Yes obvious answer. That's a AI alignment. Mm-hmm. Like, it's, it's a technical question. It's it's a philosophy question, et cetera.

It's, it's, it's not easy. Like it raises so many question even about ourself [01:03:00] as as human or we, like, I saw one tweet by someone that I'm thinking about like for a few years he wrote are we actually like LLMs, like in essence? So, so I think like we're trying to look into those LLMs for years. Like there, there was, like in 2014 there was already in the C N N, there was a few works.

Trying to visualize what, what are the, the feature detection, the feature, like what are the feature with the hidden layers that you see, like we're trying to work on it for years, lately, like a really long time ago, like five years, days ago or so, like, we saw work by open ai, like trying to turn, look on on different parts of Dell LM and trying to provide a natural language description for them.

So I think like this is very important. Very interesting tech-wise, philosophy wise, et cetera, that that's like, I think need to be explored more. And just one takeaway

Alessio: for all the listeners, like what's one message you want everyone to remember about ai? I, I

Itamar: would say, again, something might be a bit obvious, but I think right now what's happening is that we're actually true to this month's overestimating what gen AI can do overestimating, but we're underestimating what it can do in the future.

Okay. So why am I saying that? Because if you're a builder, I really encourage you, speak less and do more play with it. Try it for specific use cases and see what's easy to do. And then if your purpose is just like incorporating stuff and that's what you wanna do and [01:04:30] then do it, but don't like, tell everyone you're gonna do it before you do it, because you might find that it's actually really hard and there's a lot of problems.

It works amazing. Like it wowed you for two examples, but then for eight other examples that like works crappy data. I want, if you're building, you wanna build a startup. So find that case where you believe that you can think about a solution around LLMs or what it's going to be in in one or two years because you want to, what?

You wanna try to predict that and what's a challenging around it and do that through trying, trying, trying. Like for example, if you're really excited about auto G P T. Try to find five different cases that you, you managed to make it work for. Again, you might find you can't. I'm, I think that it's, it will do a lot and I think it was good that somebody brought these frameworks and now will try to jump, will progress with the levels that I talked about before.

So that, that's my like really like. If you think of idea first, try it. It's like easier than ever. Like there are so many, so many tools to, to try like, and that's one of the things that brought us to coding large language model as is do not work for verifying code logic. But we think there's, we see the path, how to combine with other technical elements and how AI's going to evolve that we can actually bring to fruition this, this idea, this notion of the dry concept that I mentioned.

Well,

Alessio: Edmar, thank you so much for coming on. This was great.

Itamar: Thank you for inviting me. It was a pleasure.[01:06:00]

Share this post