Brex’s AI Hail Mary — With CTO James Reggio

Inside Brex’s $500M+ (ARR) AI-Fueled Comeback

In 2024, there was a big question mark around the future of Brex as it cut 20% of staff amid stalled growth in an increasingly competitive space. Fast forward to 2025, and Brex has accomplished one of the most impressive turnarounds, accelerating sales and passing $500 million in annualized revenue with European expansion in sight, dispelling concerns of a dying business. Among the internal changes that led to this turnaround was an aggressive shift towards AI adoption and utilization in every aspect of Brex’s business.

In this episode, we sit down with Brex CTO James Reggio to discuss how Brex drove an aggressive shift towards a culture of AI fluency, how it modernized its tech stack, and how it applies AI agents to streamline its business.

Culture

Team

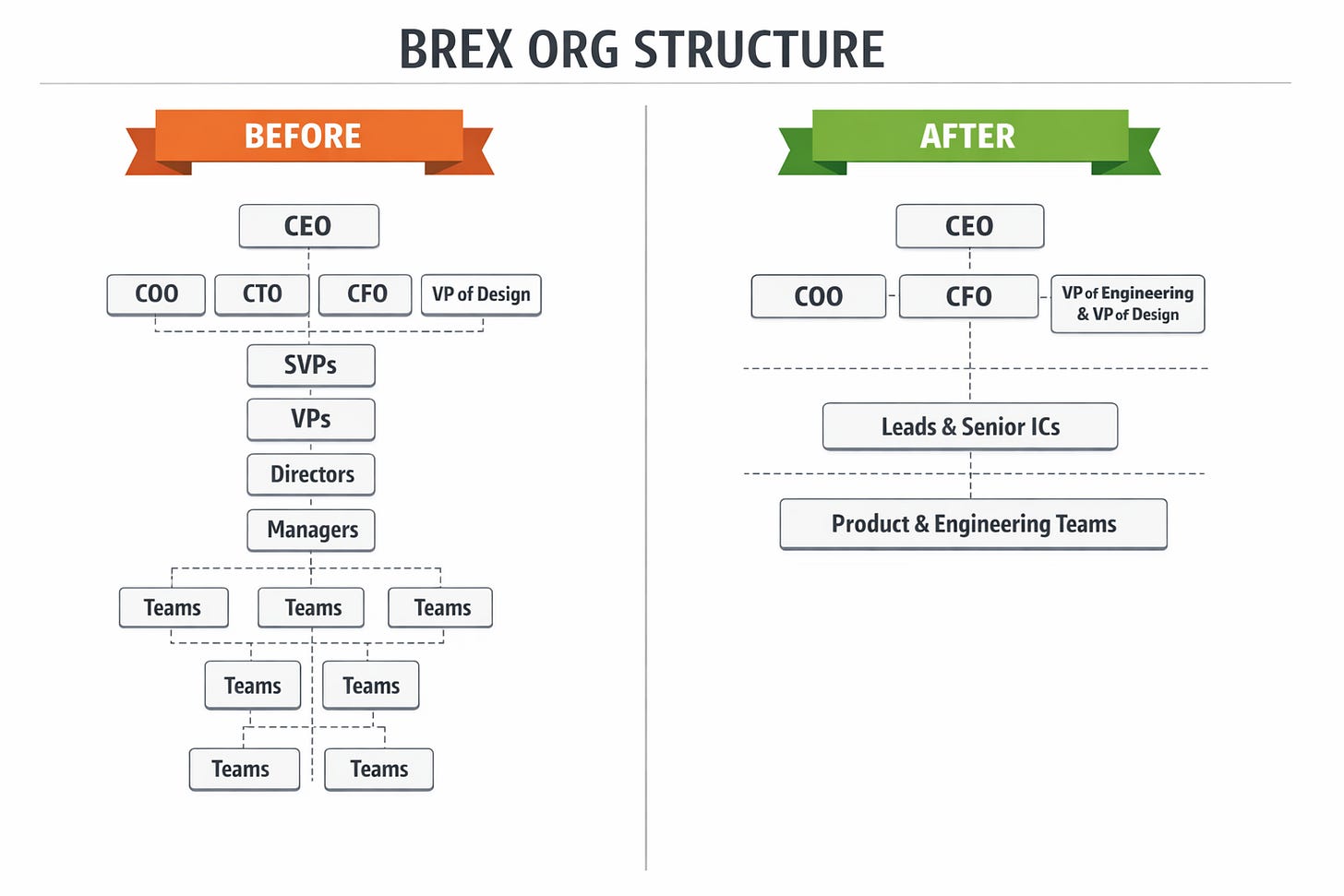

Heading into 2024, Brex showed the natural signs of a scaling startup: headcount kept increasing and org layers expanded to accommodate it. However, Brex realized that the rate at which it was scaling the company didn’t match its speed of execution. Addressing this scary truth head-on, Brex laid off 282 people (~20% of the company), flattened its org structure, and reduced layers of management with the goal of having leaders “operate closer to the metal” and be less siloed, with only a few important cross-company priorities.

Brex realized that speed of execution shouldn’t depend on increasing headcount. Even at scale, it could still operate like a high-velocity startup.

In March 2024, co-founder and CEO Pedro Franceschi described this as Brex 3.0: a new operating model designed to increase intensity and execution quality by making fewer bets, consolidating to one roadmap, and maintaining a release cadence. Brex doubled down on fostering a high-trust, high-performance team by promoting from within, bringing employees back to in-person work, and expecting leaders to operate at all levels.

Brex’s team now consists of ~300 engineers and ~350 across EPD (Engineering, Product, & Design). Their team is split across product domains in addition to infrastructure. Their core product domains are the following:

Corporate Card

Corporate Bank Account

Expense Management

Travel

Accounting

In addition to these product teams, Brex has formed smaller specialized teams, most recently a team of ~10 focused on LLM applications. This has been one of its key initiatives to keep up with and innovate on AI progress. The team was formed after Brex asked itself, “What would a company that was founded today to disrupt Brex look like?” and used the answer to shape the team and decide what it works on. The team is still very lean, pairing talented AI-native 20-year-olds who grew up with the tech with more experienced staff engineers who know Brex’s existing codebases, product, and customers deeply. It operates like a startup, working 996 (9AM to 9PM, 6 days a week) and growing as slowly as a pre-seed startup would (i.e. only hiring when absolutely necessary).

AI Adoption

This specialized team isn’t the only one using AI: Brex has engineering-wide adoption of AI tools and coding agents. Oddly enough, one of the top Cursor users is actually an engineering manager. Brex strongly encourages employees to use AI tools and software that will boost their performance.

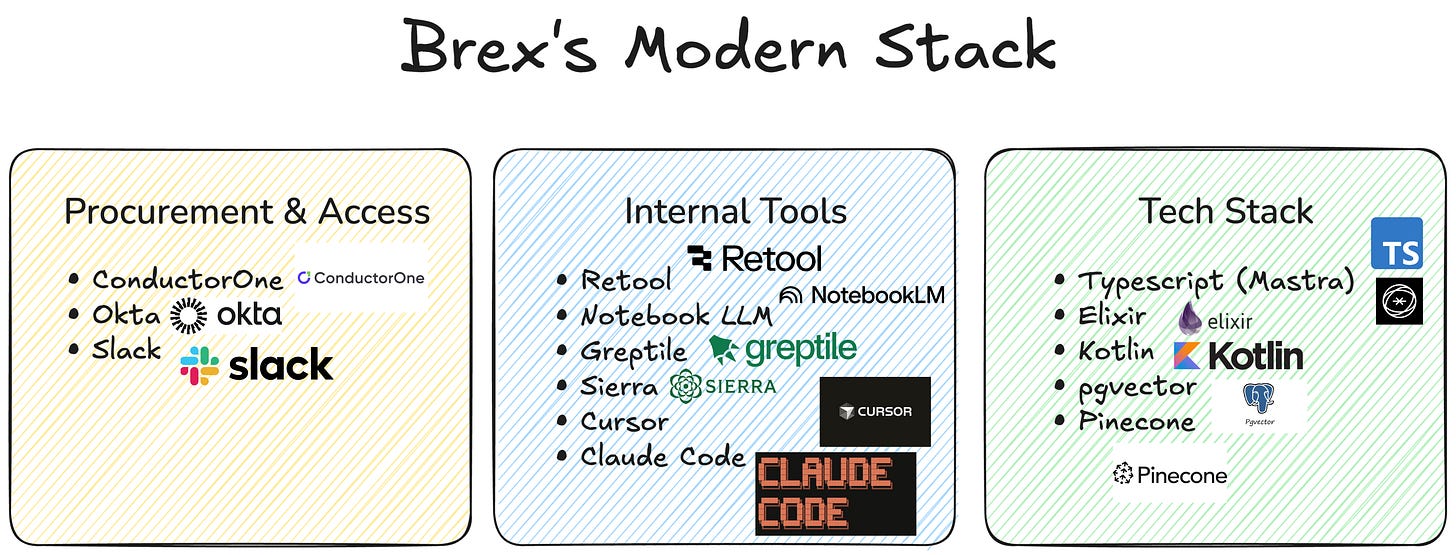

This sentiment is echoed by Brex’s bold decision not to pick winners among foundation model providers or agentic coding tools. Instead, Brex procures a small number of seats for multiple solutions and lets employees pick what they want to use through ConductorOne. For example, an employee can get ChatGPT, Claude, and Gemini licenses to build their own stack, or get credits to try Cursor, Windsurf, or Claude Code.

This push for AI adoption doesn’t stop at engineering. Camilla Matias, Brex’s COO, has pushed aggressively to help every member of the operations organization rethink their role as people who build prompts & evals, making the organization more AI native.

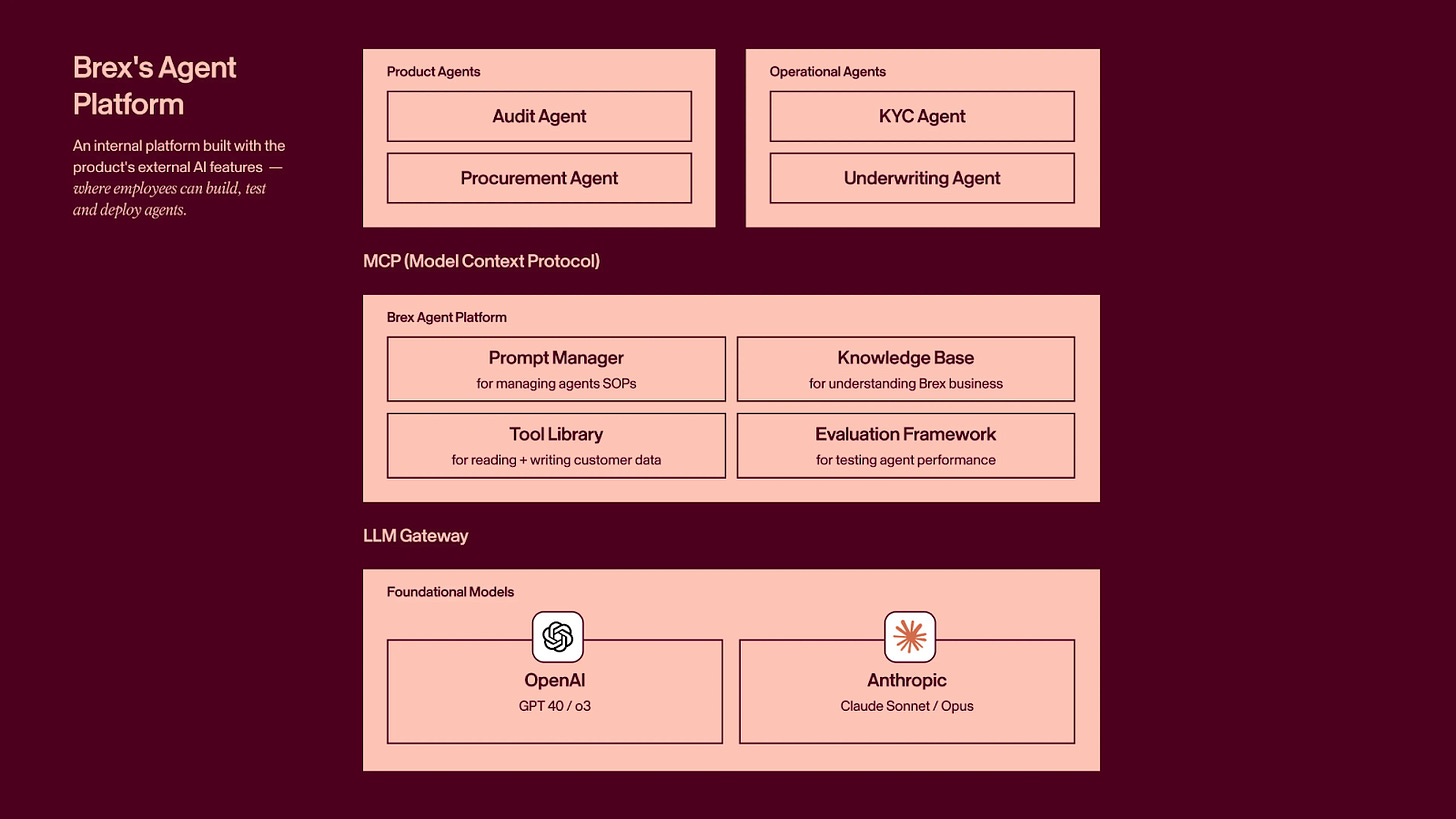

To make it even easier and accessible for non-technical employees, Brex’s Agent Platform is built in Retool which has enabled the operations org to do prompt refinement themselves instead of needing engineers to do it.

While many startups and larger companies discuss how to use AI internally, Brex actually executed on it by making the barrier to entry for any AI tool very low instead of sending a company wide email to “use more AI”.

AI Training & Fluency

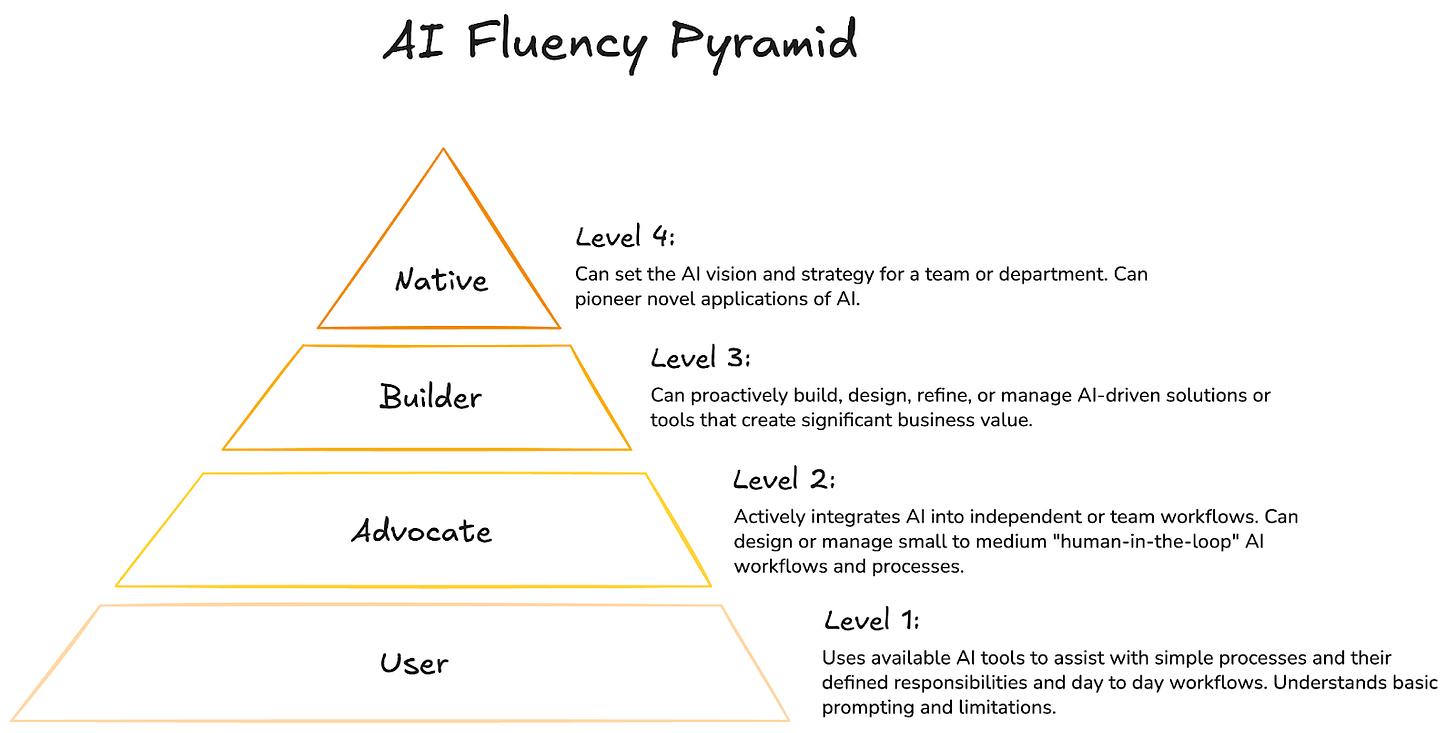

Brex has also implemented a tangible and definable approach to AI adoption with its org-wide AI training program and AI Fluency Levels.

At the end of the AI training program, every employee must place themselves into one of the four fluency levels, which then helps managers assign them to relevant projects that build their AI fluency. These fluency levels are also used in quarterly assessments to gauge progress. While this approach may sound excessive, it is not used as a metric to fire employees but rather as a tangible way to encourage them to use the powerful AI tools available today.

Brex’s success in upskilling its employees’ AI competence comes down to two things it executed very well: 1. Making it extremely easy for employees to use AI (e.g. the Retool-based agent platform) and to adopt AI tools (e.g. open access to any tool through ConductorOne). 2. Implementing a forcing function that aligns employee incentives (e.g. promotions and performance reviews) with AI fluency.

AI Fluency in Hiring

Even the hiring process has been adapted to align with Brex’s culture of AI fluency. Previously, Brex used straightforward coding & system design questions for potential hires, but that has been revamped into an on-site project that is impossible to finish without agentic coding. Candidates are then evaluated on their fluency and competence with AI tools, how they work, whether they understand the AI-generated code, etc.

Making Brex’s culture even clearer, when this new AI-native interview process was created, every employee in engineering, including managers, had to go through the interview. The goal wasn’t to fire anyone or keep score but to help employees realize how they could uplevel their skills using AI, which pushed the AI-fluent culture further.

By keeping a very high bar and holding everyone to the same standard, Brex ensures every employee is treated fairly without sacrificing talent density. New hires are held to the same standard as current employees, establishing a truly meritocratic culture.

“Quitters Welcome” Recruiting Initiative

Many Brex employees have gone on to start enduring companies. Surprisingly, Brex actually encourages and celebrates this, and has leaned into it more recently with its “Quitters Welcome” recruiting initiative, which focuses on attracting talent that wants to quit and start a company one day. Brex has had success hiring former and future founders and has doubled down, since many of its smaller, specialized teams, like the one focused on LLMs, operate like a startup. It hopes to be the best “founder school,” with the value proposition that future founders learn best by solving interesting problems with instant distribution under Brex. For example, you could build financial AI applications that are instantly deployed to roughly 40,000 customers, from the Fortune 100 to startups, shortening the feedback loop and letting you learn from high-quality customers.

Modernizing the Stack

Complementing Brex’s aggressive push towards AI fluency was a culture of trying and adopting the most modern tools and frameworks of the AI era. Both were critical to Brex’s turnaround and reinforced each other: AI fluency made it easier to adopt the best modern tools and frameworks, which in turn increased AI fluency.

But this modernization didn’t happen all at once. It started in January 2023, when the team built simple internal infrastructure, an LLM gateway, that made it possible to deploy, version, manage, and evaluate prompts, route requests to different models, and monitor usage and cost. As more models and technologies launched, Brex kept up with adoption, eventually building out an entire agent platform. That 2023 infrastructure, in its current form, still underpins the platform and powers many of Brex’s smaller, more targeted LLM applications.
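To make the idea of a prompt-managing gateway concrete, here is a minimal TypeScript sketch of versioned prompts routed to named models with a simple call log. It is an illustration under stated assumptions, not Brex’s implementation: `LlmGateway`, `deployPrompt`, `run`, and the `{{name}}` placeholder syntax are all hypothetical, and model calls are synchronous stubs where a real gateway would make asynchronous network requests.

```typescript
// Hypothetical sketch: every name here is illustrative, not Brex's actual gateway.
// A "model" is a synchronous stub; a real client would be async.
type ModelCall = (prompt: string) => string;

interface PromptVersion {
  version: number;
  template: string; // uses {{name}} placeholders
  model: string;    // routing key for the model that serves this version
}

interface CallRecord {
  promptId: string;
  version: number;
  model: string;
}

class LlmGateway {
  // Object.create(null) avoids collisions with inherited keys such as "constructor".
  private prompts: Record<string, PromptVersion[]> = Object.create(null);
  private models: Record<string, ModelCall> = Object.create(null);
  readonly callLog: CallRecord[] = [];

  registerModel(name: string, call: ModelCall): void {
    this.models[name] = call;
  }

  // Deploying appends a new version; earlier versions stay addressable.
  deployPrompt(id: string, template: string, model: string): number {
    const versions = this.prompts[id] ?? [];
    const version = versions.length + 1;
    versions.push({ version, template, model });
    this.prompts[id] = versions;
    return version;
  }

  // Runs the latest version unless a specific version is pinned.
  run(id: string, vars: Record<string, string>, pinnedVersion?: number): string {
    const versions = this.prompts[id] ?? [];
    // Versions are numbered from 1, so version n lives at index n - 1.
    const chosen = versions[(pinnedVersion ?? versions.length) - 1];
    if (!chosen) throw new Error(`no matching version of prompt "${id}"`);
    const call = this.models[chosen.model];
    if (!call) throw new Error(`no model registered as "${chosen.model}"`);
    const rendered = chosen.template.replace(
      /\{\{(\w+)\}\}/g,
      (_match: string, key: string) => vars[key] ?? "",
    );
    // The log is a stand-in for observability and cost monitoring.
    this.callLog.push({ promptId: id, version: chosen.version, model: chosen.model });
    return call(rendered);
  }
}
```

Keeping old versions addressable is what makes evaluation practical in a setup like this: the same inputs can be run against a pinned version and the latest one, and the outputs compared.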

Agent Platform

In fall 2025, Brex introduced finance agents that learn, reason, and act on your behalf as part of its Agent Platform.

Below are some tangible ways Brex’s Agent Platform has streamlined operations:

Automating the pipeline for evaluating customer applications so that qualified customers are onboarded instantly.

Previously, human intervention was required for underwriting or KYC (Know Your Customer), but now a series of agents, particularly research agents, handles the research and then does the work humans would normally do to instantly onboard qualified customers.

Maintaining thorough, up-to-date, and accurate knowledge about Brex’s business.

Previously, a big challenge Brex faced was outdated knowledge being baked into models during pre-training, which led to very costly hallucinations and incorrect information that polluted context for agents. As a result, Brex decided to maintain its own knowledge base so that every detail about the business is up-to-date and correct.

Evaluations

To ensure the quality of its agents and to prevent regressions during future updates, Brex implemented multi-turn evals in which an AI agent embodies the end user and is given an objective to accomplish. Sometimes the team also pre-sets an initial preamble to the conversation (i.e. the first couple of turns are hand-written) before starting the eval, in order to isolate certain behaviors and make the eval a bit more deterministic.
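A multi-turn eval of this kind can be sketched as a loop between a simulated user and the agent under test, seeded with a hand-written preamble. The TypeScript below is a minimal illustration under assumptions, not Brex’s harness: `Responder`, `EvalCase`, and `runMultiTurnEval` are hypothetical names, both parties are plain synchronous functions (in practice each would wrap an LLM call), and success is judged by a caller-supplied predicate.

```typescript
// Hypothetical sketch of a multi-turn eval loop; all names are illustrative.
type Role = "user" | "assistant";

interface Turn {
  role: Role;
  content: string;
}

// Both the agent under test and the simulated user map a transcript to the next message.
type Responder = (history: Turn[]) => string;

interface EvalCase {
  objective: string;  // what the simulated user is trying to accomplish
  preamble: Turn[];   // hand-written opening turns that fix the starting state
  maxTurns: number;   // upper bound on agent replies
  isSatisfied: (history: Turn[]) => boolean;
}

interface EvalResult {
  passed: boolean;
  turnsUsed: number;
  transcript: Turn[];
}

function runMultiTurnEval(agent: Responder, simulatedUser: Responder, testCase: EvalCase): EvalResult {
  const history: Turn[] = testCase.preamble.slice(); // copy so the case stays reusable
  let turnsUsed = 0;
  while (turnsUsed < testCase.maxTurns) {
    const last = history[history.length - 1];
    // The simulated user speaks first unless the preamble already ends on a user turn.
    if (!last || last.role === "assistant") {
      history.push({ role: "user", content: simulatedUser(history) });
    }
    history.push({ role: "assistant", content: agent(history) });
    turnsUsed++;
    if (testCase.isSatisfied(history)) {
      return { passed: true, turnsUsed, transcript: history };
    }
  }
  return { passed: false, turnsUsed, transcript: history };
}
```

Because the preamble fixes the opening turns, two runs of the same case start from an identical state, which is what makes a targeted behavior easier to isolate.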

Here are some modern technologies and tools Brex uses:

Mastra

Surprisingly, Brex’s AI agents are built with TypeScript and the Mastra framework, using pgvector & Pinecone as the data stores. The path to Mastra goes back 3 years, when Brex built its own internal LLM framework after evaluating LangChain and finding it lacked support for key use cases. Hoping to eventually retire that framework, which needed constant maintenance, Brex was drawn to Mastra because its ergonomics were very similar to those of the in-house framework, and because it fit a key motivating question: “What languages and technology would we use if we started Brex today?” Brex’s existing backend code is still written in either Elixir or Kotlin, but the team is always reevaluating framework and tech choices because the half-life of code has declined so much with agentic coding.

Greptile

Another modern tool in Brex’s stack is the AI code review tool Greptile. According to James, what is most impressive about Greptile is the signal-to-noise ratio of the comments it leaves on a PR: “I never regret going through all 65 comments [Greptile] leaves on my diffs because it catches so many things.”

Sierra

When deciding whether to build or buy, Brex focuses on whether the solution is only possible with the context they have or can be built by others. When it came to CX (customer experience), Sierra already had alignment with Brex’s primary concern: having a UI/UX (i.e. low-code & more workflow oriented) that makes it extremely easy and accessible for operations and CX strategy teams to administer agents.

Brex’s Job to Be Done

Brex primarily serves two groups of users:

Finance teams: they interact with agents that are very specific to their roles

Employees of companies that use Brex: the goal is for Brex to disappear for employees (i.e. the only thing they have to do is use the card)

Brex believes this problem is best solved by giving every employee one or more AI agents that embody an executive assistant. In the same way that an EA has all the context and can take relevant and correct actions on your behalf, Brex wants AI agents to do that for every employee at the companies it serves.

Brex also views their AI Strategy through 3 Pillars:

Corporate AI Strategy: How are we going to adopt and buy AI tooling across the business and in every single function to 10x workflows

Operational AI Strategy: How are we going to buy and build solutions that enable Brex to lower their cost of operations as a financial institution (i.e. fraud detection, handle disputes, etc.)

Product AI Strategy: How are we going to introduce new features that enable Brex to be part of the Corporate AI strategy for our customers. We want to be a solution where other companies are saying they adopted Brex for their corporate AI strategy

Initial Faulty Approaches

Brex’s initial attempt at building an EA for every employee was the naive approach: a single agent with a variety of tools, using RAG to fetch the appropriate context.

It soon became apparent that the wide range of Brex’s product lines made it extremely difficult for one agent to perform well when being responsible for everything from expense management to finding travel to answering procurement questions.

Other initial attempts that didn’t work were overloading the agent with tools and context switching (i.e. “this conversation seems more about X topic, so let’s update the prompt to have the relevant topic in context”).

Without these failed attempts, Brex would not have arrived at its fleshed-out final approach of using subagents. In an era when technology and innovation move very fast, the best way to discover the right approach is to try many hypotheses and learn why each one doesn’t work.

Final Successful Approach

Brex eventually broke the problem down to using many subagents that sit behind an orchestrator. They built this orchestrator themselves since there are no great solutions on the market right now.

What Brex realized was that there is significant value in multi-turn conversations between the orchestrator, assistant, and sub-agents vs. a single tool call. The mental model is an agent org chart: sub-agents are specialists that converse with each other, so if more context is required, they pass the request along and then ask the user, much like a human org chart would work.
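The difference between a single tool call and a multi-turn exchange can be sketched in a few lines of TypeScript. This is a simplified illustration under assumptions, not Brex’s orchestrator: `SubAgent`, `SubAgentReply`, and `orchestrate` are hypothetical names, routing is a plain `canHandle` check, and a specialist that lacks context hands a question back up so the orchestrator can ask the user and retry.

```typescript
// Hypothetical sketch of an orchestrator in front of specialist sub-agents.
// A sub-agent either answers or asks for a missing piece of context.
type SubAgentReply =
  | { kind: "answer"; text: string }
  | { kind: "needs_context"; key: string; question: string };

interface SubAgent {
  name: string;
  canHandle: (request: string) => boolean;
  handle: (request: string, context: Record<string, string>) => SubAgentReply;
}

// Stand-in for the channel back to the human (chat, SMS, etc.).
type AskUser = (question: string) => string;

function orchestrate(
  agents: SubAgent[],
  askUser: AskUser,
  request: string,
  maxRounds: number = 5,
): string {
  // Route to the first specialist that claims the request.
  const specialist = agents.filter((a) => a.canHandle(request))[0];
  if (!specialist) return "No specialist is available for this request.";

  const context: Record<string, string> = {};
  // Multi-turn loop: keep conversing with the specialist until it can answer.
  for (let round = 0; round < maxRounds; round++) {
    const reply = specialist.handle(request, context);
    if (reply.kind === "answer") return reply.text;
    // The specialist passed a question up; relay it to the user and retry.
    context[reply.key] = askUser(reply.question);
  }
  return `${specialist.name} could not finish within ${maxRounds} rounds.`;
}
```

With a single tool call, the travel specialist would have to succeed or fail in one shot. Here it can come back with “Which dates?” and continue once the answer arrives, which is the org-chart behavior described above.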

Something That Didn’t Work

Among the many investments and experiments Brex has tried, one expensive initiative that didn’t work at all was using RL for credit decisions & underwriting. Initially, Brex thought that it would be the proper approach to building a model that effectively decides like a human underwriter, but after working with outside experts and investing significant time, the end performance was actually inferior to a simple web research agent.

What Brex realized was that in operations, the thing that matters most is being able to granularly break down problems and form SOPs (Standard Operating Procedures) that humans can repeatedly follow. These repeatable processes can then be audited and done in a compliant manner. Since then, Brex has seen great success using simpler approaches with LLMs for many ops use cases but wouldn’t have known their effectiveness without trying alternative approaches.

Links to James & Brex

X: https://x.com/jamesreggio

LinkedIn: https://www.linkedin.com/in/jamesreggio

Company Website: https://www.brex.com

Full Episode on YouTube

Timestamps

00:00:00 Introduction

00:01:24 From Mobile Engineer to CTO: The Founder's Path

00:03:00 Quitters Welcome: Building a Founder-Friendly Culture

00:05:13 The AI Team Structure: 10-Person Startup Within Brex

00:11:55 Building the Brex Agent Platform: Multi-Agent Networks

00:13:45 Tech Stack Decisions: TypeScript, Mastra, and MCP

00:16:40 The Brex Assistant: Executive Assistant for Every Employee

00:24:32 Operational AI: Automating Underwriting, KYC, and Fraud

00:37:11 Agentic Coding Adoption: Cursor, Windsurf, and the Engineering Interview

00:40:26 Evaluation Strategy: From Simple SOPs to Multi-Turn Evals

00:58:51 AI Fluency Levels: From User to Native

01:03:33 The Future of Engineering Headcount and AI Leverage

01:09:14 The Audit Agent Network: Finance Team Agents in Action

Transcript

swyx/Alessio [00:00:05]: Hey everyone, welcome to the Latent Space podcast. This is Alessio, founder of Kernel Labs, and I’m joined by Swyx, editor of Latent Space. Hey, hey, hey, and we’re here with James Reggio, CTO at Brex. Welcome.

James [00:00:15]: Hey, thank you for having me. Thanks for visiting from up in Seattle, where I’ve been a little bit. It’s cold up there, huh? Yeah, and we have an atmospheric river hitting the city right now, so a lot of blowing. Yeah, well, yeah, we’re getting the full-on winter effect right now. Well, you’re here to talk about the sort of AI transformation within Brex. There’s a lot of interesting tidbits that we’re going to draw from your article, but also your background. You’ve got a wide array of experience from Stripe to Banter to Convoy, and I think also mostly I’m interested in your journey as one of the rare people that have transitioned from like a mobile engineering leader to a CTO, which I think is also a bit more rare. I used to have this comment in the past where there’s a career ceiling. There are people who work on client-only things where usually they don’t hit CTO, whereas they typically promote the back-end people, the back-end clouding for people to CTO. Yeah, you know, it’s something that I hear fairly frequently because there aren’t that many folks with a front-end background to reach this level of leadership. And it’s exciting for me to be able to represent that group. But I’ll say that even though my resume kind of reflects that I’ve been more on the front end of things, it’s probably more my experience as a founder a couple of times over that. Yeah. I think that actually helped me get to this level of my career, working for somebody else, becoming CTO is very much like a leadership and like general business role as much as it is a technical role. And so I think it was more the skills that I built from starting companies and trying to build those up made me a decent fit and enabled me to get the nod from Pedro to take this on as my predecessor left about two years ago. Yeah. One thing I’m curious, you guys’ commentary, this is a little bit broad, unscheduled, but a lot of startups are bragging about how many ex-founders they have.

swyx/Alessio [00:01:58]: And yes, to some extent you want people with the founder mentality and agency, which is what you did to be your employees and to take initiative in the company, but also I wonder if it’s becoming anti-signal sometimes, I don’t know if you’ve thought about this. I think it’s more about the turn for me, especially when people are hiring ex-founders, it’s like, if you’re truly of the founder gene, it’s kind of hard to just stay somewhere as like an IC for too long. And then it’s like, all right, I joined this thing and then in one year, I’m like, okay, I’m going to do this. I’m back to being a founder. I’m curious for you. Yeah. What was your, I’m sure you thought about leaving and like doing another company and stuff.

James [00:02:34]: In fact, that was, that was the alternative. I was considering even at the time that I got the phone call where they made me the offer to become CTO, I was thinking about leaving to go start a company. And, you know, I think what’s interesting about it, we actually launched sort of like a new recruiting and employee value proposition for Brex a couple months ago called Quitter’s Welcome, where we actually intentionally are leaning into this idea that we have a disproportionate number of folks who go on to become founders or like heads of a department when they leave our company and we celebrate that it’s actually something that I’m very proud of and that means that like we, we welcome in people who want to get a different experience. I think that there’s certainly like a lot of founders who don’t make it, don’t scale their own businesses to this, to the scale that we’ve achieved at Brex, so there’s something to be learned when they come in, and then we’re very happy to like support people on their way out. And so I actually really like hiring former founders. The one value proposition I find that’s most relevant, because a lot of the folks we’re hiring as AI engineers are kind of folks that are either like winding down their companies or considering maybe running AI startup. The thing that resonates the most with them is that we oftentimes can give them problems to solve that are interesting, problems that maybe they even want to want to like build their own startup around, but with instant distribution, right? Like that is the allure is it’s like you can come into this business and build like financial AI applications. And instantly have that deployed to roughly 40,000 customers across, you know, the Fortune 100 down to, you know, tens of thousands of startups. So that’s what is, I think, appealing to founders.

swyx/Alessio [00:04:09]: But the challenge then is making sure that we set them up for success in an environment that still feels a little bit like the startup that they might build themselves versus like something that’s too corporate. Yeah, instead of doing your own company and then coming to you and be like, can I integrate into Brex? Yeah, get all the data. Yeah, exactly. How’s the engineering team structure?

James [00:04:28]: Yeah, so we have about 300 people in engineering, like 350 total across EPD. And for the most part, we structure around our product domains. And so this means that Brex is a corporate card. It’s also a corporate bank account, expense management, travel and accounting. And so we actually have sort of full stack product domains that are roughly like 30, 40 people for each of those that have everything from like the low level infrastructure up to the web and mobile experiences. That’s generally the structure of our engineering organization. And then we have naturally an organization that focuses on infrastructure, security, IT. And then there are two additional centers of excellence that we’ve built that violate that org design, where we’ve felt the need to put more focus or operate slightly differently. And AI is one of those areas where we have another team of just roughly about 10 people who are focused primarily on LLM applications. And we wanted to create a bit of a separation there because the way that we were thinking about this, and this is actually something we did this summer, is we paused and asked ourselves on our AI journey towards infusing our product with AI and generating customer value. We asked ourselves, what would a company that was founded today to disrupt Brex look like? And then we tried to... To basically use the answer to that question to form this team internally. So it’s a little bit off to the side. Ideally, everybody kind of comes up to speed and contributes, you know, LLM features, but we have this sort of off on the side right now in a centralized manner.

swyx/Alessio [00:06:05]: What’s the difference in AI adoption for those teams? So like, are the people on the LLM team like much bigger Cursor users, Claude Code users, or like, do you see similar diffusion?

James [00:06:15]: It’s actually fairly uniform across the entire engineering department. And it’s actually kinda funny, like one of our largest cursor users is actually an engineering manager. So like, and I think that this also just like speaks to our core value of operate at all levels where we want all of our EMs and everybody in leadership to still basically do the job that they’re managing, manage the work. So it actually is, I think the journey of getting everybody into using agentic coding was not sort of exclusive to the AI group. Yeah. In fact, I think this podcast was actually a really good justification for us. I actually set up because I called outreach to Pedro because he tweeted this. I assume this is the center of Rex’s. He says, I started a new company inside Brex to build the future of agentic finance. No BS, just building 996 and pushing production grade agents to 30,000 finance teams, now 40,000. And then he actually has like a little job description, which I think is really interesting. I’ll skip that and go straight to Brex Accelerator, grow 5x and cut burn 99% in the past 18 months. I assume that’s a mix of internal AI automation and other stuff. But basically, I wanted to put some headline numbers up front to impress people before we dig into the details. Yeah, absolutely. And you’re correct. That’s the team that we have, this AI team. What was that? Very young team. Yeah, it’s very young. I mean, it’s been really interesting. The composition of the team is very young, AI native, 20-year-olds who basically grew up with the tech, kind of paired off with more like staff level software engineers that have been at Brex for a little while, who can kind of navigate like the existing code bases and like understand, the product and the customer deeply. Like we’ve formed these really a couple of tight, tight knit pods in the AI org where it’s like three people. 
Generally somebody who has like more of a product, a customer focused background, that like staff engineer who knows where the skeletons are and then like a much younger, like AI native engineer who can just do things with, with agents that like the rest of us dinosaurs maybe don’t, don’t, can’t either dream of or like, or where are, I think, I think part of it is like sometimes the, the too much experience or too much knowledge of how to solve a problem and actually be an impediment to thinking differently about it and thinking about it from like an AI first lens. But yes, we, we’ve been, we’ve been slowly growing that team just in the same way that like a pre-seed startup, you want to be very, very careful about talent density and like very deliberate, like only hire when you absolutely need it. And so, yeah, at this point it’s just about 10 people. And I think it was probably four or five people. Uh, I think everybody was actually in the photo that was attached to that tweet, uh, when Pedro put that out a couple months ago. Yeah, we’ll put it up. Yeah. Yeah. Yeah. It’s a photo at 1.20 AM in a, on a Friday. Yes.

swyx [00:08:53]: Oh yeah. Yeah.

James [00:08:54]: Cause we, we always do, uh, we always do like Friday, Friday demos and, and like, that’s a time for everybody to get like kind of exec review time. And so, uh, Everybody’s in Seattle? Um, those folks were all in Seattle, uh, but they’re actually geo, geographically distributed. Uh, we have a couple of folks here, a couple in Sao Paulo, a couple in Seattle. Hmm.

swyx/Alessio [00:09:11]: How, at Decibel we have this like AI center of excellence, which are basically the people running these teams across companies. Yep. How do you make the other engineers not feel like they’re not special? I think that’s something that I hear a lot. It’s like, Hey, you know, why aren’t these people working on all the cool LLM things? And like, I’m stuck working on, you know, the KYC integration with whatever, you know what I mean? It’s like, how do you build that culture?

James [00:09:32]: You know, it’s interesting. I, I thought that that would be more of a problem, but the benefit of having really optimized our engineering culture around business impact actually causes it to cut in the other direction where for folks, some folks don’t want to work on the AI products because it doesn’t have as much clear, direct, like business impact. Right now, it doesn’t, it doesn’t impact revenues directly. And so I, uh, I think folks for the most part, uh, we’ve, we’ve enabled folks who have a strong desire to work on, on, um, AI products to, to join that team. Like somebody, somebody transferred out of our expense management organization to come over there because they’re really passionate about taking like their knowledge of like policy evaluation and bringing it into the, the AI, uh, uh, team. But for the most part, I think everybody understands like how their work, uh, ladders up and maybe there’s some like friendly rivalry because like the folks who. We say we’re kind of card product, they, they drive 60% of our direct revenue. And so they, now they’re pretty happy with that. And, uh, and they don’t feel like they’re being left out. Uh, and I will also say, um, as you probably saw in this, this piece that we, we, uh, put out with, uh, first round, there is a lot of smaller applications of LLMs peppered throughout all of our product and operations teams. It’s just some of the more novel, like agentic layer that sits on top of Brex that has been put together, like in this, in this sort of isolated team. So it’s not like folks aren’t getting to, to build with LLMs or use LLMs on a daily basis. Yeah.

swyx/Alessio [00:10:55]: Maybe you run people through the Brex agent platform. We’ll put the diagram in the video where you had the LLM gateway, you know, like the whole MCP layer, which you said, David, the creator of MCP right before you. So this is very timely. Um, yeah. How did you start building that? What’s the architecture?

James [00:11:09]: Yeah, the architecture, you know, I, I think simple is, uh, is elegant and we we’ve had basically an LLM gateway and, and, uh, a basic hand rolled platform, uh, from the very early days. In fact, right before being tapped to become CTO, I was leading, uh, like a AI, uh, labs team internally, uh, in the wake of like the announcement of ChatGPT, you know, everybody saw this new technology and said, Hey, what are we going to do with it? And so one of the first things that we did, um, I think January, 2023, that would have been, uh, was try to put together some internal infrastructure that made it possible for us to deploy, manage, version, and eval prompts, uh, and then be able to manage, uh, like data egress and model routing and, uh, have some very basic, like observability and cost monitoring, uh, in an LLM gateway. So that’s, that’s infrastructure that we stood up and it still continues to power a lot of those smaller, uh, more, let’s say like precise applications of LLMs. So like for instance, we’ve, uh, we set up a completely automated, uh, pipeline for, um, evaluating, uh, customer applications to get them onboarded instantly to Brex, which is something that used to require, um, human intervention either for underwriting or KYC. But now we basically have a series of, of agents and, um, and particularly like research agents that will go and do the work that humans would normally do. And so that’s running on top of this, uh, this hand-rolled, uh, framework. And then for the agents on Brex that we announced in our fall release, which is like this agentic layer that we’re building that sort of sits on top of Brex and can embody workflows that a finance team would normally, uh, hire humans for. We’ve actually, uh, started using Mastra for that as like the kind of primary framework for, for accelerating us. We actually have built everything in TypeScript, um, which is another like technology choice. 
That’s, uh, that answers the question of like, what would we do if we started Brex today, but isn’t the case for all of our existing backend code, which is either Kotlin or Elixir. And then we have, uh, we have a mix of pgvector and Pinecone. And, uh, like, I think what we’ve seen is we’re always, we’re always reevaluating the tech and framework choices as we go, uh, because the half-life of code has declined so significantly with agentic coding. It’s actually quite, uh, easy or awesome for anyone else to, to kind of try on for size, a variety of different pieces of tech, to, to figure out what is going to be most ergonomic for solving the problem. So Mastra, a new choice and an interesting one. Yeah. I mean, I think that the main, the main reason that we adopted Mastra is that it provided the ergonomics that we were actually, uh, that the ergonomics of Mastra are quite similar to the internal, um, LLM framework.

James [00:14:15]: So, I’m trying to remember, because this is now ancient history. We evaluated LangChain, turned off of it, and built our own thing. And then we kind of wanted to deprecate this internal framework that we built because, at the end of the day, it’s not leveraged for us to maintain that. And Mastra ended up fitting the bill for the feature set that we were looking for. And I think what’s been interesting is about half of the applications that we’re building right now on the agent layer are running on Mastra. And then the other half are actually still running on yet another internally developed framework, which is focused more on networks of agents. So multi-agent orchestration versus more strict single-turn calls or workflows, which are easier to build with either LangGraph or Mastra. Tell us about your multi-agent framework. What are the design considerations, and why is this the first we’re hearing about it? Yeah, yeah. So it’s funny. A big reason why we haven’t written more about this is that it continues to evolve quite a bit. We actually had a blog post that we were going to put out in conjunction with the fall release talking about how we built this. And by the time that we finished the blog post and had the whole package ready, it was already halfway outdated. The way that this multi-agent network approach started to emerge is that we were trying to scale up our consumer-grade Brex assistant. So if you think about Brex and our customers, there are really two very broad personas that we serve. We serve members of a finance team, who are generally going to be in roles like accountant or controller or head of T&E.
For those folks, they are going to be interacting with agents that are much more specific to their roles. But then the other broad cohort of users we have are employees of the companies that have deployed Brex. So, you know, you go join a new company, that company uses Brex, you get your Brex card. And our goal for employees is for Brex to completely disappear. The best UI/UX for Brex is just the card. Every single thing that you have to do in the software beyond just swiping the card is an opportunity for AI to eliminate some work for you. I have an EA, and she knows enough about me. She has access to my calendar and my email, and has all the context on when I’m traveling and for what business purposes. And so she’s basically able to do everything that I would be obligated to do in Brex, be it booking travel or doing expense documentation. And so what we wanted to do is build that EA, connected to the same data sources, and see if we couldn’t simulate that behavior so that your interface to Brex is basically SMS and the card. And when we started building that out, the most naive architecture for that would be to have an agent with a variety of tools, and maybe do some RAG to ensure that it has appropriate context for the conversation. But what we were finding is that the wide range of different product lines that exist on Brex made it difficult for one agent to perform well while being responsible for everything from expense management to finding and booking travel to answering policy and procurement questions. And so that’s when we started breaking down the problem into a variety of sub-agents that sit behind an orchestrator.
And obviously, this is something that can be implemented using LangGraph, and Mastra even has a notion of these as networks. But what we found is that it was easier for us, when it came to being able to build evals for the system, to just hit the eject button and build our own framework, which is one in which we have agents that are able to basically DM other agents and have multi-turn conversations amongst themselves to coordinate to complete an objective. And what’s been nice about that is it means that your Brex assistant is one single point of contact between you as an employee and the Brex product. And then behind your assistant, if the company has expense management turned on, you have an agent for that; if they have reimbursements, there’s another agent for that; and likewise if they have travel. Our conception here is that it’s generally software encapsulation patterns projected into the agent space. It also makes it easier for us to have the team that owns and understands travel be the ones to go and iterate on that without needing to worry about regressing the total system, or needing one team to own every single possible action you could take as an employee. And I’ll say that I’m still of the mindset that somebody will build a great framework and we’ll ultimately migrate to it, or it might be us that ultimately open sources this, right? But for us, this has worked out quite well.
And there were a couple of other approaches that we tried along the way that just didn’t perform well. One was to overload the agent with a variety of tools. Another was a kind of context switching, where we’d say, oh, this conversation looks like it’s more about reimbursement, so let’s update the prompt with more reimbursement context. That approach didn’t perform as well as actually having a reimbursement agent that the assistant would collaborate with.

swyx/Alessio [00:19:46]: What about MCPs as sub-agents? Oh, yeah. That’s another pattern.

James [00:19:50]: The key thing there is that there’s actually a lot of value in having multi-turn conversations from the orchestrator or the assistant to the sub-agent, whereas a tool call is basically just one RPC. And so oftentimes what will happen is, let’s say the user reaches out to their Brex assistant and says, hey, how much am I allowed to expense per person for dinner tonight? I’m taking my team out. And your assistant’s going to then reach out to the policy agent. Maybe the policy agent, in order to answer that question, needs to know whether this was a customer event, a team event, or whether you’re traveling. It can’t just answer the question, so it’s going to reply back to the assistant and say, hey, I need you to ask this clarifying question. And so then the assistant will return to the user, ask the clarifying question, and they’ll basically have this multi-turn conversation across multiple agents versus it just being encapsulated in a single call-and-response tool call. All the sub-agents still have a ton of tools, but I think of MCP and tool usage as being the interface to all of our conventional imperative systems, not the AI space.
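The dinner-policy exchange James describes can be sketched in a few lines of TypeScript. This is an illustration of the pattern only, not Brex’s framework: the `SubAgent` type, `runAssistant`, the keyword matching, and the dollar limits are all made up for the example, and the calls are synchronous for brevity where a real system would be asynchronous and LLM-backed. The point it shows is that the sub-agent can reply with a question instead of an answer, and the assistant relays that question to the user and continues the same thread.

```typescript
// Hypothetical sketch: an assistant relays a multi-turn exchange between a
// user and a policy sub-agent. Names, limits, and matching are illustrative.

type Message = { from: "assistant" | "policy"; text: string };

type AgentReply =
  | { kind: "answer"; text: string }
  | { kind: "clarify"; question: string };

// A sub-agent sees the whole thread so far and either answers or asks back.
type SubAgent = (thread: Message[]) => AgentReply;

const policyAgent: SubAgent = (thread) => {
  // Only consider what the assistant relayed, not the agent's own questions.
  const context = thread
    .filter((m) => m.from === "assistant")
    .map((m) => m.text.toLowerCase())
    .join(" ");
  if (context.includes("customer event")) {
    return { kind: "answer", text: "Up to $150 per person." };
  }
  if (context.includes("team event")) {
    return { kind: "answer", text: "Up to $75 per person." };
  }
  return { kind: "clarify", question: "Is this a customer event or a team event?" };
};

function runAssistant(
  userRequest: string,
  subAgent: SubAgent,
  askUser: (question: string) => string,
  maxTurns = 3,
): { answer: string; thread: Message[] } {
  const thread: Message[] = [{ from: "assistant", text: userRequest }];
  for (let turn = 0; turn < maxTurns; turn++) {
    const reply = subAgent(thread);
    if (reply.kind === "answer") {
      thread.push({ from: "policy", text: reply.text });
      return { answer: reply.text, thread };
    }
    // The sub-agent needs more information: relay its question to the user
    // and append the user's response to the same conversation.
    thread.push({ from: "policy", text: reply.question });
    thread.push({ from: "assistant", text: askUser(reply.question) });
  }
  throw new Error("sub-agent did not reach an answer within maxTurns");
}
```

A plain tool call would correspond to invoking `policyAgent` once and taking whatever comes back. The loop and the shared `thread` are what turn it into the multi-turn conversation described above, and the `maxTurns` cap is an assumption added here so the exchange cannot run indefinitely.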

swyx/Alessio [00:21:07]: Yeah, that’s the conversation we were having earlier, whether or not it should be an agent-to-agent call as well. Yeah. Or like, yeah, there should be like a chatback.

James [00:21:15]: Exactly, exactly. And that’s the thing. One of the ways that we actually grafted this into Mastra before we built our own framework was to make every sub-agent a tool. The input was just natural language, and the output was natural language. And if you needed to have multi-turn, you would basically just put the full prior conversation in as you kept calling the sub-agent as a tool. And at that point, you’re like, okay, the ergonomics are kind of... the framework is fighting me on this. It’s actually helpful for us to basically conceive of it as an org chart. It’s the agent org chart where my EA is DMing other specialists and having brief conversations to support me as their client. Yep. That was a really good deep dive. Thanks for indulging. I feel like you guys are not afraid to make your own tech, which I think is a competitive advantage. I really like that culture. Maybe we should go a bit breadth-first as well. Of course. Because I think we also deep dived a little bit too much in one area. We’ll put up the chart, but I’m also very interested in the internal agent stuff, the operational stuff, and just the general platform scope. So please feel free to go into your spiel on it. Yeah, of course. So one of the things that I was trying to do at the beginning of the year... As CTO, I think it really fell to me to articulate what our AI strategy was as a business. Every member of our board is like, hey, what’s your AI strategy? And while we were doing a lot of things, we’d literally go, he’s got it. Well, yeah. Yeah, and if I didn’t, I’d be in trouble. I think he also was counting on me, given that I was leading the AI organization before CTO, to have... That’s true. But a big part of it was that we were doing a lot with LLMs.
It was more like these little one-off features and, you know, hey, like maybe mix in some suggestions here or maybe do a little bit of ops automation over here. But it wasn’t easy to kind of create like a verbal framework of all of these investments. And without that framework, then we weren’t able to like set a vision or a roadmap for investments. So what we did at the beginning of the year is we took everything that was going on, as well as all of our ambitions, all of the good ideas, as well as like the problems we were trying to tackle. As a business this year, throw it all on the table and see if there were some ways to cluster it into a framework that made sense to the business, to our board, to ourselves. And we came up with, I think this is not particularly novel, but it’s helped us quite a bit. We have like three pillars to our AI strategy. We have our corporate AI strategy, which is how are we going to adopt and like buy AI tooling across the business and basically every single function to be able to 10x our workflows. And we have our operations. We have our operational AI strategy, which is how are we going to buy and build solutions that enable us to lower our cost of operations as a financial institution, because I think it’s fairly intuitive. Like financial institutions like ours face a lot of regulatory expectations and there’s just like a high ops burden for running our business. And so it’s sort of like a lot of kind of internal use cases, like being able to do like fraud detection, underwriting, KYC, be able to handle dispute automation on card transactions. Those types of operational investments are our ops AI pillar. And then the final pillar is the product AI pillar, which is like, are we going to introduce new features that enable Brex to be a part of the corporate AI pillar of our customers? 
It’s like we want to build features and be a solution that somebody else is saying to their board, hey, we adopted Brex and this is part of our corporate AI strategy. And so it’s kind of has this nice little feedback loop and we basically within the company split. You know, did a little bit of divide and conquer where folks in IT and on our people team were more or less spending more of the effort driving on corporate AI, really like looking for making the procurement decisions, like creating a culture of experimentation where we spotlight and incentivize people for trying to sort of improve their personal workflows using AI. And then the pieces that I’ve been more involved in have been operational and product. And we were just talking about products here, which is like the agents on Brex and stuff. But I think that the operational AI. Investments have been some of the most sort of immediately impactful to the business because we have hundreds of people who work in our operations organization. And it’s actually something that differentiates us because our CSAT and the quality of our support and service is very, very high, something we’re very proud of. And so trying to figure out how can we automate a significant portion of this and use LLMs in a way that doesn’t degrade the customer experience and then also kind of addresses, like, what is the future of the roles of the people who we already have working full time for us? So this is where Camilla, our COO, who kind of co-wrote the piece with First Round with me, she’s been leaning really aggressively to help every member of the operations organization start rethinking their role as being not people who kind of execute against an SOP, but are people who are going to, like, build prompts, build evals and, like, become more AI native and, like, the way that they’re going to be used in the future. 
And so a lot of the engineering we’ve done has been to enable folks, say, in fraud and risk to be able to refine prompts and add additional automation to their workflows. Yeah, and it’s the secret fourth pillar, the platform. Yeah, yeah, exactly. That is the thing that ties it all together, the platform. And I think what’s been really nice is that even though the platform is kind of a loose term, because it consists of a wide variety of technologies, and as I said, we haven’t been too religious or dogmatic about everybody needing to be on one particular thing, what we’ve seen is that making a variety of ergonomic options for building with LLMs available really has made it easier for us to make a quick leap forward on operational AI. As soon as we put our mind to it, we said, look, we want to hit an 80% automated acceptance rate for all startup and commercial businesses that apply for Brex. We want a decision within 60 seconds. It’s fully touchless, no humans involved. We were able to break that down and then actually build the agents and build the tools on top of that platform really quickly. And a lot of those tools are the same tools that our product AI agents use as well. I was pretty sold on the ConductorOne piece. I don’t know if this is under exactly that bucket, the ConductorOne provisioning command. I was like, yep, I want that. Yeah, I’d love to talk about that. So that’s actually on the corporate side. And I think that this goes back to maybe another intuitive, but I’d say really bold, decision that we made, which is that we’re not going to try to pick winners in the horse race between the foundational model providers or the agentic coding tools, or basically anywhere where there’s an active horse race.
What we do instead of trying to pick a single solution is we will procure a small number of seats for multiple solutions, and then we’ll give employees the ability to pick whichever one they want to use. And so, for instance, we allow employees to basically go into Slack and use ConductorOne to get a ChatGPT, a Claude, or a Gemini license. And basically you can just build your own stack where you pick your chat provider. As a dev, you can pick between Cursor, Windsurf, and Claude Code credits, and you can basically craft your stack to your preference and easily switch between them. And obviously we have enterprise agreements in place for all of them for the sake of privacy, and we have some non-training guarantees. But it’s fun because when we go to renew these contracts, we can basically resist the need to do a wall-to-wall deployment. We can say, hey, look at the usage trends. Our employees are voting with their feet. They’re voting with their dollars, and maybe a given tool isn’t as hot as it was a year ago. Does this give you a dashboard of what people are choosing? Yeah, actually, we look at that. We were looking at that as we’re going into budgeting for next year. I would love to see that. Anything that’s really up, anything that’s really down? It’s fascinating how different the landscape is every three months. And I think one of the interesting challenges we had early on was getting folks to just try these tools, to try to incorporate agentic coding. Early on, I’d say 12 to 18 months ago now, it was about getting folks to just take the time to try a new workflow.
And now at this point, I think what we’re seeing is that even if a new model hits the scene, like when Codex came out and everybody was like, oh, Codex is better at codegen, but it’s a little bit slower, I find fewer folks are kicking the tires on new things because they’re just so comfortable with the ergonomics of their current workflow. Some folks are just like, I want to stick with Claude Code because I know it now. I’ve been working with it for nine months, so I don’t feel the incessant need to keep trying new things. I’m an iPhone person and I’m just going to stay with an iPhone, even though there’s some really sexy Android hardware out there.

swyx/Alessio [00:30:37]: Do you have one of the big numbers, like 80% of all of our code is written by AI or, but how do you measure it internally?

James [00:30:44]: Yeah, no, not really. I mean, what we do is we’ll measure the attributions on the number of commits that have the “co-authored with” trailer, and we pull some of those stats, but in fact, I don’t index on those at all. And honestly, I don’t know how I’d honestly calculate that number. Yeah, I agree. Yeah, and so we’re at the point now in our agentic coding journey where we’re trying to solve the second-order effects. So a little bit too much slop, maybe not enough... Yeah, exactly. Not enough rigor in code reviews. The adoption is there, and now we have to figure out how to mature in our usage of these tools so that quality and long-term maintainability don’t suffer. And maybe one of the other facets of being able to generate a lot more code more quickly is the drift between team members. The gap in understanding of the code that’s in their services increases as everybody’s moving faster and more independently. That is another risk that we’re starting to see, like in incident response, where folks don’t know a service as well as they used to because it’s changed so much in the past couple of months. Yeah. This has been a major topic for me this year, codebase understanding and slop, because obviously it’s so much easier to generate code, but then now we have to review it, and to some extent you can’t really fight AI with more AI. You can’t just be like, oh, just throw an AI reviewer on the AI code and you’ve solved it. And so you do need to just scale human attention.
And I think that’s something I’ve been pushing a little bit, in terms of, well, every engineer is just going to own more code. Yup. Period. And be parachuted in and be expected to ramp up and be productive and also fix bugs, if you’re on PagerDuty or whatever too. I mean, everyone’s going to try to be more efficient. And you’re supposed to see ROI and productivity, because if you don’t, then what’s the whole point of this? Exactly. Exactly. And I think it’s funny, going back to the point that you could add AI on top to solve the problems that the AI introduces, but that’s like an endless chain. Well, I mean, the CodeRabbits of the world, the Graphites of the world would say, yes, actually you can. And so that’s a little bit of the tension there. Yeah. You know, I’ve been thinking a lot about how the craft of engineering is evolving, and I will say that I feel further away from being able to predict what it looks like than I did this past summer, when I spent a bunch of time on it. I actually basically went on leave for a month and joined the AI team that we were building, just to go and build alongside them. I felt like it was really important for me to deeply understand the problems and the tech. And so that was me writing and pushing code, effectively. And I went through so many different moments of realization, from, oh my God, this is going to change everything, to, oh my God, this is just amplifying all the good and the bad in the industry, to, oh my God, engineers are not going to have a job anymore. And so I felt like I had all the predictions back then.
And at this point now, I’m just very interested to watch the, the phenomenon continue to unfold in front of us. And, uh, I will say. I was chatting with a bunch of really. Right. Uh, you know, college juniors and seniors at a dinner we hosted last night. And, um, all these folks are about to enter the industry, basically having kind of come up in the, the era of agentic development and LLMs. And I asked them like, so what is your workflow when you’re like building, uh, like building a project? Uh, how do you, how do you use agents versus like when you decide you’re going to actually just write code by hand? And I was surprised to hear the consensus was that most people there were using agents to collaborate on. Like building a design document and like collaborating on the architecture of the solution that they want to build, and then they’d be asking it to like emit, uh, you know, a doc or an implementation plan, but then they’ll go and write a lot of the code themselves still. Uh, so it’s a little bit more of the, the, uh, the rubber duck co-architect, uh, uh, use case that was most prevalent in that group. And I, I was very surprised by that. I’m impressed. The kids, the kids are all right. Yeah, I know. No, they still want to, they still want to actually write the code themselves. It’s interesting. Yeah. What we hear from like the Gen Zs.

swyx/Alessio [00:35:18]: At OpenAI, they just YOLO everything into Codex. And yeah, I would say most of the code I generate is like that, but I spend a lot of time on the doc. It’s curious, because when you’re younger in your career, you don’t really have all the mental models of the different patterns to instruct with. I feel like there’s over-reliance, especially if you’re doing the design doc. I feel like most of the senior engineers will spend more time on that. It’s even things like what column you should index, depending on what queries we usually run on this table, and things like that. It’s hard for any AI to know that. Right. And I feel like the role of the more senior engineer should actually be more of this. It’s spending time teaching the AI, and then the AI can teach the junior people in a way. Yeah.

James [00:36:03]: Yeah. And it, it, everything, everything looks like mentorship and management at the end of the day. Right. It’s like, you’re breaking down tasks, you’re, uh, you’re supervising work, you’re giving feedback. Like it’s, you know, it’s basically management. Except that there’s. Agents are really bad at memory still, like they basically have zero memory and, and it’s, it’s, it’s the end of 2025. What’s going on? Yeah.

swyx/Alessio [00:36:26]: Yeah. What’s your internal stack for preferences? There’s the explicit preference you can set for agents with AGENTS.md and all that stuff. And there’s implicit preference with linter rules and things like that, where it just happens and you don’t have to tell it. How do you structure that? Oh, you’re talking about for agentic coding or memory? Yeah. Just the platform. Yeah. For the coding specifically, and then we can talk about the whole Brex platform. Yeah.

James [00:36:53]: Nothing special. Just a lot of explicit rules in .md files. Yeah. And in linting, we still have traditional linters in place for the couple of different language toolchains. And then we’re big fans of Greptile, and we use them for basically all of the smarter-than-linting agentic code review. That’s been the one solution that we’ve aligned around, and that has served us extremely well. Yeah. Greptile? Yeah, no, we’re huge fans there. They’ve built something really impressive. And I think the thing that constantly blows my mind about it is the way that they’re able to have a really impressive signal-to-noise ratio. The comments that it leaves are very, very high signal. I never regret going through all 65 comments that it leaves on my diffs because it catches so many things. Yeah.

swyx/Alessio [00:37:46]: I found the Codex review to be really good. I don’t use Codex for code generation, but the review product is very good for some reason. When I was working in Rails, there was this project called Danger Systems. Oh yeah. It was kind of like a semantic linter. Exactly. I feel like there should be more of that now. The rules are one thing for generation, but I want something in my CI that says, enforce these rules and call out where they’re broken. And then I can just copy-paste that into an agent.

James [00:38:12]: But yeah, when we started building this new agent codebase, as we were saying, we were answering the question, what would you do if you built a Brex disruptor today? And it wouldn’t be to pick Kotlin and Elixir as the backend. And so we actually went with the full TypeScript stack, and we were building on all public interfaces and really trying to make sure that this agent layer was at arm’s length from the good and the bad of the core of our product. And one thing we did early on, and I don’t actually know if this is still true because, again, the team keeps iterating, is that we were having good luck using Claude Code in a GitHub Action to basically go and do more of that Danger-style code review. So we’d have a prompt for it that went through all of the different facets that were more conceptual versus rigidly enforceable by a linter, and have it leave a big comment at the end on your conformance to the idiomatic coding patterns of the new repo. I want us to spend some time on this. You said you wanted to delve into operational agents: customer support, onboarding, KYC, fraud, delinquent accounts, disputes. This is, I imagine, the bulk of the work. Is there anywhere where there’s a good story about how, when you started out, it was going to be one way, and then you discovered through building or through customer contact that it had to go a different direction? That difference in beliefs is something that people can learn from.
The thing that immediately comes to mind is that we believed at the beginning that using RL would be the way that we would end up doing credit and underwriting decisions, like how much of a limit should we give to this business. We thought reinforcement learning would be the way that we would go about building a model that effectively would decision in the way that a human underwriter would. Yeah. It turns out that we made this big investment, we were working with an outside company that specializes in this, and the performance we ended up getting was inferior to just building a web research agent. And so I think what has been most evident in operational AI is that in operations, you need to be able to break down problems really granularly and be able to form SOPs that humans can repeatedly follow and that thus can be audited, because so much of the responsibility in operations is to have auditable, repeatable processes that help to ensure that we’re operating in a compliant manner. And that actually translates so cleanly to LLMs that we haven’t needed to use too many sophisticated techniques in operational AI. It’s been relatively simple, like agents with a few tools, or maybe even, for a lot of problems, a single-turn chat completion. We did one attempt to over-engineer and use more sophisticated techniques, and we discovered that, in fact, the solutions are a bit more plain and less technically sophisticated.
The challenge is really articulating and refining prompts to reflect the execution of the SOP and reflect all the sort of institutional knowledge that isn’t written down so that agents can properly replace like the humans or the contractors we would have making these decisions.
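One way to picture the “SOP as prompt” idea from this answer is the TypeScript sketch below. It assumes, purely for illustration, that an SOP is a versioned list of steps, that a decision is a single-turn completion over a rendered prompt, and that every decision is appended to an audit log. The types, the `decide` function, and the example steps are hypothetical and are not taken from Brex’s platform; `complete` stands in for any chat completion call and is synchronous here only to keep the example short.

```typescript
// Hypothetical sketch: encode an SOP as ordered steps, render it into a single
// prompt, and keep an audit record for each decision. Names are illustrative.

interface SopStep {
  id: string;
  instruction: string;
}

interface Sop {
  name: string;
  version: number;
  steps: SopStep[];
}

interface AuditRecord {
  sop: string;
  sopVersion: number;
  input: string;
  prompt: string;
  output: string;
}

// Render the procedure as a numbered list so each finding can cite a step id.
function renderSopPrompt(sop: Sop, input: string): string {
  const steps = sop.steps
    .map((s, i) => `${i + 1}. [${s.id}] ${s.instruction}`)
    .join("\n");
  return [
    "Follow this procedure exactly and cite the step id for each finding.",
    steps,
    "",
    "Case:",
    input,
  ].join("\n");
}

// `complete` stands in for a single-turn chat completion (assumption).
function decide(
  sop: Sop,
  input: string,
  complete: (prompt: string) => string,
  log: AuditRecord[],
): string {
  const prompt = renderSopPrompt(sop, input);
  const output = complete(prompt);
  // Store exactly what was asked and answered so the decision can be replayed.
  log.push({ sop: sop.name, sopVersion: sop.version, input, prompt, output });
  return output;
}
```

Recording the SOP version and the full rendered prompt with each output is the part of this sketch that speaks to auditability: a reviewer can see which revision of the procedure produced a given decision, in the same way they would check which written SOP a human followed.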

swyx/Alessio [00:41:45]: How do you decide what is worth like spending a lot of time building versus what you think some of these models are just, because some of these tasks are so generic, they’re not really about Brex. Yep. Like you can assume the models will be good at it versus some of them are like very specific to you.

James [00:41:58]: We kind of prioritize the tasks that are most common for the broadest number of customers. And some of them are fairly intuitive, like being able to research a customer to assess the legitimacy of the business and whether that business would fit our ideal customer profile for onboarding, because there are certain types of businesses that we either legally cannot serve or are not comfortable serving. So that’s the type of really basic research and relatively straightforward problem that isn’t hyper Brex-specific. The things that are a little bit more specific to us, or to companies in our sector, would be preparing documentation for a network card dispute. If you go and dispute a transaction on your personal card, you will provide evidence to your card issuer. The card issuer then has to put together a three- or four-page Word document that goes to the card network and then eventually goes to the acquiring bank. And all of that is much more specific to our business. It’s a huge operational overhead for us. And that’s something that we decided to automate later because it’s not on the critical path of serving the vast number of our customers. Disputes are an expensive, but not very common, operational process. And so they’re lower on the stack, and I think we’re getting there right now. But this year has basically been us looking at every single process and stack ranking them. And I will say, the thing that got us started down this path was that we wanted to expand our ideal customer profile to support a wider variety of commercial businesses, which tend to be businesses that aren’t growing as quickly.
So they’re not tech startups, which have a lot of growth, and they’re not enterprises, which also tend to have a lot of growth. It’s more like a law firm or a dentist’s office, these types of solid businesses that we should be able to serve and underwrite. But the cost to onboard them and the cost to serve them, if you have all the humans in the loop, make them ROI negative. And so that was the first use case of AI within our ops organization, which then led to us really understanding we could automate much more than that. Is this Brex going back into SMBs? Ah, that’s a good question. Yeah, yeah. So never let that die. The way we’ve thought about this is we always want to offer our product to customers where we believe we have an offering that is well suited to the needs of those businesses. And I would say that for very small businesses, our offering still isn’t built for that. It’s built for companies that have some degree of scale and typically have at least one person, if not a couple of people, on their finance team. So we consider these to be more the commercial segment. It rhymes with SMB, but our approach back then was a little bit more naive. I would say we were also just going for volume, like a volume game. Our internal controls were not as strong. We didn’t have as much experience underwriting those businesses. And so it really ended up being a case study.

James [00:46:03]: It depends on the scale of yourself as a business when you use these terms.

swyx/Alessio [00:46:28]: And all of these things are built in the Brex agent platform, like all these automations that people build?

James [00:46:32]: Yes, exactly. In fact, most of the operational AI is running on that original platform that we have. One element of it that I didn’t mention is that most of the UI/UX for this platform is built in Retool. So you can go into Retool and there’s a prompt manager, a tool manager, an eval manager, and that’s where much of this was built. The goal with that was, again, to make it more accessible and more ergonomic to get started, but a secondary effect of having a more visual set of tools is that it’s enabled members of the ops organization to go and do prompt refinement themselves. So you don’t need engineers to refine the prompts or even test new foundation models when they come out. I think that’s another fun thing. When a new model drops, folks will go into the platform and run the evals on the new model and see: can we get better performance here? Does this have different latency or different cost characteristics? Yeah, you want the domain experts or the people directly using the tool, not the engineers who are somewhat removed from the tool. I do want to highlight to listeners that a lot of the Brex agent platform is just things that every company should have. Basically: a prompt management system, which we talked about, where the domain experts are doing it; multi-model testing; evaluation and benchmarking frameworks; API integrations for automated workflows; and an MCP-based architecture shared with Brex’s external AI products. This one is obviously very Brex-specific. One thing I did want to highlight that I was semi-impressed by, because very few people talk about this, is the knowledge base for understanding Brex’s business. So do you want to expand on that? Yeah, and this is an area where we’ve only scratched the surface this year, but a big challenge that we face is that the world knowledge built into the model, what GPT-5 thinks Brex does and how it thinks our business operates, is actually quite different from what our business offers today or how our product works. And so we’ve had to work on building a corpus of product documentation and process documentation, and curate this set of information to ground a variety of our LLM applications, including the Brex Assistant, which is the assistant that employees will talk to. We don’t want it to hallucinate features that we don’t have or give wrong information there. And similarly, some of the operational agents need to be grounded on what our ICP is, because if you ask ChatGPT-5 right now, what types of businesses does Brex onboard?
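The model-comparison habit James describes in this answer (rerunning the platform's evals whenever a new model drops and looking at performance, latency, and cost) can be sketched in a few lines. This is a minimal illustration, not Brex's implementation: `EvalCase`, `ModelResult`, `run_evals`, the two stub models, and the eval cases are all hypothetical names and made-up data standing in for real model calls.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable


@dataclass
class EvalCase:
    prompt: str
    expected: str


@dataclass
class ModelResult:
    answer: str
    latency_s: float
    cost_usd: float


# Hypothetical interface: a "model" is any callable from prompt to ModelResult.
Model = Callable[[str], ModelResult]


def run_evals(model: Model, cases: list[EvalCase]) -> dict[str, float]:
    """Run every case once and summarize accuracy, latency, and cost."""
    results = [model(case.prompt) for case in cases]
    correct = [
        r.answer.strip().lower() == case.expected.strip().lower()
        for r, case in zip(results, cases)
    ]
    return {
        "accuracy": sum(correct) / len(cases),
        "mean_latency_s": mean(r.latency_s for r in results),
        "total_cost_usd": sum(r.cost_usd for r in results),
    }


# Made-up eval cases and stub models standing in for real API calls.
CASES = [
    EvalCase("Does this policy require a receipt? (case A)", "yes"),
    EvalCase("Does this policy require a receipt? (case B)", "no"),
]


def current_model(prompt: str) -> ModelResult:
    # Stub: always answers "yes", so it gets case B wrong.
    return ModelResult(answer="yes", latency_s=0.8, cost_usd=0.002)


def candidate_model(prompt: str) -> ModelResult:
    # Stub: answers both cases correctly, faster but at a higher cost.
    answer = "yes" if "case A" in prompt else "no"
    return ModelResult(answer=answer, latency_s=0.5, cost_usd=0.004)


report = {
    "current": run_evals(current_model, CASES),
    "candidate": run_evals(candidate_model, CASES),
}
for name, summary in report.items():
    print(name, summary)
```

Keeping the eval set fixed and swapping only the model callable is what makes the three numbers comparable across models.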

James [00:54:20]: There are evals that are blocking because they would indicate an unacceptable regression. These tend to be accuracy-related evals. But then there are others that are more about tone and coherency, where they’re more subjective, and we’re just looking at those over time as a metric. It’s interesting, I think we’re going to get a big update on how the team is thinking about evals tomorrow at our Friday review. This is an area where I’d say the largest change we needed to make, from how we were executing as a lab or an incubator earlier this year to where we are now, where we’ve shipped and are trying to increase the rigor, has been around avoiding regressions and having increasingly robust evals.
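The split described here, between accuracy evals that block a release and subjective evals that are only tracked over time, could look roughly like the sketch below. Everything in it is an assumption for illustration: the `EvalResult` fields, the `release_gate` function, the `tolerance` value, and the eval names and scores are hypothetical, not Brex's actual tooling.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    name: str
    score: float      # 0.0 to 1.0 on the current build
    baseline: float   # score from the last accepted release
    blocking: bool    # True for accuracy-style evals, False for subjective ones


def release_gate(results: list[EvalResult], tolerance: float = 0.01):
    """Return (ok, blockers, tracked).

    A blocking eval fails the gate if it drops more than `tolerance`
    below its baseline. Non-blocking evals never fail the gate; their
    scores are returned so they can be charted over time.
    """
    blockers = [
        r.name for r in results
        if r.blocking and r.score < r.baseline - tolerance
    ]
    tracked = {r.name: r.score for r in results if not r.blocking}
    return (not blockers, blockers, tracked)


# Made-up results for illustration.
results = [
    EvalResult("answer_accuracy", score=0.91, baseline=0.95, blocking=True),
    EvalResult("tone", score=0.70, baseline=0.80, blocking=False),
]

ok, blockers, tracked = release_gate(results)
print(ok, blockers, tracked)
```

Here the tone score dropped further than the accuracy score, but only the accuracy regression stops the release; the tone score is simply recorded.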

swyx/Alessio [00:55:14]: Yeah, I’ve worked with a company called Verisai that does user simulation, and I think that’s what’s been interesting. There are some things the customer does not expect the model to do today, but they want to track the saturation of the model in a way, if that makes sense. I feel like most companies know what they don’t want to happen, but they cannot quite articulate, “Oh, I want the model to be able to do this in the future. It can’t do it today, but I’ll keep running this eval.”
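One way to picture the "keep running an eval that fails today" idea is a set of aspirational checks that are expected to fail and are reported rather than treated as regressions. This is a hedged sketch: `AspirationalEval`, `report`, and the two example checks are hypothetical names invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AspirationalEval:
    name: str
    check: Callable[[], bool]  # returns True once the assistant can do the task


def report(evals: list[AspirationalEval]) -> dict[str, str]:
    """Run each aspirational eval and label it, without failing the build."""
    status = {}
    for e in evals:
        try:
            passed = e.check()
        except Exception:
            # An unsupported capability may raise instead of returning False.
            passed = False
        status[e.name] = "now passing" if passed else "not yet (expected)"
    return status


def unsupported_capability() -> bool:
    # Stand-in for a task the assistant has no tool for yet.
    raise NotImplementedError("no tool for this yet")


def newly_supported_capability() -> bool:
    # Stand-in for a task that started working in a recent release.
    return True


evals = [
    AspirationalEval("escalate_question_to_finance", unsupported_capability),
    AspirationalEval("summarize_monthly_spend", newly_supported_capability),
]
status = report(evals)
print(status)
```

In a pytest suite, the `xfail` marker expresses a similar idea: the test is expected to fail, and a pass is surfaced as notable.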

James [00:55:41]: That’s actually really interesting to me, and I’m going to take that away and start thinking about it, because we’ve already seen this, where users will ask the assistant for help with things that we don’t support yet or haven’t implemented yet. Those are actually opportunities for us to effectively write a test that’s going to be failing for weeks or months and then eventually go green, as a way for us to show the progression of sophistication of the assistant. I really like that as an idea. Yeah. I wonder how you also catch hallucinations and things that it does. That’s usually the problem: it’ll pretend like it can assist with something. One thing that is really annoying and has been tough to prevent is that the assistant, because it is used to speaking to other agents that can support it in accomplishing various tasks, if you ask it to help with a task that it thinks it probably should have an agent to work with, it’ll just hallucinate. It’s like, “Oh yes, I’ll reach out to the finance team on your behalf to pass this question along,” but it’s not doing anything. There’s no finance team. There’s no way for it to do that. This is something that comes up a lot. It’s like, “Would you like me to ask the finance team?” And there’s no actual tool for that. Do you put guardrails for that? Yeah. That was something that we had to... Like a regex? Oh, no, we don’t. I think we’ve been able to just beat that out of its system with a system prompt. 
But we don’t have as many guardrails in place right now, just around a couple of things that could get us into trouble. Yeah, really extreme ones. Yeah. It’s surprising. When I was first kicking around the idea of all these things, I guess two years ago, I would have said that guardrails would probably be more prevalent, especially in finance use cases, but surprisingly they’re not. Yeah. And that was actually a feature I believe we built into the LLM gateway early on, the sort of last chance... Like hard guardrails. Yeah, exactly. Here are some regexes that will just kill. Yeah, exactly. Or in the way that, if you run afoul of ChatGPT, you just get the inline 500 error. It doesn’t even tell you that it can’t tell you, it just craps out. We built a couple of those circuit breakers, or the ability to put those circuit breakers in, and I don’t believe that we’re using them for anything. One last thing I want to get your thoughts on was AI fluency levels, where you guys have a framework of user, advocate, builder, native, and everyone goes through it, including you. Including Camilla. And I just think it’s interesting. I think it’s a model that other people are thinking about adopting, but they’re worried about rolling it out, that everybody’s going to be bad. And also, how do you have this in-house training course that you keep up to date? Yeah. Just tell us more about it. Yeah. So the operations org is actually ahead of even engineering on this front, as far as trying to create learning pathways for this. And I think part of the reason they’re ahead of us is that in operations, they have to do elaborate training at scale. 
Training is a very big part of how people build aptitude around their job function within ops. Whereas in EPD, a lot of it is getting hands-on building experience, getting mentored, getting code review. But it’s been really neat because I think we’ve really created an environment.